The Downcodes editor walks you through a notable study: researchers used generative AI models, particularly large language models (LLMs), to build an architecture that simulates human behavior with high accuracy. Drawing on data collected in in-depth interviews, they built a "generative agent architecture" that creates more than 1,000 virtual "clones" whose behavior closely matches that of the real people they are modeled on. The work gives social science research a new kind of tool and opens up new possibilities for the field.

A new study shows that generative AI models, particularly large language models (LLMs), can be used to build an architecture that accurately simulates human behavior across a variety of situations. The findings give social science research a powerful new tool.

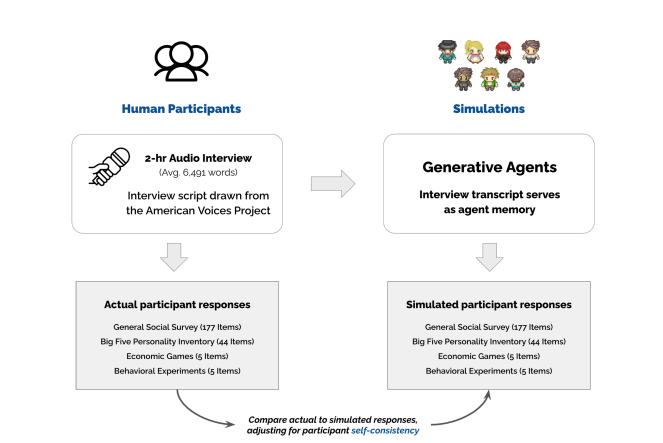

The researchers first recruited more than 1,000 participants from various backgrounds in the United States and conducted two-hour in-depth interviews with them to collect information on their life experiences, opinions, and values. The researchers then used these interview transcripts and a large language model to build a "generative agent architecture."
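The article does not say exactly how the transcripts are fed to the model. Here is a minimal sketch of one simple approach. It assumes the full transcript is placed in the prompt and the model is asked to answer in the interviewee's voice. The function names, the prompt wording, and the `generate` callable are hypothetical placeholders for any LLM text-completion call. This is not the researchers' code.

```python
from typing import Callable


def build_agent_prompt(transcript: str, question: str) -> str:
    """Build a prompt that conditions the model on one participant's interview.

    Hypothetical format: the researchers' actual prompt is not given in the article.
    """
    return (
        "Below is an interview transcript with a study participant.\n"
        "--- INTERVIEW ---\n"
        f"{transcript}\n"
        "--- END INTERVIEW ---\n"
        "Answer the question below as this person would, in the first person.\n"
        f"Question: {question}\n"
        "Answer:"
    )


def ask_clone(transcript: str, question: str, generate: Callable[[str], str]) -> str:
    """Query the 'clone' of one participant.

    `generate` is a hypothetical stand-in for any LLM completion call:
    it takes a prompt string and returns the model's raw text.
    """
    prompt = build_agent_prompt(transcript, question)
    return generate(prompt).strip()  # drop surrounding whitespace from the model output


if __name__ == "__main__":
    # Stub model so the sketch runs without network access or an API key.
    def fake_llm(prompt: str) -> str:
        return " Somewhat agree "

    transcript = "Interviewer: Tell me about your work.\nParticipant: I teach high school math."
    print(ask_clone(transcript, "Do you trust most people?", fake_llm))
```

Replacing `fake_llm` with a real model client turns this into a working, if simplistic, agent. A fuller system would also have to handle two-hour transcripts that may exceed a model's context window.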

From the participants' interviews, the architecture creates more than 1,000 virtual "clones," each with its own personality and behavioral patterns. The researchers evaluated the clones with a battery of standard social science instruments, such as the Big Five personality test and behavioral economics games.

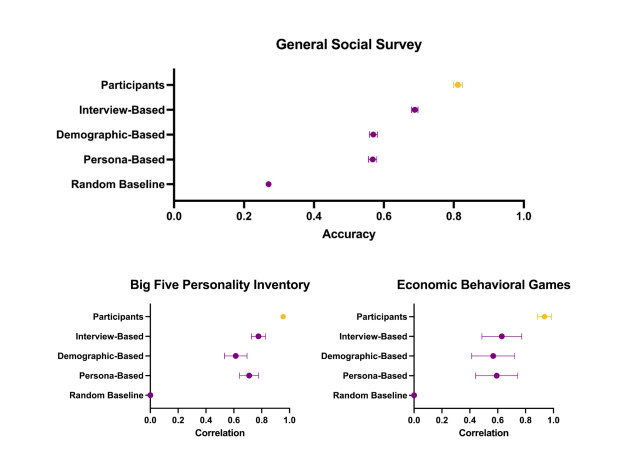

Strikingly, the clones' results closely matched those of the real participants. The clones not only reproduced the participants' questionnaire answers with high accuracy but also replicated their behavior in experiments. For example, in an experiment on how power affects trust, the clones behaved like the real participants: the high-power group's level of trust was significantly lower than the low-power group's.
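"Highly consistent" can be made concrete with a minimal sketch that scores a clone against its human counterpart on a multiple-choice questionnaire. It assumes answers are categorical and compared by exact match. The `normalized_agreement` function is an assumed refinement, not a description of the paper's metric. It divides the clone's score by how well a participant agrees with their own answers when retaking the same test later. Both function names and the sample answers are hypothetical.

```python
from typing import Sequence


def agreement_rate(predicted: Sequence[str], actual: Sequence[str]) -> float:
    """Fraction of questions on which two answer lists match exactly."""
    if len(predicted) != len(actual):
        raise ValueError("answer lists must have the same length")
    if not actual:
        raise ValueError("need at least one question")
    matches = sum(p == a for p, a in zip(predicted, actual))
    return matches / len(actual)


def normalized_agreement(
    clone: Sequence[str], human: Sequence[str], human_retest: Sequence[str]
) -> float:
    """Clone agreement relative to the participant's own test-retest consistency.

    Assumed refinement: people rarely answer identically twice, so a clone
    that matches a person as often as that person matches themselves scores 1.0.
    """
    self_consistency = agreement_rate(human_retest, human)
    if self_consistency == 0:
        raise ValueError("retest shares no answers with the original responses")
    return agreement_rate(clone, human) / self_consistency


if __name__ == "__main__":
    # Hypothetical toy answers for five questions.
    human = ["A", "B", "D", "D", "A"]
    retest = ["A", "B", "D", "C", "A"]  # the same person, answering again later
    clone = ["A", "B", "C", "D", "A"]
    print(agreement_rate(clone, human))                # 4 of 5 match
    print(normalized_agreement(clone, human, retest))  # clone matches as well as the retest does
```

Normalizing this way means a score can exceed 1.0 when a clone happens to match a participant's original answers more often than the participant's own retest does.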

The research shows that generative AI models can create highly realistic "virtual humans" that predict how real people behave. This opens a new approach to social science research. For example, researchers could use these virtual humans to test the effects of new public health policies or marketing strategies without running large-scale experiments on real people.

The researchers also found that demographic information alone is not enough to build convincing virtual humans. Individual behavior is simulated more accurately only when the in-depth interviews are incorporated. This suggests that each person's unique experiences and perspectives are critical to understanding and predicting their behavior.

To protect participants' privacy, the researchers plan to build an "agent library" with two tiers of access. Aggregate data for fixed tasks will be openly available, while individual-level data for open-ended tasks will be restricted. This design makes the virtual humans easier for researchers to use while minimizing the risks posed by the interview content.

This work opens a new door for social science research, and its longer-term impact will be worth watching.

Paper address: https://arxiv.org/pdf/2411.10109

The study demonstrates how well generative AI can simulate human behavior and gives social science research new methods and tools. We look forward to seeing the technology developed and applied further.