The open source AI field has boomed in recent years, but it still lags behind large technology companies. Computing power is only part of the gap; the more critical shortfall is the lack of post-training recipes. AI2's (formerly the Allen Institute for Artificial Intelligence) latest breakthrough, the Tülu3 post-training recipe, offers a powerful tool for closing this gap. The Downcodes editor takes an in-depth look at how this technology empowers open source AI and makes large language models that were once difficult to control easier to use and customize.

In open source AI, the gap with large technology companies is not only a matter of computing power. AI2 (formerly the Allen Institute for Artificial Intelligence) is working to bridge this gap through a series of groundbreaking initiatives. Its newly released Tülu3 post-training recipe puts the conversion of raw large language models into practical AI systems within anyone's reach.

Contrary to common belief, base language models cannot be put to use directly after pre-training. Post-training is in fact the stage that determines a model's final value: it is here that the model is transformed from an all-knowing network that lacks judgment into a practical tool with a specific purpose.

For a long time, major companies have kept their post-training recipes secret. Anyone can build a model with the latest techniques, but making that model useful in a specific domain, such as psychological counseling or research analysis, requires specialized post-training. Even for projects like Meta's Llama, which is promoted as open source, the provenance of the raw model and the general methods used to train it remain closely guarded.

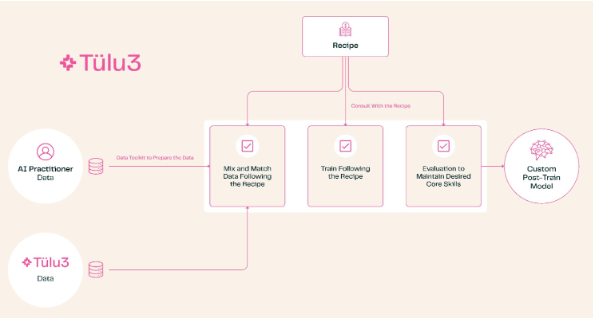

Tülu3 changes this. This complete post-training solution covers the full pipeline, from topic selection and data curation to reinforcement learning and fine-tuning. Users can tune a model's capabilities to their needs, for example strengthening its math and programming skills or deprioritizing multilingual capabilities.

AI2's tests show that models trained with Tülu3 perform on par with leading open source models. This matters because it gives companies a fully self-controlled option. Institutions that handle sensitive data, such as medical research organizations, no longer need to rely on third-party APIs or custom services; they can run the entire training process locally, cutting costs and protecting privacy.

AI2 has not only released this recipe but has also been the first to apply it to its own products. Although the current test results are based on Llama models, AI2 plans to launch a new model built on its own OLMo and trained with Tülu3, which would be truly open source from end to end.

This release demonstrates AI2's commitment to democratizing AI and gives a boost to the entire open source AI community, bringing us one step closer to a truly open and transparent AI ecosystem.

The open-sourcing of Tülu3 marks a major step forward for open source AI. It lowers the barrier to building AI applications, promotes fair access to and sharing of AI technology, and opens up new possibilities for future AI development. We look forward to more open source projects like it, working together to build a more vibrant AI ecosystem.