Meta's SAM model excels at image segmentation, but video object tracking remains a challenge. In complex scenes especially, the "fixed window" memory mechanism used for video lets errors propagate and degrades tracking. To address this, researchers at the University of Washington developed SAMURAI, an adaptation of SAM 2 that significantly improves the accuracy and stability of video object tracking.

Meta's "Segment Anything" model, SAM, is a top performer in image segmentation, but video object tracking stretches it beyond its strengths, especially in crowded scenes, with fast-moving targets, or when objects play "hide and seek" behind occlusions. In those cases the model gets confused. The reason is that the memory mechanism works like a "fixed window": it stores only the most recent frames and ignores the quality of what it remembers, so errors propagate through the video and tracking quality drops sharply.

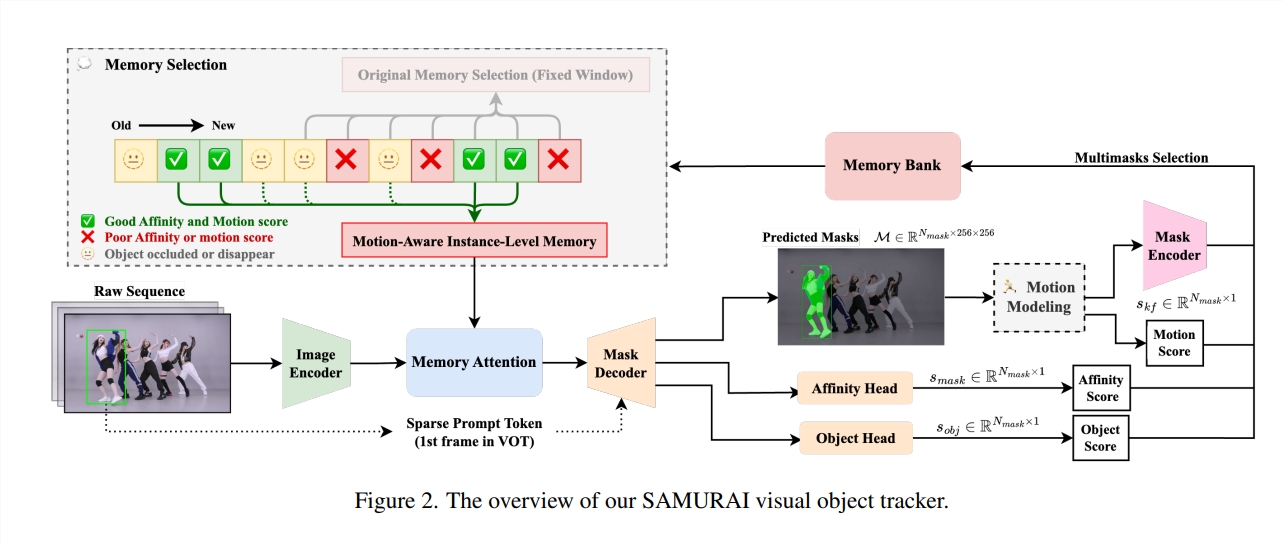

To solve this problem, researchers at the University of Washington developed SAMURAI, a heavily modified version of SAM 2 built specifically for video object tracking. The name is bold, and the model backs it up: it combines temporal motion cues with a newly proposed motion-aware memory selection mechanism. Like a highly skilled warrior, it predicts object trajectories accurately and refines mask selection, achieving robust, accurate tracking without retraining or fine-tuning.

The secret of SAMURAI lies in two major innovations:

The first move: a motion modeling system. Like a samurai's "eagle eye", it predicts object positions more accurately in complex scenes, which improves mask selection so that SAMURAI is not confused by similar-looking objects.

The second move: a motion-aware memory selection mechanism. SAMURAI abandons SAM 2's simple "fixed window" memory and instead uses a hybrid scoring system that combines the original mask similarity score with object and motion scores. Like a samurai carefully choosing weapons, it retains only the most relevant historical information, which improves the model's overall tracking reliability and avoids error propagation.
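The difference between a fixed window and score-based selection can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `FrameScores` container, the function names, and the threshold values are hypothetical and are not taken from the SAMURAI code.

```python
from dataclasses import dataclass


@dataclass
class FrameScores:
    frame_idx: int
    mask_score: float    # mask similarity score
    object_score: float  # object score
    motion_score: float  # motion score


# Hypothetical thresholds, chosen only for this illustration.
MASK_T, OBJECT_T, MOTION_T = 0.5, 0.5, 0.5


def fixed_window(history, size):
    """Baseline: keep the most recent `size` frames regardless of quality."""
    return [f.frame_idx for f in history[-size:]]


def select_memory(history, size):
    """Walk back from the newest frame and keep only frames whose
    three scores all reach their thresholds, up to `size` frames."""
    chosen = []
    for f in reversed(history):
        if (f.mask_score >= MASK_T
                and f.object_score >= OBJECT_T
                and f.motion_score >= MOTION_T):
            chosen.append(f.frame_idx)
            if len(chosen) == size:
                break
    return chosen[::-1]  # restore chronological order


if __name__ == "__main__":
    history = [
        FrameScores(0, 0.9, 0.9, 0.9),
        FrameScores(1, 0.9, 0.9, 0.2),  # motion disagrees with the mask
        FrameScores(2, 0.3, 0.9, 0.9),  # poor mask
        FrameScores(3, 0.8, 0.7, 0.8),
    ]
    print(fixed_window(history, 2))
    print(select_memory(history, 2))
```

In this toy history, the fixed window keeps the poor-quality frame 2 simply because it is recent, while the score-gated selection skips it and reaches back to an older, cleaner frame.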

SAMURAI is not only skilled but also agile: it runs in real time. More importantly, it shows strong zero-shot performance across a range of benchmark datasets, which means it adapts to varied scenarios without task-specific training and generalizes well.

In benchmark evaluations, SAMURAI achieved significant improvements over existing trackers in both success rate and precision. For example, it gains 7.1% AUC on the LaSOT-ext dataset and 3.5% AO on the GOT-10k dataset. More surprisingly, it achieves results comparable to fully supervised methods on the LaSOT dataset, which demonstrates its strength in complex tracking scenarios and its potential for practical use in dynamic environments.

SAMURAI's success comes from its clever use of motion information. The researchers combined a traditional Kalman filter with SAM 2: by predicting an object's location and size, the filter helps the model pick the most reliable mask from multiple candidates. They also designed a memory selection mechanism based on three scores (mask similarity score, object appearance score, and motion score). A frame is selected for the memory bank only when all three scores reach their thresholds. This selective memory keeps irrelevant information out and improves tracking accuracy.
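The idea of using a Kalman filter to choose among candidate masks can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: each box coordinate `(cx, cy, w, h)` gets its own constant-velocity filter, the motion score is taken as the IoU between the predicted box and a candidate's box, and the final score is a weighted sum with a hypothetical weight `alpha`. The class names, noise values, and candidate dictionary layout are all invented for the example.

```python
class ScalarKalman:
    """Constant-velocity Kalman filter for one coordinate (state: value, rate)."""

    def __init__(self, value, q=1e-2, r=1e-1):
        self.p, self.v = value, 0.0           # estimated value and rate
        self.P = [[1.0, 0.0], [0.0, 1.0]]     # state covariance
        self.q, self.r = q, r                 # process / measurement noise

    def predict(self):
        (a, b), (c, d) = self.P
        self.p += self.v                      # value advances by its rate
        self.P = [[a + b + c + d + self.q, b + d], [c + d, d + self.q]]
        return self.p

    def update(self, z):
        (a, b), (c, d) = self.P
        s = a + self.r                        # innovation covariance
        k0, k1 = a / s, c / s                 # Kalman gain
        y = z - self.p                        # innovation
        self.p += k0 * y
        self.v += k1 * y
        self.P = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]


class BoxKalman:
    """Tracks a box (cx, cy, w, h) with one scalar filter per coordinate."""

    def __init__(self, box):
        self.filters = [ScalarKalman(x) for x in box]

    def predict(self):
        return tuple(f.predict() for f in self.filters)

    def update(self, box):
        for f, z in zip(self.filters, box):
            f.update(z)


def iou(a, b):
    """IoU of two boxes given as (cx, cy, w, h)."""
    ax1, ay1, ax2, ay2 = a[0] - a[2] / 2, a[1] - a[3] / 2, a[0] + a[2] / 2, a[1] + a[3] / 2
    bx1, by1, bx2, by2 = b[0] - b[2] / 2, b[1] - b[3] / 2, b[0] + b[2] / 2, b[1] + b[3] / 2
    iw = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    ih = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def select_mask(tracker, candidates, alpha=0.25):
    """Pick the candidate with the best blend of motion and mask scores.
    `alpha` is a hypothetical weight for the motion term."""
    predicted = tracker.predict()
    best = max(
        candidates,
        key=lambda c: alpha * iou(predicted, c["box"]) + (1 - alpha) * c["mask_score"],
    )
    tracker.update(best["box"])               # feed the chosen box back in
    return best


if __name__ == "__main__":
    tracker = BoxKalman((100.0, 50.0, 20.0, 40.0))
    for t in range(1, 5):                     # target moves right 10 px per frame
        box = (100.0 + 10 * t, 50.0, 20.0, 40.0)
        select_mask(tracker, [{"name": "target", "box": box, "mask_score": 0.9}])
    candidates = [
        {"name": "target", "box": (150.0, 50.0, 20.0, 40.0), "mask_score": 0.80},
        {"name": "distractor", "box": (100.0, 50.0, 20.0, 40.0), "mask_score": 0.85},
    ]
    print(select_mask(tracker, candidates)["name"])
```

In this toy setup the distractor has the higher mask score, but it sits where the object used to be, so the motion term tips the choice toward the candidate that matches the predicted trajectory.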

SAMURAI brings new hope to the field of video object tracking. It not only outperforms existing trackers but also requires no retraining or fine-tuning, so it can be applied to a wide range of scenarios with little effort. I believe SAMURAI will go on to play an important role in fields such as autonomous driving, robotics, and video surveillance.

Project address: https://yangchris11.github.io/samurai/

Paper address: https://arxiv.org/pdf/2411.11922

The editor of Downcodes concludes: SAMURAI marks significant progress in video object tracking. Its memory selection mechanism and motion modeling system address the shortcomings of earlier methods, and its application prospects are broad.