Downcodes editor reports: In recent years, generating realistic human animation has become a research hotspot in computer vision and animation. A new method, EchoMimicV2, stands out. It generates high-quality half-body human animations from a reference image, an audio clip, and a gesture sequence, opening up new possibilities for digital humans. It overcomes limitations of traditional methods by simplifying the generation pipeline while improving the detail and expressiveness of the resulting animation. Below, we look at what is new in EchoMimicV2.


Simply put, EchoMimicV2 takes one image, one gesture video, and one audio clip as input and generates a new digital human: a video in which the person from the image speaks the input audio while following the gestures and head movements of the input video.

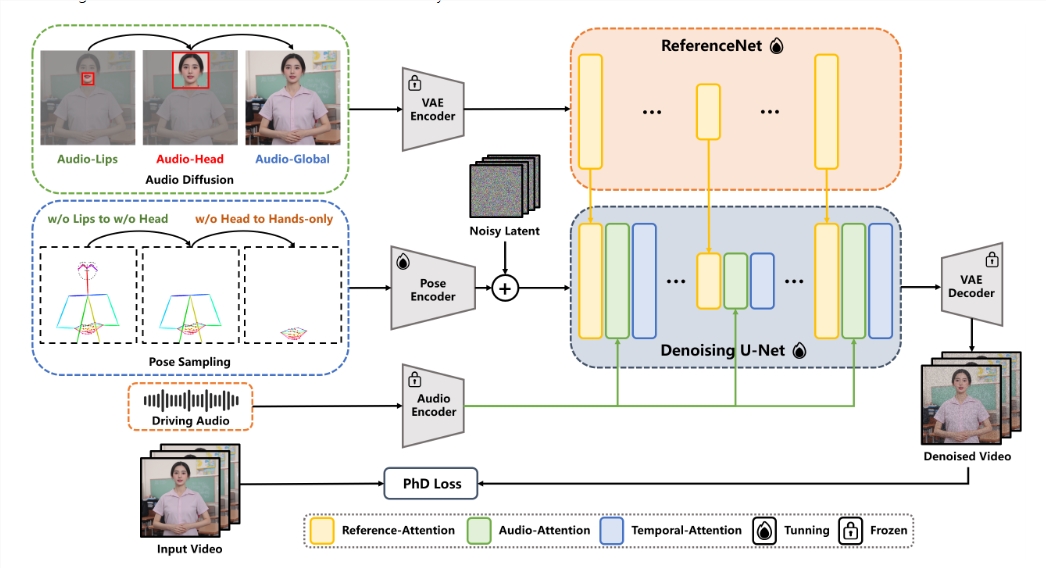

EchoMimicV2 was developed to address practical challenges in existing animation generation. Traditional methods often rely on multiple control conditions, such as audio, pose, or motion maps. This makes generation complex and cumbersome, and such methods are often limited to driving the head alone. The research team therefore proposed a strategy called Audio-Pose Dynamic Harmonization, which aims to simplify the generation process while improving the detail and expressiveness of half-body animation.

To cope with the scarcity of half-body data, the researchers introduced a "head local attention" mechanism. It lets the model make use of head image data during training while omitting that data at inference time, which gives animation generation greater flexibility.

In addition, the research team designed a "stage-specific denoising loss" that guides the motion, detail, and low-level quality of the animation at different stages of denoising. According to the researchers, this multi-stage optimization significantly improves the quality of the generated animation.
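The article does not give the loss formula, so the following Python sketch only illustrates the general idea of a denoising loss that changes with the diffusion stage. It rests on loudly stated assumptions: timesteps are split into three equal phases, and each phase uses a different, hypothetical loss term (masked MSE over a pose region for "motion", an L1 loss on first differences for "detail", plain MSE for "quality"). The names `phase_for_timestep`, `stage_specific_loss`, and `pose_mask` are illustrative only. This is not EchoMimicV2's actual loss.

```python
from typing import Optional, Sequence


def phase_for_timestep(t: int, num_steps: int) -> str:
    """Map a diffusion timestep to a phase (assumed equal three-way split).

    Large t means high noise; reverse diffusion starts there and moves toward t = 0.
    """
    frac = t / num_steps
    if frac >= 2 / 3:
        return "motion"   # high noise: coarse pose and layout (assumption)
    if frac >= 1 / 3:
        return "detail"   # mid noise: finer structure (assumption)
    return "quality"      # low noise: low-level appearance (assumption)


def mse(pred: Sequence[float], target: Sequence[float],
        mask: Optional[Sequence[bool]] = None) -> float:
    """Mean squared error, optionally restricted to positions where mask is True."""
    if mask is None:
        mask = [True] * len(pred)
    errors = [(p - q) ** 2 for p, q, m in zip(pred, target, mask) if m]
    return sum(errors) / len(errors) if errors else 0.0


def detail_l1(pred: Sequence[float], target: Sequence[float]) -> float:
    """L1 distance between first differences, a crude proxy for edges and detail."""
    dp = [b - a for a, b in zip(pred, pred[1:])]
    dt = [b - a for a, b in zip(target, target[1:])]
    diffs = [abs(x - y) for x, y in zip(dp, dt)]
    return sum(diffs) / len(diffs) if diffs else 0.0


def stage_specific_loss(pred: Sequence[float], target: Sequence[float],
                        t: int, num_steps: int,
                        pose_mask: Sequence[bool]) -> float:
    """Pick a loss term according to the (hypothetical) phase of timestep t."""
    phase = phase_for_timestep(t, num_steps)
    if phase == "motion":
        return mse(pred, target, pose_mask)  # score only the pose region
    if phase == "detail":
        return detail_l1(pred, target)       # penalize mismatched local changes
    return mse(pred, target)                 # plain reconstruction loss


# Toy 1-D "frames"; a real model would operate on batched latent tensors.
pred = [0.0, 1.0, 2.0, 3.0]
target = [0.0, 1.0, 3.0, 3.0]
pose_mask = [False, False, True, True]
for t in (900, 500, 100):
    print(t, phase_for_timestep(t, 1000),
          stage_specific_loss(pred, target, t, 1000, pose_mask))
```

The three-way split and the per-phase loss terms are choices made purely for illustration; a real implementation might blend several terms with timestep-dependent weights rather than switching between them.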

To validate EchoMimicV2, the researchers also introduced a new benchmark for evaluating half-body human animation. In extensive experiments, EchoMimicV2 outperformed existing methods in both quantitative and qualitative evaluations, demonstrating strong potential in the field of animation.

With its innovative techniques and strong performance, EchoMimicV2 opens a new chapter for digital human animation, and its future development is worth watching. The Downcodes editor will continue to follow technical progress in this field and bring readers more reports.