The rapid development of generative AI has brought convenience, but it has also fueled the spread of false information. To address this problem, technology giant Microsoft recently launched a new tool called Correction, which is designed to fix misinformation in AI-generated content. In this article, the Downcodes editor takes an in-depth look at how the tool works, how it can be used, and the challenges it faces.

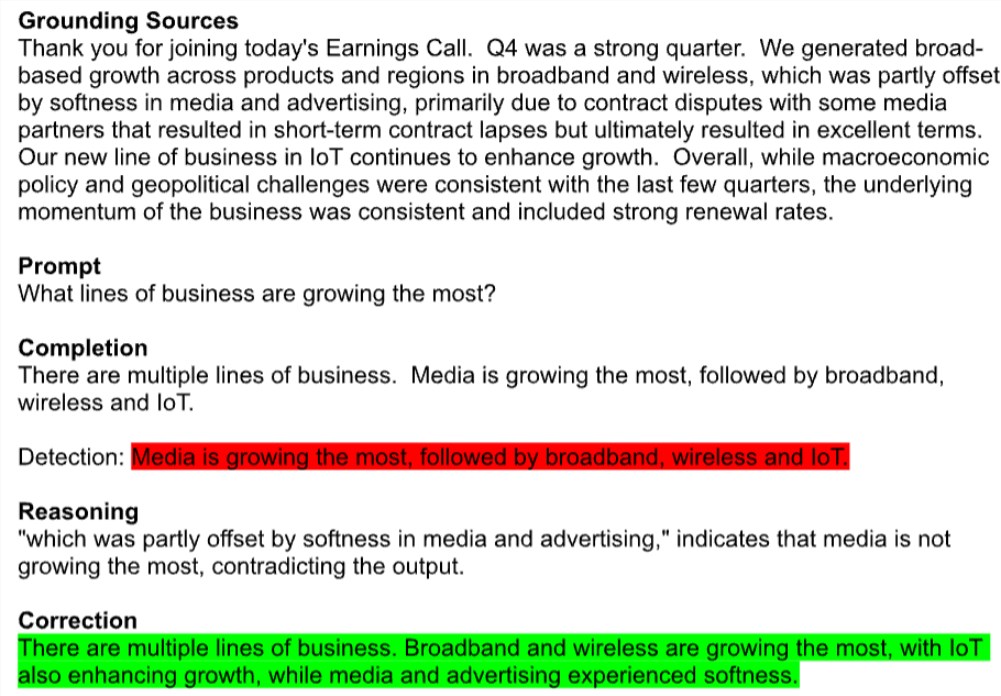

Correction is currently available in preview as part of Microsoft's Azure AI Content Safety API. The tool automatically flags text that may be erroneous, such as a summary of a company's quarterly earnings call that misattributes quotes, and corrects those errors by comparing the text against trusted source material. Notably, it can be used with any text-generating AI model, including Meta's Llama and OpenAI's GPT-4.

A Microsoft spokesperson said that Correction combines small language models with large language models to keep generated content consistent with the underlying source documents. The company hopes the feature will help developers in fields such as medicine improve the accuracy of their applications' responses.

However, experts remain cautious. Os Keyes, a PhD candidate at the University of Washington, argues that trying to eliminate hallucinations from generative AI is like trying to eliminate hydrogen from water: they are a fundamental part of how the technology works. Text generation models produce false information because they don't actually know anything; they simply make guesses based on patterns in their training data. One study found that OpenAI's ChatGPT had an error rate of up to 50% when answering medical questions.

Microsoft's solution is a pair of cross-referencing meta-models that work somewhat like copy editors to identify and rewrite false information. A classifier model looks for possibly incorrect, fabricated, or irrelevant snippets of AI-generated text. If it detects any, a second model, a language model, is brought in to try to correct them in accordance with specified grounding documents.
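To make the two-stage "flag, then correct" idea concrete, here is a deliberately simplified Python sketch. It is not Microsoft's implementation and does not call the Azure AI Content Safety API. The function names (`flag_and_correct`, `is_ungrounded`, `correct`) and the sample text are hypothetical, and both models are replaced by word-overlap heuristics: the stand-in "classifier" flags any sentence containing a word or number that never appears in the grounding document, and the stand-in "corrector" swaps in the most similar source sentence, or drops the sentence as irrelevant if nothing in the source is related enough.

```python
import re
from typing import Optional

# Toy illustration of a "flag, then correct" pipeline.
# NOT Microsoft's method and NOT the Azure AI Content Safety API:
# both stages are hypothetical word-overlap heuristics, not trained models.


def tokens(text: str) -> set:
    """Lowercased word/number tokens (e.g. "$5.2"); a crude stand-in for meaning."""
    return set(re.findall(r"[a-z0-9$%]+(?:\.[0-9]+)?", text.lower()))


def sentences(text: str) -> list:
    """Naive sentence splitter: break on ., ! or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def is_ungrounded(sentence: str, grounding_vocab: set) -> bool:
    """Stage 1 stand-in 'classifier': flag the sentence if any of its
    tokens never appears anywhere in the grounding document."""
    return not tokens(sentence) <= grounding_vocab


def jaccard(a: set, b: set) -> float:
    """Set overlap |a & b| / |a | b|, used to find the closest source sentence."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def correct(sentence: str, grounding_sents: list, min_overlap: float = 0.3) -> Optional[str]:
    """Stage 2 stand-in 'corrector': return the most similar grounding
    sentence, or None (drop it) if nothing is similar enough."""
    if not grounding_sents:
        return None
    best = max(grounding_sents, key=lambda g: jaccard(tokens(sentence), tokens(g)))
    if jaccard(tokens(sentence), tokens(best)) >= min_overlap:
        return best
    return None


def flag_and_correct(generated: str, grounding: str) -> tuple:
    """Run stage 1 on every sentence; run stage 2 only on flagged ones."""
    vocab = tokens(grounding)
    grounding_sents = sentences(grounding)
    output, flagged = [], []
    for sent in sentences(generated):
        if not is_ungrounded(sent, vocab):
            output.append(sent)  # supported by the source: keep as-is
            continue
        flagged.append(sent)
        fixed = correct(sent, grounding_sents)
        if fixed is not None:
            output.append(fixed)  # replaced with grounded text
    return " ".join(output), flagged


# Hypothetical sample data for illustration only.
GROUNDING = ("Contoso reported revenue of $5.2 billion. "
             "The CEO said demand for cloud services remained strong.")
GENERATED = ("Contoso reported revenue of $6.1 billion. "
             "The CEO said demand for cloud services remained strong. "
             "The company also plans to open a lunar office.")

if __name__ == "__main__":
    text, flagged = flag_and_correct(GENERATED, GROUNDING)
    print("Flagged:", flagged)
    print("Corrected:", text)
```

In this sketch the misquoted revenue figure is replaced with the sourced one and the unrelated sentence is dropped, while the supported sentence passes through unchanged. The design choice it mirrors is that correction only runs on what the first stage flags, so neither stage can do better than the grounding text it is given; a real system would use trained models rather than word overlap.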

Although Microsoft claims that Correction can significantly improve the reliability and trustworthiness of AI-generated content, experts still have doubts. Mike Cook, a researcher at Queen Mary University of London, pointed out that even if Correction works as advertised, it may worsen trust and explainability problems in AI: the service could lull users into a false sense of security, leading them to believe that models are more accurate than they actually are.

It is worth noting that Correction also serves Microsoft's business interests. While the feature itself is free, the groundedness detection needed to spot false information is free only up to a monthly usage limit, and any usage beyond that is charged.

Microsoft is clearly under pressure to prove the value of its AI investments. In the second quarter of this year alone, the company spent nearly $19 billion on AI-related capital expenditures and equipment, yet it has so far generated only modest revenue from AI. Recently, some Wall Street analysts downgraded Microsoft's stock, questioning the viability of its long-term AI strategy.

Accuracy and the risk of false information have become among the biggest concerns for businesses piloting AI tools. Cook concluded that in a normal product life cycle, generative AI would still be in academic research and development, being improved while its strengths and weaknesses are better understood. Instead, it has already been deployed across multiple industries.

Microsoft's Correction tool is clearly an attempt to tackle AI misinformation, but whether it can truly resolve the crisis of trust in generative AI remains to be seen. As AI technology develops rapidly, balancing innovation against risk will be an important issue for the entire industry.

All in all, Microsoft's Correction tool reflects the industry's growing attention to AI-generated false information, but it also prompts deeper reflection on the credibility and business model of AI. Going forward, making better use of AI while effectively controlling its risks will be a major challenge for everyone.