Large language models (LLMs) have shown great potential in the field of data processing, but processing complex unstructured data still faces challenges. Existing LLM-based frameworks often focus on cost and ignore accuracy improvement, especially in complex tasks. The editor of Downcodes will introduce to you a breakthrough research result-DocETL system, which effectively solves the accuracy problem of LLM when processing complex documents.

In recent years, large language models (LLMs) have received widespread attention in the field of data management, and their application scope has continued to expand, including data integration, database tuning, query optimization, and data cleaning. However, there are still many challenges when dealing with unstructured data, especially complex documents.

At present, some unstructured data processing frameworks based on LLM tend to focus more on reducing costs, while ignoring the issue of improving processing accuracy. This problem is particularly prominent when analyzing complex tasks, because the results output by LLM often cannot accurately meet the specific needs of users.

In the case of the UC Berkeley Investigative Reporting Project, researchers hope to analyze large amounts of police records obtained through records requests to reveal officer misconduct and potential procedural violations. The task, called Police Misconduct Identification (PMI), requires processing multiple types of documents, extracting and summarizing key information, and simultaneously aggregating data across multiple documents to generate detailed behavioral summaries. Existing methods usually use LLM only once to process each document. This single-step mapping operation is often insufficient in accuracy, especially when the document length exceeds the context limit of LLM, important information may be missed.

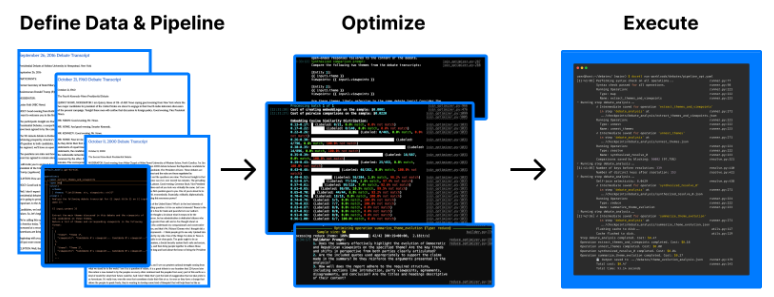

To solve these problems, a research team from the University of California, Berkeley, and Columbia University proposed an innovative system called DocETL. DocETL aims to optimize complex document processing processes and solve the limitations of existing LLM. This system provides a declarative interface that allows users to flexibly define processing flows and leverage an agent-based framework for automatic optimization. Key features of DocETL include a logic rewriting process tailored for LLM tasks, an agent-guided plan evaluation mechanism, and an efficient optimization algorithm that helps identify processing plans with the highest potential.

When evaluated on the police misconduct identification task, DocETL adopted a set of 227 documents from California police departments and faced multiple challenges such as document length exceeding the LLM context limit. Evaluated across different pipeline variants, DocETL shows a unique ability in optimizing complex document processing tasks.

Human evaluation and LLM review show that the output accuracy of DocETL is 1.34 times higher than that of traditional methods, indicating the importance and effectiveness of this system in processing complex document tasks.

To sum up, DocETL, as an innovative declarative system, can not only effectively solve many problems in complex document processing, but also lay a solid foundation for future research and application.

Paper: https://arxiv.org/abs/2410.12189v1

Project: https://github.com/ucbepic/docetl

Highlight:

LLM presents significant challenges with its lack of accuracy when handling complex documents.

The DocETL system provides a flexible declarative interface and automatic optimization capabilities for document processing.

Through human evaluation, DocETL output quality is significantly improved, with an improvement of 1.34 times.

The emergence of the DocETL system provides new ideas for solving the accuracy problem of LLM in processing complex documents. Its excellent performance in practical applications also lays a solid foundation for the future application of LLM in the field of data processing. We look forward to the emergence of more similar innovative technologies to promote LLM technology to better serve various fields.