Downcodes has learned that a new DeepMind study uses large-scale Transformer models to make significant progress in chess. The researchers built ChessBench, a data set of 10 million human games, and trained Transformer models with up to 270 million parameters to probe how well they handle complex planning problems. The work has drawn wide attention in the AI community and offers both a new research direction and a new benchmark for studying AI planning capabilities.

The DeepMind paper, which applies large-scale Transformers to chess, has sparked extensive discussion in the AI community. Using the new ChessBench data set, the researchers trained Transformer models with up to 270 million parameters to test their ability to solve planning problems as complex as chess.

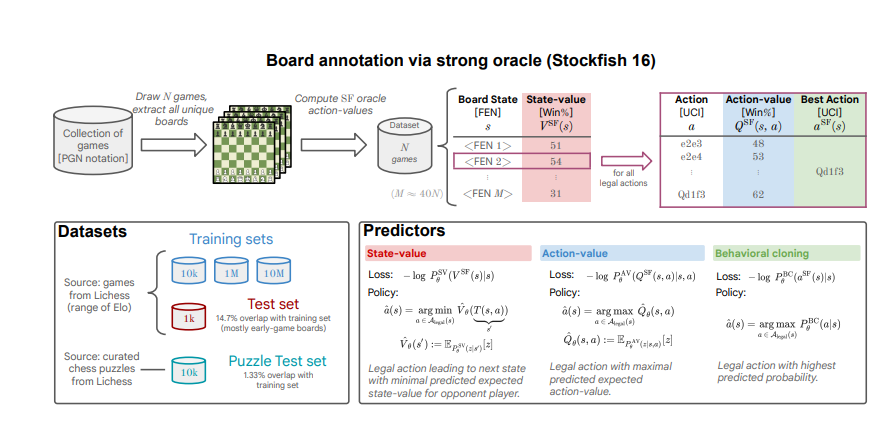

The ChessBench data set contains 10 million human games collected from the Lichess platform. The games were annotated with the top chess engine Stockfish 16, yielding roughly 15 billion data points: for each board state, the win probability, the best move, and the value of every legal move.
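To make the annotation concrete, here is a minimal Python sketch of what one annotated position could look like. The record layout (`AnnotatedState`), the helper `annotate`, and the centipawn inputs are hypothetical illustrations, not the official ChessBench schema. The centipawn-to-win-probability curve is the one Lichess publishes, assumed here for illustration rather than confirmed as the exact conversion behind ChessBench.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


def win_prob_from_centipawns(cp: float) -> float:
    # Lichess's curve, 50 + 50 * (2 / (1 + exp(-0.00368208 * cp)) - 1) in percent,
    # simplifies to this logistic function. Assumed here, not confirmed for ChessBench.
    return 1.0 / (1.0 + math.exp(-0.00368208 * cp))


@dataclass
class AnnotatedState:
    # Hypothetical record layout for one position, not the official schema.
    fen: str                         # board position in FEN notation
    state_win_prob: float            # win probability of the position itself
    best_move: str                   # highest-valued move, in UCI notation
    action_values: dict[str, float]  # each legal move -> win probability after it


def annotate(fen: str, state_cp: float, move_cps: dict[str, float]) -> AnnotatedState:
    # Convert engine centipawn scores (from the side to move's perspective)
    # into win probabilities. In this sketch the best move is simply the
    # highest-valued one.
    action_values = {m: win_prob_from_centipawns(cp) for m, cp in move_cps.items()}
    best = max(action_values, key=action_values.get)
    return AnnotatedState(fen, win_prob_from_centipawns(state_cp), best, action_values)


# Illustrative scores only, not real Stockfish output.
start = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1"
record = annotate(start, 30, {"e2e4": 35, "d2d4": 30, "g1f3": 25})
print(record.best_move)  # e2e4
```

Each (position, legal move) pair contributes one action-value label, which is why a few million games can expand into billions of data points.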

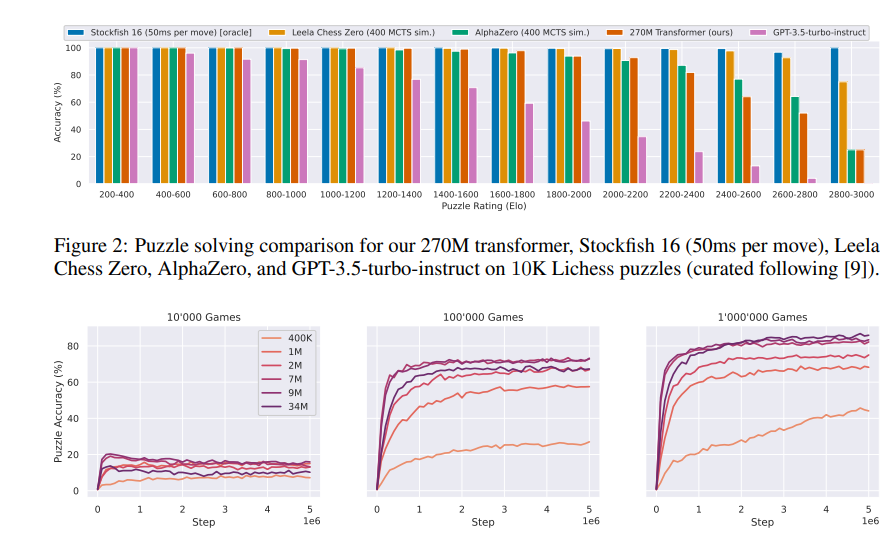

The researchers trained the Transformer models with supervised learning to predict the value of every legal move in a given board state. Experiments show that, even without any explicit search algorithm, the largest model makes fairly accurate predictions on board states it has never seen, demonstrating strong generalization.
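Playing "without search" means the model is queried once per legal move and the highest-scoring move is played, with no look-ahead tree. The sketch below assumes, as a hypothetical setup, that the network outputs logits over 128 uniform win-probability bins for each (position, move) pair; the bin count is an assumption, and `toy_model` is a stand-in for the trained Transformer, not DeepMind's code.

```python
from __future__ import annotations

import math
from typing import Callable, Sequence

NUM_BINS = 128  # assumed number of win-probability bins

# A "model" maps (FEN, UCI move) to logits over the value bins.
Model = Callable[[str, str], Sequence[float]]


def softmax(logits: Sequence[float]) -> list[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def expected_win_prob(logits: Sequence[float]) -> float:
    # Bins split [0, 1] uniformly; each bin is represented by its center.
    n = len(logits)
    centers = [(i + 0.5) / n for i in range(n)]
    return sum(p * c for p, c in zip(softmax(logits), centers))


def pick_move(fen: str, legal_moves: Sequence[str], model: Model) -> str:
    # One forward pass per legal move and no tree search: play the move
    # whose predicted win probability is highest.
    scores = {move: expected_win_prob(model(fen, move)) for move in legal_moves}
    return max(scores, key=scores.get)


def toy_model(fen: str, move: str) -> list[float]:
    # Hypothetical stand-in for the trained Transformer: logits peak at a
    # hand-picked bin per move and ignore the position entirely.
    peak = {"e2e4": 90, "d2d4": 80, "a2a3": 50}.get(move, 64)
    return [-abs(i - peak) for i in range(NUM_BINS)]


start = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1"
print(pick_move(start, ["a2a3", "d2d4", "e2e4"], toy_model))  # e2e4
```

The cost of a move decision here grows only with the number of legal moves, whereas a search engine's cost grows with the size of the tree it explores.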

Surprisingly, when the model played blitz games against human players on Lichess, it reached an Elo rating of 2895, putting it at chess master level.
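For a sense of scale, the classic Elo model turns a rating gap into an expected score per game. Note that Lichess itself computes ratings with the Glicko-2 system, so the formula below is only an approximate, illustrative reading of the 2895 figure, and the 2695-rated opponent is hypothetical.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    # Classic Elo expected score of player A against player B
    # (1 point for a win, 0.5 for a draw, 0 for a loss).
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


# A hypothetical 200-point gap: the 2895-rated side is expected
# to score about 0.76 points per game.
print(round(expected_score(2895, 2695), 2))  # 0.76
```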

The researchers also compared the model with engines trained through reinforcement learning and self-play, such as Leela Chess Zero and AlphaZero. The results show that supervised learning can distill a close approximation of Stockfish's search-based algorithm into a Transformer, but that perfect distillation remains a challenge.
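"Distillation" here means the Transformer is trained to reproduce, in a single forward pass, labels that Stockfish produced with search. A minimal sketch of such a supervised objective, assuming the Stockfish win probability is discretized into uniform bins and the model is trained with plain one-hot cross-entropy (the paper's exact loss may differ); `value_to_bin`, `distillation_loss`, and `batch_loss` are hypothetical names.

```python
from __future__ import annotations

import math
from typing import Sequence


def value_to_bin(win_prob: float, num_bins: int) -> int:
    # Uniform bins over [0, 1]; clamp so win_prob == 1.0 lands in the last bin.
    return min(int(win_prob * num_bins), num_bins - 1)


def distillation_loss(logits: Sequence[float], engine_win_prob: float) -> float:
    # Cross-entropy between the model's bin distribution and a one-hot label
    # built from the engine's (search-based) win probability.
    target = value_to_bin(engine_win_prob, len(logits))
    m = max(logits)
    log_partition = m + math.log(sum(math.exp(x - m) for x in logits))
    # Equals -log softmax(logits)[target].
    return log_partition - logits[target]


def batch_loss(batch: Sequence[tuple[Sequence[float], float]]) -> float:
    # Average the per-example loss over (logits, engine label) pairs.
    return sum(distillation_loss(lg, y) for lg, y in batch) / len(batch)


# An untrained model with uniform logits pays log(128) nats per example.
print(round(distillation_loss([0.0] * 128, 0.7), 3))  # 4.852
```

Driving this loss to zero on every position would amount to perfect distillation; the gap that remains in practice is what the researchers report as still out of reach.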

The study suggests that large-scale Transformers have considerable potential for solving complex planning problems and offers new ideas for future AI algorithms. The release of ChessBench also gives researchers a new benchmark for studying AI planning capabilities.

Beyond showing what Transformers can do in chess, DeepMind's results offer a useful reference for applying AI to more complex domains. The work marks another significant step for AI in complex strategy games and merits continued attention.