GPT-4V, the tool known for "looking at pictures and talking", has been criticized for its poor understanding of graphical interfaces. It behaves like a "screen-blind" user that often clicks the wrong buttons, which is maddening. The OmniParser model released by Microsoft aims to solve this problem. OmniParser works like a "screen translator": it converts screenshots into a structured language that GPT-4V can easily understand, sharpening GPT-4V's "eyesight". The editor of Downcodes will take you through this model in depth, showing how it helps GPT-4V overcome its "screen blindness" and what technology lies behind it.

Do you still remember GPT-4V, the tool known for "looking at pictures and talking"? It can understand the content of pictures and perform tasks based on them, which is a blessing for lazy people! But it has a fatal weakness: its eyesight is not very good!

Imagine asking GPT-4V to click a button for you, only to watch it click all over the place as if it were "screen blind". Isn't that maddening?

Today I will introduce a tool that helps GPT-4V see better: OmniParser! It is a new model released by Microsoft that aims to solve the problem of automated interaction with graphical user interfaces (GUIs).

What does OmniParser do?

To put it simply, OmniParser is a "screen translator" that parses screenshots into a "structured language" that GPT-4V can understand. It combines the outputs of three components: a fine-tuned interactable icon detection model, a fine-tuned icon description model, and an OCR module.

This combination produces a structured, DOM-like representation of the UI, along with a screenshot overlaid with bounding boxes around potentially interactable elements. The researchers first built an interactable icon detection dataset from popular web pages, together with an icon description dataset. These datasets are used to fine-tune two specialized models: a detection model that finds the interactable regions on the screen, and a description model that extracts the functional semantics of each detected element.
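To make the idea of a "DOM-like representation" more concrete, here is a minimal sketch of how the three outputs might be merged into one list of elements. The `UIElement` class, the `merge_outputs` function, the box layout, and the sample data are all assumptions made for illustration; they are not OmniParser's actual code or output format.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# (x_min, y_min, x_max, y_max) in pixels; this layout is an assumption.
Box = Tuple[int, int, int, int]


@dataclass
class UIElement:
    element_id: int   # unique ID, assigned in merge order
    kind: str         # "icon" or "text"
    box: Box
    description: str  # icon function or recognized text


def merge_outputs(
    icon_boxes: Sequence[Box],
    icon_descriptions: Sequence[str],
    ocr_results: Sequence[Tuple[Box, str]],
) -> List[UIElement]:
    """Combine hypothetical detector, describer and OCR outputs into one list."""
    if len(icon_boxes) != len(icon_descriptions):
        raise ValueError("each detected icon needs exactly one description")
    elements: List[UIElement] = []
    for box, description in zip(icon_boxes, icon_descriptions):
        elements.append(UIElement(len(elements), "icon", box, description))
    for box, text in ocr_results:
        elements.append(UIElement(len(elements), "text", box, text))
    return elements


# Made-up example data standing in for real model outputs.
elements = merge_outputs(
    icon_boxes=[(10, 10, 40, 40), (50, 10, 80, 40)],
    icon_descriptions=["Settings", "Minimize"],
    ocr_results=[((100, 12, 180, 36), "File")],
)
for element in elements:
    print(element)
```

Each entry carries an ID, a box and a description, which is the kind of information a language model needs in order to refer to a specific spot on the screen.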

Specifically, OmniParser will:

Identify all interactive icons and buttons on the screen, mark them with boxes, and give each box a unique ID.

Describe the function of each icon in text, such as "Settings" or "Minimize".

Recognize the text on the screen and extract it.

In this way, GPT-4V knows exactly what is on the screen and what each element does. To click a button, it only needs to give that button's ID.
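The steps above can be sketched as a small round trip: list the elements by ID for the model, then turn the ID it picks back into a click position. The function names, the prompt wording, and the choice to click the center of the box are assumptions for illustration only, not the behavior of OmniParser or GPT-4V.

```python
from typing import Dict, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), assumed layout
Elements = Dict[int, Tuple[str, Box]]  # ID -> (description, box)


def format_elements(elements: Elements) -> str:
    """Render one 'ID n: description' line per element, ordered by ID."""
    lines = [f"ID {eid}: {desc}" for eid, (desc, _box) in sorted(elements.items())]
    return "\n".join(lines)


def click_point(elements: Elements, chosen_id: int) -> Tuple[int, int]:
    """Map an ID chosen by the model to the center of that element's box."""
    if chosen_id not in elements:
        raise KeyError(f"unknown element ID: {chosen_id}")
    _desc, (x0, y0, x1, y1) = elements[chosen_id]
    return ((x0 + x1) // 2, (y0 + y1) // 2)


# Made-up screen contents.
screen: Elements = {
    0: ("Settings", (10, 10, 40, 40)),
    1: ("Minimize", (100, 0, 120, 20)),
}
print(format_elements(screen))
print(click_point(screen, 1))
```

Because the model only has to answer with an ID, it never needs to guess raw pixel coordinates itself.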

How awesome is OmniParser?

The researchers evaluated OmniParser on several benchmarks and found that it really does make GPT-4V perform better!

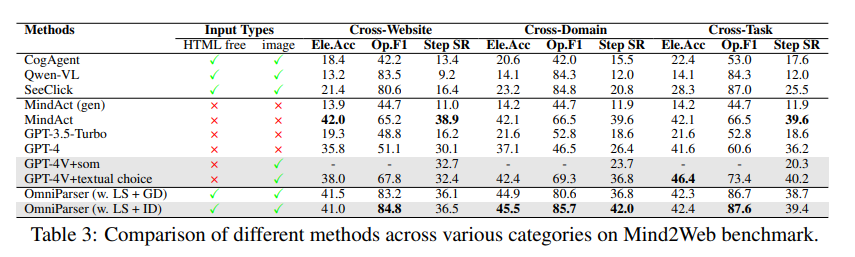

In the ScreenSpot test, OmniParser greatly improved the accuracy of GPT-4V, even surpassing some models trained specifically for graphical interfaces. On the ScreenSpot dataset, OmniParser is reported to improve accuracy by 73%, outperforming models that rely on parsing the underlying HTML. Notably, incorporating the local semantics of UI elements significantly improved prediction accuracy: with OmniParser's output, the share of icons that GPT-4V labeled correctly rose from 70.5% to 93.8%.
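To put the icon labeling figures in perspective, the short calculation below separates the absolute gain (in percentage points) from the relative gain. The helper name `accuracy_gain` is hypothetical; only the two input percentages come from the text above.

```python
from typing import Tuple


def accuracy_gain(before: float, after: float) -> Tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    if before <= 0:
        raise ValueError("baseline accuracy must be positive")
    absolute = after - before
    relative = absolute / before * 100
    return round(absolute, 1), round(relative, 1)


# 70.5% -> 93.8% correctly labeled icons, as quoted above.
print(accuracy_gain(70.5, 93.8))  # (23.3, 33.0)
```

In other words, the jump from 70.5% to 93.8% is a gain of 23.3 percentage points, or roughly a one-third improvement over the baseline.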

In the Mind2Web test, OmniParser improved GPT-4V's performance on web browsing tasks, and its accuracy even exceeded that of GPT-4V assisted by HTML information.

In the AITW test, OmniParser significantly improved the performance of GPT-4V in mobile phone navigation tasks.

What are the shortcomings of OmniParser?

Although OmniParser is very powerful, it also has some minor flaws, such as:

It is easily confused by repeated icons or text, and more detailed descriptions are needed to tell them apart.

Sometimes the bounding box is not drawn accurately enough, causing GPT-4V to click in the wrong position.

Its interpretation of an icon is occasionally wrong, and context is needed for a more accurate description.
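The repeated-icon problem can be illustrated with a small sketch: when two elements share the same description, adding a position hint makes each label unique. This is a hypothetical post-processing step written for this article, not something OmniParser is described as doing; the function name `disambiguate` and the label format are assumptions.

```python
from collections import Counter
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), assumed layout


def disambiguate(elements: List[Tuple[str, Box]]) -> List[str]:
    """Append a position hint to any description that appears more than once."""
    counts = Counter(desc for desc, _box in elements)
    labels: List[str] = []
    for desc, (x0, y0, x1, y1) in elements:
        if counts[desc] > 1:
            cx, cy = (x0 + x1) // 2, (y0 + y1) // 2
            labels.append(f"{desc} (center at x={cx}, y={cy})")
        else:
            labels.append(desc)
    return labels


# Made-up screen with two identical "Delete" icons in different rows.
labels = disambiguate([
    ("Delete", (0, 0, 20, 20)),
    ("Delete", (0, 100, 20, 120)),
    ("Save", (50, 0, 70, 20)),
])
for label in labels:
    print(label)
```

A position hint is only one possible way to enrich a description; richer context, such as the text of the row an icon belongs to, would likely serve the same purpose better.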

However, researchers are working hard to improve OmniParser, and I believe it will grow more and more capable and eventually become GPT-4V's best partner!

Try the model: https://huggingface.co/microsoft/OmniParser

Paper: https://arxiv.org/pdf/2408.00203

Official introduction: https://www.microsoft.com/en-us/research/articles/omniparser-for-pure-vision-based-gui-agent/

Highlights:

✨OmniParser can help GPT-4V better understand the screen content and perform tasks more accurately.

OmniParser performed well in various tests, proving its effectiveness.

OmniParser still has room for improvement, but its future looks promising.

All in all, OmniParser brings major improvements to how GPT-4V interacts with graphical user interfaces. Although some shortcomings remain, its potential is huge and its future development is worth watching. The editor of Downcodes believes that, as the technology continues to advance, OmniParser will become a shining star in the field of artificial intelligence!