The editor of Downcodes has learned that artificial intelligence company Anthropic has released the Message Batches API, which cuts the cost of processing large volumes of data by 50%. Beyond saving enterprises money, the feature makes large-scale data processing more efficient and convenient. More importantly, it changes the industry's pricing philosophy: by discounting large workloads, it creates economies of scale for AI computing and is expected to promote the adoption of AI applications in medium-sized enterprises.

Anthropic recently launched the new product, the Message Batches API, officially. It lets companies process large volumes of data at half the usual cost, which is welcome news for anyone running big data workloads.

Through this API, enterprises can submit up to 10,000 queries per batch for asynchronous processing, with results returned within 24 hours, making high-end AI models more accessible.
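To make the 10,000-query figure concrete, here is a minimal sketch of how a larger workload might be split into batches before submission. It assumes the limit applies per batch and that each entry pairs a `custom_id` with the usual message parameters; the model ID, the `query-N` ID scheme, and the helper names are illustrative assumptions, and the sketch only builds payloads without calling any API.

```python
from __future__ import annotations

from typing import Iterator

MAX_REQUESTS_PER_BATCH = 10_000  # limit cited in the article, assumed to be per batch
MODEL = "claude-3-5-sonnet-20240620"  # assumed model ID, for illustration only


def build_request(index: int, prompt: str) -> dict:
    """Wrap one prompt as a batch entry (assumed request shape)."""
    return {
        # Hypothetical ID scheme, used to match results back to inputs later.
        "custom_id": f"query-{index}",
        "params": {
            "model": MODEL,
            "max_tokens": 256,
            "messages": [{"role": "user", "content": prompt}],
        },
    }


def chunk_into_batches(
    prompts: list[str], limit: int = MAX_REQUESTS_PER_BATCH
) -> Iterator[list[dict]]:
    """Yield lists of at most `limit` request entries, preserving order."""
    for start in range(0, len(prompts), limit):
        yield [
            build_request(start + offset, prompt)
            for offset, prompt in enumerate(prompts[start:start + limit])
        ]


if __name__ == "__main__":
    prompts = [f"Summarize document {i}" for i in range(25_000)]
    batches = list(chunk_into_batches(prompts))
    print([len(batch) for batch in batches])  # [10000, 10000, 5000]
```

Each resulting list could then be handed to whatever client performs the actual submission; keeping the chunking separate makes the size limit easy to adjust if it changes.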

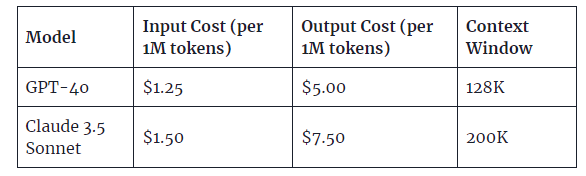

As AI technology develops, enterprises face growing challenges, especially in data processing. With the batch processing API Anthropic has now launched, both input and output tokens cost 50% less than they do with real-time processing.
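A short calculation shows what the 50% token discount means for a bill. This is a sketch under stated assumptions: the per-million-token prices and the per-query token counts are hypothetical values chosen for illustration and do not come from the article.

```python
BATCH_DISCOUNT = 0.5  # 50% off input and output tokens, as stated in the article

# Hypothetical prices in USD per million tokens; not taken from the article.
INPUT_PRICE_PER_MTOK = 3.00
OUTPUT_PRICE_PER_MTOK = 15.00


def token_cost(input_tokens: int, output_tokens: int, batch: bool = False) -> float:
    """Return the token cost in USD, applying the batch discount if requested."""
    cost = (
        input_tokens * INPUT_PRICE_PER_MTOK + output_tokens * OUTPUT_PRICE_PER_MTOK
    ) / 1_000_000
    return cost * (1 - BATCH_DISCOUNT) if batch else cost


if __name__ == "__main__":
    # 10,000 queries, each assumed to use 2,000 input and 500 output tokens.
    queries = 10_000
    standard = token_cost(queries * 2_000, queries * 500)
    batched = token_cost(queries * 2_000, queries * 500, batch=True)
    print(f"standard: ${standard:.2f}, batch: ${batched:.2f}")
    # standard: $135.00, batch: $67.50
```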

Interestingly, this is not just a simple price cut but a change in the industry's pricing philosophy. By discounting large-scale processing, Anthropic is creating economies of scale for AI computing and may also drive the adoption of AI applications in mid-sized enterprises. Large-scale data analysis, previously considered expensive and complex, becomes much simpler and more cost-effective.

It is worth mentioning that Anthropic's batch processing API is already available for its Claude 3.5 Sonnet, Claude 3 Opus and Claude 3 Haiku models. In the future, this functionality will be extended to Google Cloud's Vertex AI and Amazon Bedrock.

Batch processing is slower than real-time processing, but in many business scenarios a "timely" result matters more than an instant one. Enterprises are beginning to look for the best balance between cost and speed, and that balance will shape how AI is deployed.
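The cost-versus-speed trade-off can be reduced to a simple rule of thumb: if a job can tolerate the full batch window, send it to the cheaper batch path. The sketch below assumes the 24-hour window mentioned earlier; the function name `choose_mode` and the example workloads are hypothetical.

```python
from datetime import timedelta

BATCH_WINDOW = timedelta(hours=24)  # processing window cited in the article


def choose_mode(max_wait: timedelta) -> str:
    """Return "batch" if the caller can wait out the batch window, else "realtime"."""
    return "batch" if max_wait >= BATCH_WINDOW else "realtime"


if __name__ == "__main__":
    # Hypothetical workloads with the longest delay each one can tolerate.
    print(choose_mode(timedelta(days=2)))     # nightly report -> batch
    print(choose_mode(timedelta(seconds=2)))  # chat reply -> realtime
```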

Despite its obvious advantages, batch processing also raises some questions. As enterprises become accustomed to low-cost batch processing, will the further development of real-time AI technology suffer? To keep the AI ecosystem healthy, the industry needs to strike the right balance between advancing batch processing and advancing real-time capabilities.

Highlights:

✅ Anthropic's newly launched Message Batches API lets enterprises cut the cost of processing large amounts of data by 50%.

✅ The new API supports up to 10,000 asynchronous queries per batch, making big data processing more accessible.

✅ Enterprises are beginning to favor "timely" over "real-time" processing in AI applications, which may pose challenges to the development of real-time AI.

The launch of Anthropic's Message Batches API brings new possibilities to the AI industry and gives enterprises a more cost-effective way to process big data. We will continue to follow Anthropic's innovation and development in the field of AI, and we believe that as the technology advances, AI will better serve all walks of life.