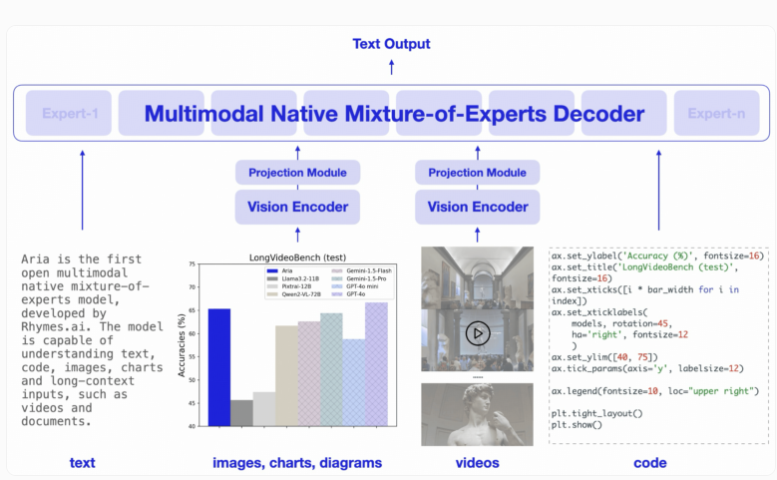

Tokyo-based startup Rhymes AI has released its first artificial intelligence model, Aria, an open-source multimodal mixture-of-experts (MoE) model. The editor of Downcodes learned that Aria performs well across text, code, image, and video inputs, and on some tasks even surpasses well-known commercial models. Aria's MoE architecture improves computational efficiency by sending each input to a subset of specialized experts. The model has 24.9 billion parameters in total and a multimodal context window of 64,000 tokens, which lets it handle long inputs. Rhymes AI also worked with AMD to optimize the model's performance and launched BeaGo, a search application built on AMD hardware.

Aria is designed to understand and process a wide range of input formats, including text, code, images, and video. Unlike a standard Transformer, an MoE model replaces the feed-forward layer with multiple specialized experts. For each input token, a routing module activates only a subset of these experts. This improves computational efficiency by reducing the number of parameters activated per token.
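To make the routing idea concrete, here is a toy sketch of top-k expert routing for a single token. It is not Aria's implementation. The number of experts, the choice of k=2, the linear router, and the "experts" themselves (simple scaling functions standing in for feed-forward networks) are all illustrative assumptions.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def moe_layer(token, experts, router_weights, k=2):
    """Route one token vector through the top-k experts (toy sketch, assumed design).

    token: list of floats; experts: list of callables mapping a vector to a vector;
    router_weights: one router vector per expert (hypothetical linear router).
    """
    # Router: one score (logit) per expert, here a dot product with the token.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    # Keep only the k highest-scoring experts; the others are never evaluated.
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Gate weights are renormalized over the selected experts only.
    gates = softmax([logits[i] for i in top])
    out = [0.0] * len(token)
    for g, i in zip(gates, top):
        y = experts[i](token)
        out = [o + g * v for o, v in zip(out, y)]
    return out, top


# Four stand-in "experts" that just scale their input (placeholders for FFNs).
experts = [lambda x, s=s: [s * v for v in x] for s in (1.0, 2.0, 3.0, 4.0)]
router = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [-1.0, -1.0]]
out, chosen = moe_layer([0.2, 0.8], experts, router, k=2)
print(chosen, out)
```

Only two of the four experts run for this token, which is the source of the per-token savings described above: compute scales with the experts selected, not with all experts in the layer.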

Aria's decoder activates 3.5 billion parameters per text token, out of 24.9 billion parameters in the full model. For visual input, Aria includes a lightweight 438-million-parameter visual encoder that converts visual input of varying lengths, sizes, and aspect ratios into visual tokens. In addition, Aria's multimodal context window reaches 64,000 tokens, so it can process long inputs.
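A quick calculation using only the figures reported above shows how sparse the decoder is per text token. This counts parameters only; it says nothing about memory use, since all 24.9 billion parameters still have to be loaded.

```python
TOTAL_PARAMS = 24.9e9           # full model, as reported
ACTIVE_PER_TEXT_TOKEN = 3.5e9   # decoder parameters activated per text token, as reported


def activated_fraction(active, total):
    """Share of the model's parameters used for one token."""
    return active / total


frac = activated_fraction(ACTIVE_PER_TEXT_TOKEN, TOTAL_PARAMS)
print(f"{frac:.1%} of parameters active per text token")
```

In other words, roughly one seventh of the model participates in processing each text token.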

Rhymes AI trained Aria in four stages: pre-training on text data, then pre-training with multimodal data, followed by long-sequence training, and finally fine-tuning.

In total, Aria was pre-trained on 6.4 trillion text tokens and 400 billion multimodal tokens. The data came from well-known datasets such as Common Crawl and LAION, with some synthetic augmentation.
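The reported token counts imply the following pre-training mix. This is simple arithmetic on the two figures above and assumes they are the complete pre-training totals.

```python
TEXT_TOKENS = 6.4e12        # text pre-training tokens, as reported
MULTIMODAL_TOKENS = 400e9   # multimodal pre-training tokens, as reported

total_tokens = TEXT_TOKENS + MULTIMODAL_TOKENS
multimodal_share = MULTIMODAL_TOKENS / total_tokens
text_to_mm_ratio = TEXT_TOKENS / MULTIMODAL_TOKENS

print(f"total: {total_tokens / 1e12:.1f}T tokens")
print(f"multimodal share: {multimodal_share:.1%}")
print(f"text : multimodal = {text_to_mm_ratio:.0f} : 1")
```

So text makes up the large majority of pre-training data, with about 16 text tokens for every multimodal token.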

According to benchmark results, Aria outperforms models such as Pixtral-12B and Llama-3.2-11B on a range of multimodal, language, and coding tasks, and its smaller number of activated parameters also lowers inference cost.
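Why fewer activated parameters means cheaper inference can be sketched with a common rule of thumb: a forward pass costs roughly 2 FLOPs (one multiply, one add) per active parameter per token. This back-of-envelope estimate is an assumption, not a measurement. It ignores attention cost, the vision encoder, and memory bandwidth, and the sizes for the dense comparison models are the nominal figures in their names.

```python
def forward_flops_per_token(active_params):
    """Rough forward-pass cost: ~2 FLOPs per active parameter (rule of thumb)."""
    return 2 * active_params


# Aria's figure is the reported activated count; the others are nominal dense sizes.
models = {
    "Aria (activated per text token)": 3.5e9,
    "Pixtral-12B (nominal, dense)": 12e9,
    "Llama-3.2-11B (nominal, dense)": 11e9,
}

for name, n in models.items():
    print(f"{name}: ~{forward_flops_per_token(n) / 1e9:.0f} GFLOPs/token")
```

Under these assumptions Aria needs about a third of the per-token compute of the dense models. Note that an MoE model still has to hold all of its parameters in memory, so the saving is in compute rather than in model size.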

Aria also performs well on videos with subtitles and on multi-page documents. Its understanding of long videos and long documents exceeds that of other open-source models, and even of proprietary models such as GPT-4o mini and Gemini 1.5 Flash.

To make Aria easy to use, Rhymes AI has released its source code on GitHub under the Apache 2.0 license, permitting both academic and commercial use. The company also provides a training framework that can fine-tune Aria on multiple data sources and formats using a single GPU. Notably, Rhymes AI has partnered with AMD to optimize the model's performance and demonstrated BeaGo, a search application that runs on AMD hardware and gives users more comprehensive AI-powered text and image search results.

Highlights:

- Aria is the world's first open-source multimodal mixture-of-experts AI model.
- Aria outperforms many comparable models on a variety of inputs such as text, images, and videos.
- Rhymes AI is working with AMD to optimize model performance and has launched BeaGo, a multi-function search application.

All in all, Aria's open-source release and strong performance mark a notable advance for the field of artificial intelligence and give developers and researchers a powerful tool. Its multimodal capabilities and computational efficiency give it considerable potential in future applications. The editor of Downcodes looks forward to seeing Aria applied and developed in more fields.