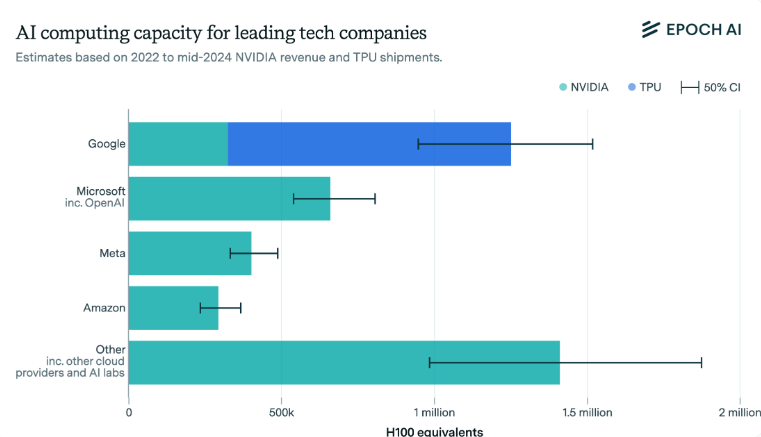

According to an analysis by the AI research firm Epoch AI, Google may have more AI computing power than any other company in the world. This lead comes largely from Google's custom Tensor Processing Units (TPUs), whose combined compute Epoch AI estimates to be equivalent to at least 600,000 Nvidia H100 GPUs. Researchers at Epoch AI note: "Given Google's massive fleet of TPUs, coupled with their NVIDIA GPUs, Google likely has the most AI computing power of any single company."

The bar chart shows the estimated AI computing power of leading tech companies, with Google ahead thanks to its in-house TPUs. How this compute is distributed could significantly shape how future AI models are developed and deployed. | Image: Epoch AI

As AI technology advances, competition in the AI chip market is intensifying. Google's TPUs and Nvidia's GPUs supply much of the compute needed to train and run complex AI models. As more companies enter the field, AI chips are likely to keep getting faster and cheaper, which would further accelerate the development and adoption of AI.

Google's lead in AI computing power suggests it could hold a dominant position in the future development and application of AI. That advantage would show up not only in Google's own research and products but also across the wider AI industry ecosystem, so Google's strategic choices may shape much of where AI goes next. How this plays out remains to be seen.