Downcodes editor reports: Alimama’s creative team has launched a new image generation model, FLUX.1-Turbo-Alpha, an 8-step distilled LoRA model trained on top of FLUX.1-dev. The model is trained with a multi-head discriminator, which significantly improves the quality of the generated images. It supports FLUX applications such as text-to-image generation and inpainting ControlNet, and it is compatible with the Diffusers and ComfyUI frameworks, so users can get started quickly. The model was adversarially trained on more than 1 million high-quality images, each with an aesthetic score above 6.3 and a resolution above 800. A version that needs even fewer steps is planned for the future.

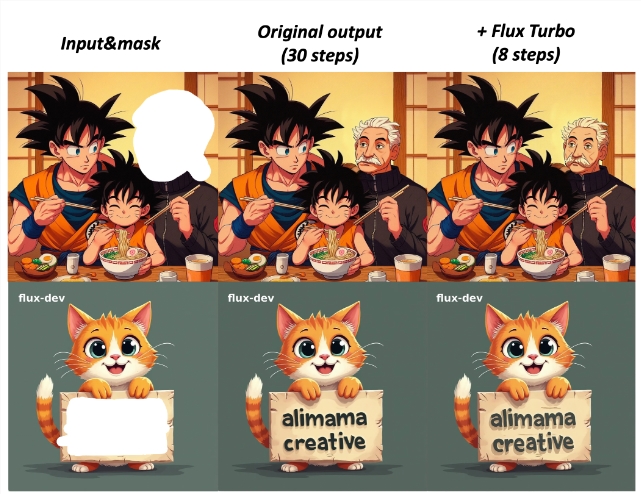

Recently, Alimama’s creative team released FLUX.1-Turbo-Alpha, an 8-step distilled LoRA model trained on top of the FLUX.1-dev model.

The model uses a multi-head discriminator, which significantly improves distillation quality, and it supports a range of FLUX-related applications such as text-to-image generation and inpainting ControlNet. The team recommends a guidance scale of 3.5 and a LoRA scale of 1. A version with fewer steps is planned for the future.

FLUX.1-Turbo-Alpha can be used directly with the Diffusers framework: users can load the model and generate images with just a few lines of code. For example, you could create a playful scene of a smiling sloth wearing a leather jacket, cowboy hat, plaid skirt and bow tie, standing in front of a shiny Volkswagen van painted with a cityscape. By adjusting a few parameters, you can generate high-quality images at a resolution of 1024x1024.
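As a rough illustration of the Diffusers usage described above, the sketch below loads the LoRA on top of FLUX.1-dev and runs 8 inference steps with the recommended guidance scale of 3.5 and LoRA scale of 1. Several things here are assumptions rather than details from the announcement: the base repository ID `black-forest-labs/FLUX.1-dev`, the availability of a CUDA GPU with enough memory, and the helper names `build_generation_kwargs` and `generate`, which are made up for this example. The prompt is paraphrased from the scene described above.

```python
# Minimal sketch, not an official recipe. Requires `torch` and `diffusers`
# to actually generate an image; the settings helper itself is pure Python.

BASE_MODEL_ID = "black-forest-labs/FLUX.1-dev"       # assumed base repo ID
ADAPTER_ID = "alimama-creative/FLUX.1-Turbo-Alpha"   # from the project page

PROMPT = (
    "A smiling sloth wearing a leather jacket, a cowboy hat, a plaid skirt "
    "and a bow tie, standing in front of a shiny Volkswagen van painted "
    "with a cityscape."
)


def build_generation_kwargs(prompt: str) -> dict:
    """Collect the sampling settings mentioned in the article."""
    return {
        "prompt": prompt,
        "guidance_scale": 3.5,      # recommended guidance scale
        "num_inference_steps": 8,   # the LoRA is distilled for 8 steps
        "height": 1024,
        "width": 1024,
    }


def generate(prompt: str, device: str = "cuda"):
    """Load FLUX.1-dev, fuse the Turbo LoRA, and return a PIL image."""
    # Heavy imports are kept local so the settings helper can be used alone.
    import torch
    from diffusers import FluxPipeline

    pipe = FluxPipeline.from_pretrained(BASE_MODEL_ID, torch_dtype=torch.bfloat16)
    pipe.to(device)
    pipe.load_lora_weights(ADAPTER_ID)
    pipe.fuse_lora(lora_scale=1.0)  # recommended LoRA scale of 1
    return pipe(**build_generation_kwargs(prompt)).images[0]


# Example usage (downloads the model weights and needs a GPU):
# image = generate(PROMPT)
# image.save("sloth.png")
```

Fusing the LoRA into the base weights is one option; leaving the adapter unfused should also work if you want to switch it on and off between runs.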

The model is also compatible with ComfyUI, where it can be used for fast text-to-image workflows or to make generation with an inpainting ControlNet more efficient. In the inpainting case, the generated images stay close to the original output, which improves the user's creative experience.

The training setup of FLUX.1-Turbo-Alpha is also worth noting. The model was trained on more than 1 million images from open-source and internal sources, all with an aesthetic score above 6.3 and a resolution above 800. The team used adversarial training to improve image quality and attached discriminator heads to every transformer layer. During training, the guidance scale was fixed at 3.5 and the time shift was set to 3; training used bf16 mixed precision, a learning rate of 2e-5, a batch size of 64, and an image size of 1024x1024.
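To keep the reported training settings in one place, they can be written down as a small configuration object. This is only a sketch: the class name `TurboAlphaTrainingConfig`, its field names, and the helper `optimizer_steps_per_epoch` are hypothetical and do not come from the team's training code. Treating 64 as the global batch size is also an assumption.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class TurboAlphaTrainingConfig:
    """Hypothetical container for the hyperparameters reported in the article."""

    guidance_scale: float = 3.5          # fixed during training
    time_shift: float = 3.0              # reported time shift setting
    mixed_precision: str = "bf16"
    learning_rate: float = 2e-5
    batch_size: int = 64                 # assumed to be the global batch size
    image_size: Tuple[int, int] = (1024, 1024)
    min_aesthetic_score: float = 6.3     # data filter: aesthetic score above this
    min_resolution: int = 800            # data filter: resolution above this


def optimizer_steps_per_epoch(num_images: int, batch_size: int) -> int:
    """Number of batches needed to see every image once (ceiling division)."""
    return -(-num_images // batch_size)


config = TurboAlphaTrainingConfig()

# With the article's lower bound of 1 million images and a batch size of 64,
# one pass over the data takes at least this many optimizer steps.
steps = optimizer_steps_per_epoch(1_000_000, config.batch_size)
print(steps)
```

Because the dataset is described only as "more than 1 million" images, the step count is a lower bound rather than an exact figure.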

The launch of FLUX.1-Turbo-Alpha marks another breakthrough for Alimama in the field of image generation, promoting the popularization and application of artificial intelligence technology.

Project page: https://huggingface.co/alimama-creative/FLUX.1-Turbo-Alpha

Highlights:

The model is based on FLUX.1-dev and uses 8-step distillation with a multi-head discriminator to improve image generation quality.

It supports text-to-image generation and inpainting ControlNet, so users can easily create a wide variety of scenes.

Training is adversarial and uses more than 1 million images to ensure high-quality output.

All in all, FLUX.1-Turbo-Alpha brings new possibilities to image generation with its efficiency, high-quality output and ease of use. Interested users can try the model on its Hugging Face page. The editor of Downcodes will continue to follow Alibaba’s latest progress in artificial intelligence and bring you more reports.