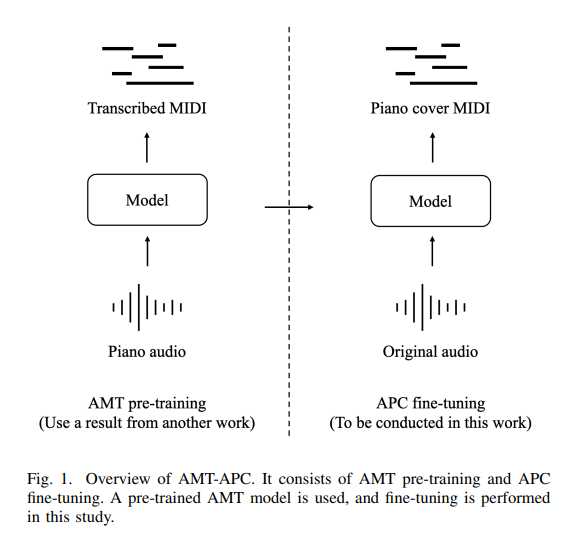

The Downcodes editor has learned that researchers from the School of Data Science at Musashino University have developed a new algorithm called AMT-APC, which generates piano music from songs more accurately. The method builds on an Automatic Music Transcription (AMT) model and uses fine-tuning to improve both the fidelity and the expressiveness of the generated piano music. This addresses two bottlenecks of earlier automatic piano generation systems: insufficient sound-quality fidelity and limited expressiveness. The algorithm's key idea is a two-step strategy. First, a pre-trained AMT model captures the various sound details in a piece of music. Then the model is fine-tuned on a paired dataset of original song audio and piano-performance MIDI files. The result is a piano version that stays closer to the style of the original song. The researchers also introduce the concept of a "style vector" to further increase the expressiveness of the output.

For a long time, automatic piano music generation has struggled with limited sound-quality fidelity and expressiveness. Existing models often produce only simple melodies and rhythms and fail to capture the rich detail and emotion of the original songs.

The AMT-APC algorithm takes a different approach. It first uses a pre-trained AMT model to accurately "capture" the various sounds in a piece of music, then adapts that model to the automatic piano cover (APC) task through fine-tuning.

The core of the AMT-APC algorithm is a two-step strategy:

Step 1: Pre-training. The researchers chose a high-performance AMT model, hFT-Transformer, as the base and trained it further on the MAESTRO dataset so that it can process longer music clips.

Step 2: Fine-tuning. The researchers built a paired dataset of original song audio and corresponding piano-performance MIDI files. They then used this dataset to fine-tune the AMT model so that it generates piano versions more consistent with the style of the original music.
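To make the fine-tuning step more concrete: AMT models are typically trained to predict frame-level targets from audio, such as which keys start sounding and which keys are held at each time step. Each MIDI file in a paired dataset therefore has to be converted into targets of this kind. The following is a minimal Python sketch of that conversion. It assumes a hypothetical frame rate of 100 frames per second, the 88-key piano range, and notes given as `(start, end, pitch, velocity)` tuples. The function name `notes_to_targets` is illustrative and is not taken from the AMT-APC code.

```python
from typing import List, Sequence, Tuple

# (start_sec, end_sec, midi_pitch, velocity): a hypothetical note format for this sketch
Note = Tuple[float, float, int, int]


def notes_to_targets(
    notes: Sequence[Note],
    n_frames: int,
    fps: float = 100.0,      # assumed frame rate, not necessarily the one hFT-Transformer uses
    n_pitches: int = 88,     # standard piano range
    lowest_pitch: int = 21,  # MIDI number of A0, the lowest piano key
) -> Tuple[List[List[int]], List[List[int]], List[List[int]]]:
    """Convert MIDI-style notes into frame-level onset, frame, and velocity matrices."""
    onset = [[0] * n_pitches for _ in range(n_frames)]
    frame = [[0] * n_pitches for _ in range(n_frames)]
    velocity = [[0] * n_pitches for _ in range(n_frames)]
    for start, end, pitch, vel in notes:
        key = pitch - lowest_pitch
        if not 0 <= key < n_pitches:
            continue  # ignore notes outside the piano range
        s = int(round(start * fps))
        e = int(round(end * fps))
        if s >= n_frames:
            continue  # note starts after the clip ends
        onset[s][key] = 1
        velocity[s][key] = vel
        # mark every frame in which the key is held (at least one frame)
        for t in range(s, min(max(e, s + 1), n_frames)):
            frame[t][key] = 1
    return onset, frame, velocity
```

During fine-tuning, matrices like these would be built from the piano-cover MIDI and used as targets. The model input would be the original song's audio rather than a piano recording, which is what turns a transcription model into a cover generator.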

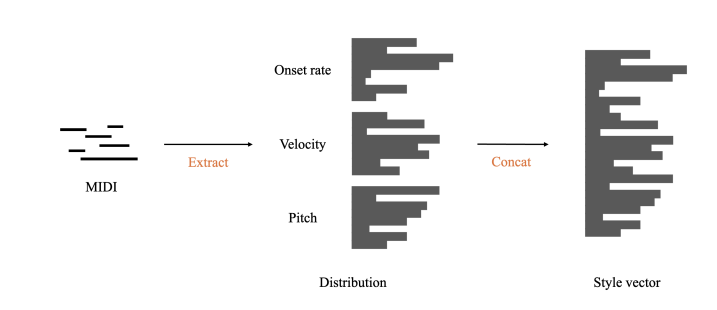

To make the generated piano music more expressive, the researchers also introduced a concept called the "style vector". A style vector is a set of features extracted from each piano performance, covering the distributions of note onset rate, velocity, and pitch. The style vector is fed into the model together with the original music audio. This lets the AMT-APC algorithm learn different playing styles and reflect them in the piano music it generates.
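As an illustration of what a style vector might contain, the sketch below turns a list of notes into three normalized histograms (onset rate, velocity, and pitch) and concatenates them. The bin counts, the value ranges, and the 1-second window used for onset rate are assumptions made for this example. The paper's exact feature definitions may differ, and names such as `style_vector` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Note:
    onset: float   # onset time in seconds
    pitch: int     # MIDI note number, 0-127
    velocity: int  # MIDI velocity, 1-127


def histogram(values: Sequence[float], lo: float, hi: float, n_bins: int) -> List[float]:
    """Normalized histogram over [lo, hi) with equal-width bins; out-of-range values are clamped to edge bins."""
    counts = [0] * n_bins
    width = (hi - lo) / n_bins
    for v in values:
        idx = min(max(int((v - lo) / width), 0), n_bins - 1)
        counts[idx] += 1
    total = sum(counts)
    return [c / total for c in counts] if total else [0.0] * n_bins


def onset_rates(notes: Sequence[Note], window: float = 1.0) -> List[float]:
    """Onsets per second in each consecutive window (window length is an assumption)."""
    if not notes:
        return []
    n_windows = int(max(n.onset for n in notes) // window) + 1
    counts = [0] * n_windows
    for n in notes:
        counts[int(n.onset // window)] += 1
    return [c / window for c in counts]


def style_vector(notes: Sequence[Note], n_bins: int = 8) -> List[float]:
    """Concatenate onset-rate, velocity, and pitch distributions into one feature vector."""
    rate = histogram(onset_rates(notes), 0, 16, n_bins)              # assumes at most ~16 onsets/s
    vel = histogram([n.velocity for n in notes], 0, 128, n_bins)
    pitch = histogram([n.pitch for n in notes], 0, 128, n_bins)
    return rate + vel + pitch
```

In a model like AMT-APC, a vector of this kind would be supplied alongside the audio features as a conditioning input. How it is injected into the network is a separate design choice and is not shown here.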

Experimental results show that, compared with existing automatic piano models, the AMT-APC algorithm produces piano music with clearly better fidelity and expressiveness. The researchers evaluated the similarity between the original song and the generated audio with a metric called Qmax. The AMT-APC model achieved the lowest Qmax value, which indicates that it best reproduces the characteristics of the original song.

The study shows that AMT and APC are closely related tasks, and that existing AMT research can be leveraged to build more advanced APC models. Next, the researchers plan to explore AMT models that are better suited to APC, with the goal of more realistic and expressive automatic piano playing.

Project address: https://misya11p.github.io/amt-apc/

Paper address: https://arxiv.org/pdf/2409.14086

The success of the AMT-APC algorithm opens new possibilities for automatic music generation and suggests that more realistic and expressive music generation technology is on the way. We look forward to researchers building on this work and bringing us more surprises!