The editor of Downcodes reports: an open source AI image generation model called Meissonic has been released. It generates high-quality images with only one billion parameters, which makes it something of a lightweight giant in the field of AI image generation. This is due to the unique Transformer architecture and novel training methods adopted by the R&D team (researchers from Alibaba, Skywork AI and multiple universities). Meissonic not only runs on ordinary gaming PCs, but is also expected to enable on-device text-to-image applications on mobile phones in the future, which would greatly lower the entry threshold for AI image generation.

Recently, a joint research team released an open source AI image generation model called Meissonic. Surprisingly, this model can generate high-quality images with only one billion parameters. This compact design gives Meissonic the potential to power on-device text-to-image applications on mobile devices.

The R&D team behind this technology includes researchers from Alibaba, Skywork AI and multiple universities. They used a unique Transformer architecture and novel training methods so that Meissonic can run on regular gaming PCs and possibly even on mobile phones in the future.

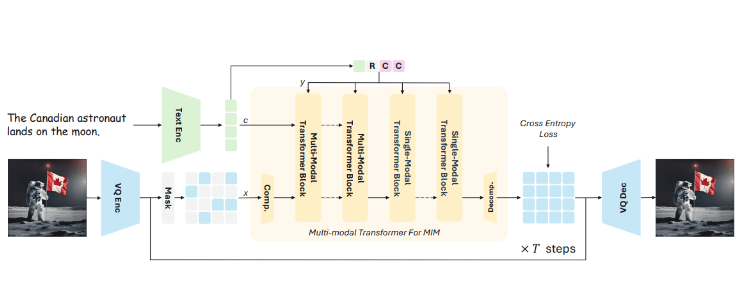

Meissonic’s training method uses a technique called “masked image modeling”: part of the image is hidden during training, and the model learns to reconstruct the missing parts from the visible regions and the text description. This approach helps the model understand the relationship between image elements and text.
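To make the idea concrete, here is a minimal sketch of the masking step in Python. It is an illustration only, not the team's training code: the image is assumed to be already converted into a flat list of discrete token ids, and `MASK_ID`, `mask_tokens` and `reconstruction_targets` are hypothetical names.

```python
import random

MASK_ID = -1  # hypothetical id reserved for a hidden token (assumption)


def mask_tokens(tokens, mask_ratio, rng):
    """Hide a random subset of image tokens.

    Returns the masked input and the sorted list of hidden positions.
    """
    num_to_mask = round(len(tokens) * mask_ratio)
    hidden = set(rng.sample(range(len(tokens)), num_to_mask))
    masked = [MASK_ID if i in hidden else t for i, t in enumerate(tokens)]
    return masked, sorted(hidden)


def reconstruction_targets(tokens, hidden_positions):
    """The model is scored only on the positions it could not see."""
    return {i: tokens[i] for i in hidden_positions}


# A toy 4x4 "image" flattened to 16 discrete token ids.
image_tokens = list(range(100, 116))
masked_input, hidden = mask_tokens(image_tokens, mask_ratio=0.5, rng=random.Random(0))
targets = reconstruction_targets(image_tokens, hidden)
```

During real training, a network would receive `masked_input` together with the text prompt and be penalized for wrong guesses at the positions in `targets`.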

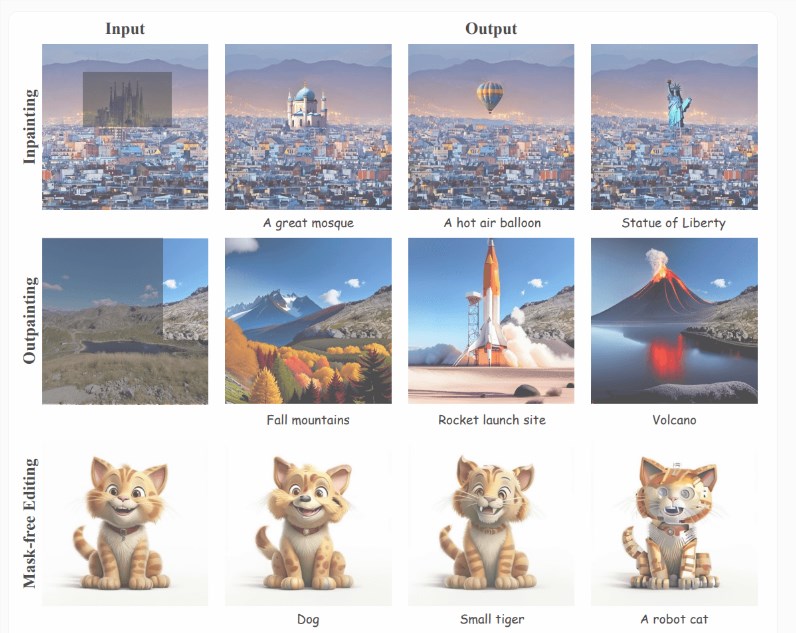

Meissonic's architecture allows it to generate high-resolution images of 1024x1024 pixels, ranging from realistic scenes to stylized text, emoticons, and even cartoon stickers.

Unlike traditional autoregressive models, which generate an image piece by piece, Meissonic predicts all image information at the same time and refines it over a small number of parallel iterations. This cuts the number of decoding steps by approximately 99% and greatly improves image generation speed.
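The following Python sketch shows one common form of parallel iterative decoding and where a figure of roughly 99% can come from. Everything specific here is an assumption for illustration: the cosine unmasking schedule, the toy random predictor standing in for the real network, the 64x64 token grid (a 1024x1024 image with a 16x downsampling tokenizer) and the 48 decoding steps are not taken from the article.

```python
import math
import random

MASK_ID = -1  # hypothetical id for a not-yet-decided token (assumption)


def fraction_still_masked(step, total_steps):
    """Cosine schedule: share of tokens left masked after `step` steps."""
    return math.cos(math.pi / 2 * step / total_steps)


def toy_predict(tokens, rng, vocab_size=16):
    """Stand-in for the real network: one (token, confidence) guess per position."""
    return [(rng.randrange(vocab_size), rng.random()) for _ in tokens]


def parallel_decode(num_tokens, total_steps, seed=0):
    """Start fully masked; each step commits the most confident predictions."""
    rng = random.Random(seed)
    tokens = [MASK_ID] * num_tokens
    for step in range(1, total_steps + 1):
        preds = toy_predict(tokens, rng)  # every position is predicted at once
        masked = [i for i, t in enumerate(tokens) if t == MASK_ID]
        keep_masked = int(num_tokens * fraction_still_masked(step, total_steps))
        num_commit = len(masked) - keep_masked
        masked.sort(key=lambda i: preds[i][1], reverse=True)
        for i in masked[:num_commit]:
            tokens[i] = preds[i][0]
    return tokens


def step_reduction(autoregressive_steps, parallel_steps):
    """Relative saving in decoding steps."""
    return 1 - parallel_steps / autoregressive_steps


decoded = parallel_decode(num_tokens=64, total_steps=8)
saving = step_reduction(autoregressive_steps=64 * 64, parallel_steps=48)
```

Under these assumed numbers, a token-by-token decoder would need 4096 steps while the parallel decoder needs 48, a saving of about 98.8%.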

In the process of building the model, the researchers went through four steps:

1. First, they used 200 million 256x256 pixel images to teach the model basic concepts.
2. Then, they used 10 million strictly screened image-text pairs to improve its text understanding capabilities.
3. Next, by adding a special compression layer, they enabled the model to output 1024x1024 pixel images.
4. Finally, they performed fine-tuning that incorporated data on human preferences to improve the model’s performance.
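The four stages can be written down as a small configuration table. This is only a sketch for readability: the stage names and the `Stage` class are hypothetical, and fields are left as `None` wherever the article gives no figure.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Stage:
    name: str                    # hypothetical label, not from the article
    goal: str
    num_samples: Optional[int]   # None where the article gives no figure
    resolution: Optional[int]    # square side in pixels; None where not stated


STAGES = [
    Stage("base_concepts", "teach basic concepts", 200_000_000, 256),
    Stage("text_alignment", "improve text understanding", 10_000_000, None),
    Stage("high_resolution", "add a compression layer for large output", None, 1024),
    Stage("preference_finetune", "fine-tune with human preference data", None, None),
]


def describe(stage):
    """Render one stage as a single human-readable line."""
    samples = f"{stage.num_samples:,} samples" if stage.num_samples else "size not stated"
    res = f"{stage.resolution}x{stage.resolution}" if stage.resolution else "resolution not stated"
    return f"{stage.name}: {stage.goal} ({samples}, {res})"


summary = [describe(s) for s in STAGES]
```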

Interestingly, despite having fewer parameters, Meissonic outperformed some larger models such as SDXL and DeepFloyd-XL on multiple benchmarks, achieving a high “Human Preference Score” of 28.83. Additionally, Meissonic is capable of image inpainting and outpainting without additional training, allowing users to easily fill in missing image parts or creatively extend existing images.
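A model trained to fill in masked tokens lends itself to this kind of editing, because the region to change can simply be presented as masked. The Python sketch below shows only how such inputs could be prepared on a token grid. It is an assumption about the general approach, not Meissonic's actual code, and `MASK_ID`, `mask_region` and `pad_with_mask` are hypothetical names.

```python
MASK_ID = -1  # hypothetical id for a token the model should fill in (assumption)


def mask_region(grid, top, left, height, width):
    """Inpainting input: copy of `grid` with one rectangle replaced by MASK_ID."""
    out = [row[:] for row in grid]
    for r in range(top, min(top + height, len(out))):
        for c in range(left, min(left + width, len(out[r]))):
            out[r][c] = MASK_ID
    return out


def pad_with_mask(grid, pad):
    """Outpainting input: `grid` surrounded by `pad` rows and columns of MASK_ID."""
    new_width = len(grid[0]) + 2 * pad
    border = [[MASK_ID] * new_width for _ in range(pad)]
    middle = [[MASK_ID] * pad + row[:] + [MASK_ID] * pad for row in grid]
    bottom = [[MASK_ID] * new_width for _ in range(pad)]
    return border + middle + bottom


# A toy 4x4 grid of token ids standing in for an encoded image.
grid = [[r * 4 + c for c in range(4)] for r in range(4)]
inpaint_input = mask_region(grid, top=1, left=1, height=2, width=2)
outpaint_input = pad_with_mask(grid, pad=1)
```

In both cases the known tokens stay fixed and only the `MASK_ID` positions would be handed to the model for prediction.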

The research team believes that this method may enable the rapid and low-cost development of customized AI image generators, and is also expected to advance text-to-image applications on mobile devices. Interested readers can find the demo on Hugging Face and view the model's code on GitHub; the model runs comfortably on a consumer GPU with 8GB of video memory.

Demo: https://huggingface.co/spaces/MeissonFlow/meissonic

Project: https://github.com/viiika/Meissonic

Highlights:

- Meissonic is an open source AI model that can generate high-quality images with only one billion parameters, suitable for use on ordinary gaming PCs and, in the future, mobile devices.

- By predicting the whole image in parallel and refining it iteratively, Meissonic needs about 99% fewer decoding steps than traditional autoregressive models.

- Despite its small parameter count, Meissonic outperforms larger models in multiple tests and supports training-free image inpainting and outpainting.

All in all, the emergence of Meissonic has brought new possibilities to the field of AI image generation, and its lightweight design and efficient performance make it one to watch. The editor of Downcodes recommends that everyone go to Hugging Face and GitHub to try out and explore this powerful AI model.