Downcodes has learned of a significant advance in video generation: Genmo has open-sourced its latest video generation model, Mochi1. With 10 billion parameters, it is the largest video generation model publicly released to date and sets a new benchmark for the field. Mochi1 is built on the novel Asymmetric Diffusion Transformer (AsymmDiT) architecture.

More importantly, Mochi1 was trained entirely from scratch, and its architecture is simple and easy to modify, which makes it convenient for developers in the open source community to build on.

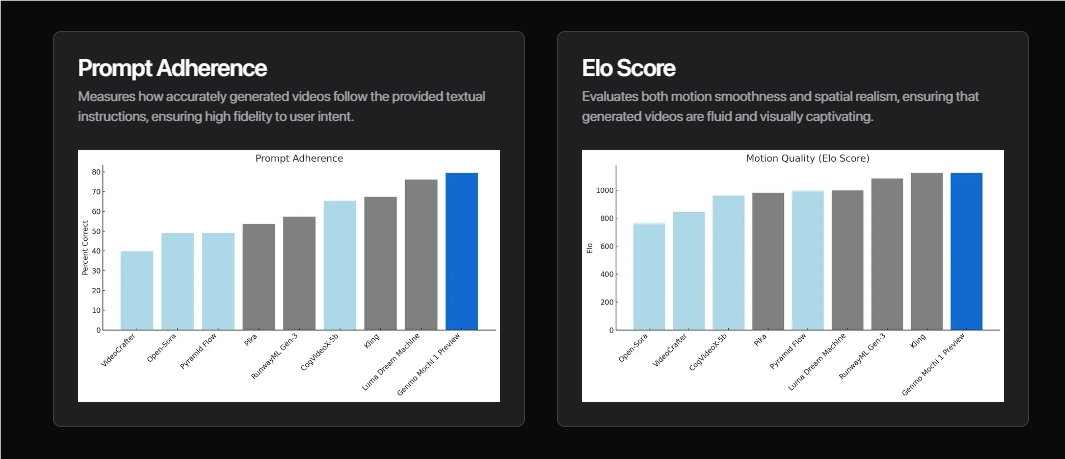

Mochi1's biggest strengths are its high-quality motion and its close adherence to text prompts. It can generate smooth videos up to 5.4 seconds long at up to 30 frames per second, with strong temporal coherence and realistic motion dynamics.

Mochi1 can also simulate a range of physical phenomena, such as fluid dynamics and hair simulation. The characters it generates move naturally and smoothly, approaching the quality of real-life performances.

To make the model easier to use, Genmo has also open-sourced its video VAE, which compresses videos to 1/128 of their original size, substantially reducing the model's computational and memory requirements.
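As a rough illustration of what a 1/128 compression ratio means in practice, the sketch below estimates how many values a raw clip contains and how many remain after compression. It takes the 5.4-second, 30 frames-per-second figures from this article, but the 848×480 RGB frame size and the idea of applying the 128× ratio uniformly to the total value count are assumptions for illustration only; this is not a description of how Genmo's VAE lays out its latent space.

```python
# Back-of-the-envelope estimate of the video VAE's 1/128 compression.
# Assumptions (illustrative, not taken from Genmo's code): 848x480 RGB frames,
# and the 128x ratio applied uniformly to the total number of stored values.

def frame_count(duration_s: float, fps: int) -> int:
    """Number of frames in a clip of the given length and frame rate."""
    return round(duration_s * fps)

def raw_values(frames: int, height: int, width: int, channels: int = 3) -> int:
    """Total number of pixel values in an uncompressed clip."""
    return frames * height * width * channels

def compressed_values(raw: int, ratio: int = 128) -> float:
    """Size of the compressed representation under a uniform compression ratio."""
    return raw / ratio

frames = frame_count(5.4, 30)                  # longest clip at the highest frame rate
raw = raw_values(frames, height=480, width=848)
latent = compressed_values(raw)

print(f"frames: {frames}")                     # frames: 162
print(f"raw values: {raw:,}")                  # raw values: 197,821,440
print(f"compressed values: {latent:,.0f}")     # compressed values: 1,545,480
```

Under these assumptions, the model works with roughly 1.5 million values per clip instead of about 198 million, which is where the savings in computation and memory come from.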

The AsymmDiT architecture processes user prompts and compressed video tokens together through multi-modal self-attention, while learning separate MLP layers for each modality, which further improves the model's efficiency and performance.
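To make this concrete, here is a minimal NumPy sketch of one joint-attention block in the style described above: text and video tokens are projected with their own weights, attend to each other in a single self-attention pass over the concatenated sequence, and then go through separate per-modality MLPs. All function names, token counts, and widths (64 for text, 256 for video) are hypothetical; the wider video stream only hints at the "asymmetric" idea, and the sketch omits multi-head splitting, normalization, positional encoding, and diffusion timestep conditioning. It is not Genmo's implementation.

```python
import numpy as np

rng = np.random.default_rng(0)

def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def init_stream(d_model, d_attn, d_ff, scale=0.02):
    """Hypothetical per-modality weights: own QKV/output projections and own MLP."""
    return {
        "wq": rng.normal(0, scale, (d_model, d_attn)),
        "wk": rng.normal(0, scale, (d_model, d_attn)),
        "wv": rng.normal(0, scale, (d_model, d_attn)),
        "wo": rng.normal(0, scale, (d_attn, d_model)),
        "w1": rng.normal(0, scale, (d_model, d_ff)),
        "w2": rng.normal(0, scale, (d_ff, d_model)),
    }

def mlp(x, p):
    # Two-layer MLP with a GELU nonlinearity (tanh approximation).
    h = x @ p["w1"]
    h = 0.5 * h * (1 + np.tanh(np.sqrt(2 / np.pi) * (h + 0.044715 * h**3)))
    return h @ p["w2"]

def joint_block(text, video, pt, pv):
    """Joint self-attention over both token sets, then separate per-modality MLPs."""
    n_text = text.shape[0]
    # Each modality projects into a shared attention width using its own weights.
    q = np.concatenate([text @ pt["wq"], video @ pv["wq"]])
    k = np.concatenate([text @ pt["wk"], video @ pv["wk"]])
    v = np.concatenate([text @ pt["wv"], video @ pv["wv"]])
    # Every token, text or video, attends to every other token.
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]))
    mixed = attn @ v
    # Split back by modality and project to each stream's own width (residual add).
    text = text + mixed[:n_text] @ pt["wo"]
    video = video + mixed[n_text:] @ pv["wo"]
    # Separate MLPs for each modality (residual add).
    text = text + mlp(text, pt)
    video = video + mlp(video, pv)
    return text, video, attn

# Usage: 8 prompt tokens of width 64 and 32 video tokens of width 256 (hypothetical).
text_tokens = rng.normal(size=(8, 64))
video_tokens = rng.normal(size=(32, 256))
pt = init_stream(d_model=64, d_attn=128, d_ff=256)
pv = init_stream(d_model=256, d_attn=128, d_ff=1024)
text_out, video_out, attn = joint_block(text_tokens, video_tokens, pt, pv)
print(text_out.shape, video_out.shape, attn.shape)
```

In this sketch, the shared attention pass lets every video token condition directly on the prompt, while the separate projections and MLPs let each modality keep its own width, so the video stream can be given more capacity than the text stream.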

The release of Mochi1 is an important milestone for open source video generation. Genmo said it will release the full version of Mochi1 before the end of the year, including Mochi1HD, which supports 720p video generation and will further improve video fidelity and smoothness.

To let more people try Mochi1, Genmo has also launched a free hosted playground at genmo.ai/play. The model's weights and architecture are also available on Hugging Face for developers to download and use.

Genmo's team includes core members of projects such as DDPM, DreamFusion, and Emu Video. Its advisors include Ion Stoica, executive chairman and co-founder of Databricks and Anyscale; Pieter Abbeel, co-founder of Covariant and an early member of the OpenAI team; and Joey Gonzalez, a pioneer in language model systems and co-founder of Turi.

Genmo's mission is to unlock the right brain of artificial general intelligence, and Mochi1 is its first step toward building a world simulator that can imagine anything, possible or impossible.

Genmo recently closed a US$28.4 million Series A round led by NEA, which will fund its future research and development.

Although Mochi1 achieves impressive results, it still has limitations. The initial version can only produce 480p video, and it shows minor warping and distortion in some edge cases involving extreme motion. Mochi1 is also currently optimized for photorealistic styles, and its performance on animated content still needs improvement.

Genmo says it will keep improving Mochi1 and encourages the community to fine-tune the model for different aesthetic preferences. It has also implemented robust safety moderation protocols in its playground to help ensure that all generated videos are ethical.

Model download: https://huggingface.co/genmo/mochi-1-preview

Online experience: https://www.genmo.ai/play

Official introduction: https://www.genmo.ai/blog

The open source release of Mochi1 opens up new possibilities for video generation, and its capabilities and ease of use make it well worth watching. Genmo's continued work, together with active community participation, should further advance video generation technology. We look forward to the arrival of Mochi1HD and to more innovations to come.