French AI startup Mistral AI has released two lightweight models for edge devices, Ministral 3B and Ministral 8B, together called "Les Ministraux," with 3 billion and 8 billion parameters respectively. Both models performed strongly in instruction-following benchmarks, surpassing models of the same size in knowledge, common sense, reasoning, and efficiency. In some respects they even outperformed larger models, setting a new bar for small-parameter models. The Downcodes editors explain the two models' performance and features, and the company behind them, in detail below.

French AI startup Mistral AI has launched two new lightweight models, Ministral 3B and Ministral 8B (the "Les Ministraux" family), designed specifically for edge devices, with 3 billion and 8 billion parameters respectively. Both performed well in instruction-following benchmarks: Ministral 3B surpassed Llama 3 8B and Mistral 7B, while Ministral 8B outperformed both of those models in every area except coding.

Test results show that Ministral 3B and Ministral 8B perform comparably to open-source models such as Gemma 2 and Llama 3.1. Both models support context lengths of up to 128k tokens and set new benchmarks for sub-10B-parameter models in knowledge, common sense, reasoning, function calling, and efficiency. Ministral 8B also uses a sliding-window attention mechanism for faster, more memory-efficient inference. Both models can be fine-tuned for a variety of use cases, such as orchestrating complex AI agent workflows or building specialized task assistants.
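The article does not describe how the sliding window works or what window size Ministral 8B uses. As a rough illustration only, here is a minimal sketch of a generic causal sliding-window attention mask, where `window` is a hypothetical parameter and not a published Ministral setting. Each token attends to itself and the `window - 1` tokens before it, so fewer query/key pairs are scored and a decoder only needs to cache the last `window` key/value positions per layer. That cap is where the memory saving comes from. This is not Mistral's implementation.

```python
def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """Causal sliding-window mask: mask[i][j] is True if query i may attend to key j.

    Generic illustration only; `window` is a hypothetical parameter, not a
    published Ministral 8B setting.
    """
    if seq_len < 0 or window < 1:
        raise ValueError("seq_len must be >= 0 and window >= 1")
    # Query i sees keys j with i - window < j <= i: itself plus the previous window - 1 tokens.
    return [[i - window < j <= i for j in range(seq_len)] for i in range(seq_len)]


def attended_pairs(mask: list[list[bool]]) -> int:
    """Count (query, key) pairs that are actually scored, a rough proxy for attention compute."""
    return sum(row.count(True) for row in mask)


def kv_cache_positions(seq_len: int, window: int) -> int:
    """Past key/value positions a decoder must keep per layer.

    With full causal attention this grows with seq_len; with a sliding
    window it is capped at `window`.
    """
    return min(seq_len, window)


if __name__ == "__main__":
    n, w = 6, 3
    mask = sliding_window_mask(n, w)
    # 'x' marks an allowed (query, key) pair, '.' a masked one.
    for row in mask:
        print("".join("x" if allowed else "." for allowed in row))
    full_causal = n * (n + 1) // 2
    print(f"scored pairs: sliding={attended_pairs(mask)}, full causal={full_causal}")
    # 4_096 is a hypothetical window chosen for illustration, not Ministral's actual value.
    print(f"KV positions cached at a 128k context: {kv_cache_positions(128_000, 4_096)}")
```

With 6 tokens and a window of 3, the mask scores 15 pairs instead of the 21 of full causal attention. At long context lengths, the fixed-size cache matters more than the compute saving.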

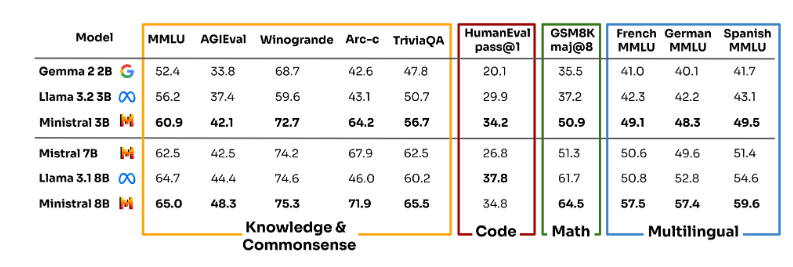

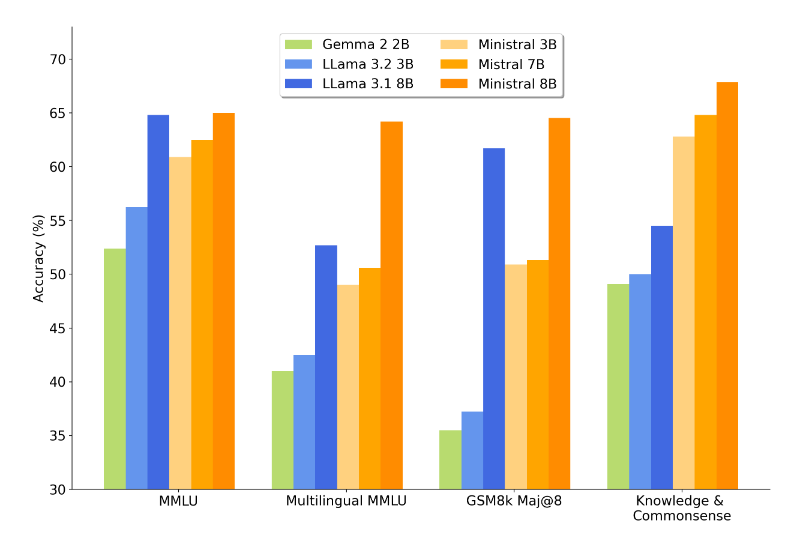

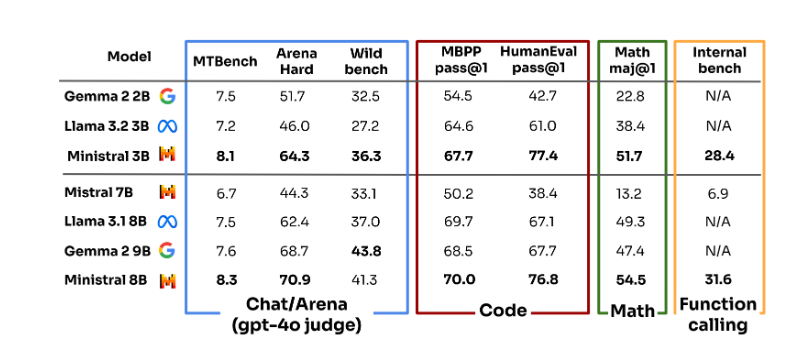

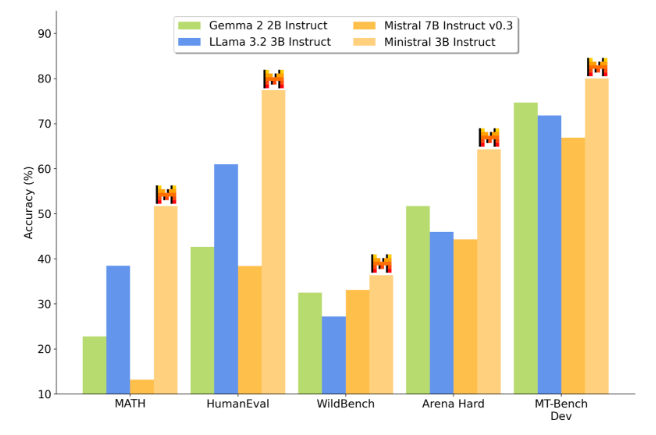

The researchers ran multiple benchmarks on the Les Ministraux models, covering knowledge and common sense, coding, mathematics, and multilingual ability. Among pretrained models, Ministral 3B achieved the best results compared with Gemma 2 2B and Llama 3.2 3B, and Ministral 8B outperformed Llama 3.1 8B and Mistral 7B in every area except coding. Among the fine-tuned instruction models, Ministral 3B achieved the best results across the different benchmarks, and Ministral 8B trailed Gemma 2 9B only slightly on WildBench.

The Les Ministraux models give users a computationally efficient, low-latency option that meets growing demand for local-first inference in critical applications. Use cases include on-device translation, smart assistants that work without an Internet connection, and autonomous robots. Ministral 8B is priced at US$0.1 per million tokens for both input and output, and Ministral 3B at US$0.04 per million tokens.
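To make these prices concrete, here is a small cost estimator built on the per-million-token rates quoted above. It assumes, as the article states, that the same rate applies to input and output tokens. The dictionary keys are illustrative labels, not official API model identifiers, and real billing may differ.

```python
from decimal import Decimal

# Rates quoted in the article, in USD per million tokens (same for input and output).
# The keys are hypothetical labels, not official API model names.
PRICE_PER_MILLION_USD = {
    "ministral-8b": Decimal("0.10"),
    "ministral-3b": Decimal("0.04"),
}


def estimate_cost_usd(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the API cost of a workload; Decimal avoids float rounding noise."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    rate = PRICE_PER_MILLION_USD[model]  # KeyError for an unknown label
    total_tokens = input_tokens + output_tokens
    return rate * total_tokens / Decimal(1_000_000)


if __name__ == "__main__":
    # Example workload: 2 million input tokens and 0.5 million output tokens.
    for model in PRICE_PER_MILLION_USD:
        cost = estimate_cost_usd(model, input_tokens=2_000_000, output_tokens=500_000)
        print(f"{model}: ${cost}")
```

For 2.5 million tokens in total, the arithmetic gives 2.5 × $0.1 = $0.25 for Ministral 8B and 2.5 × $0.04 = $0.10 for Ministral 3B.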

It is worth noting that Mistral previously released multiple open-source models via magnet links, earning recognition from the AI community. This year, however, the company has drawn controversy for being less open than it once was. Reports said Microsoft would invest in Mistral and take a stake in the company, which means Mistral's models will be hosted on Azure AI. Reddit users also noticed that Mistral had removed its commitment to open source from its official website. In addition, the company has started charging for some of its models, including the newly released Ministral 3B and Ministral 8B.

Details: https://mistral.ai/news/ministraux/

All in all, Ministral 3B and Ministral 8B are a strong choice for edge AI applications, and their efficiency and low price give them a significant competitive advantage. At the same time, Mistral's change in strategy has prompted the industry to reconsider the balance between open-source models and commercialization. We will continue to follow the adoption and development of the Les Ministraux models.