Researchers at OpenAI have released a continuous-time consistency model (sCM) that substantially speeds up the generation of multimedia content: it generates images about 50 times faster than traditional diffusion models, producing an image in less than 0.1 seconds. The research was co-authored by Lu Cheng and Yang Song, and the paper has been published on arXiv.org. Although it has not yet been peer-reviewed, its potential impact is significant and points to a major step forward for real-time generative AI applications. Below, the editor of Downcodes walks through the innovations and future application prospects of the sCM model.

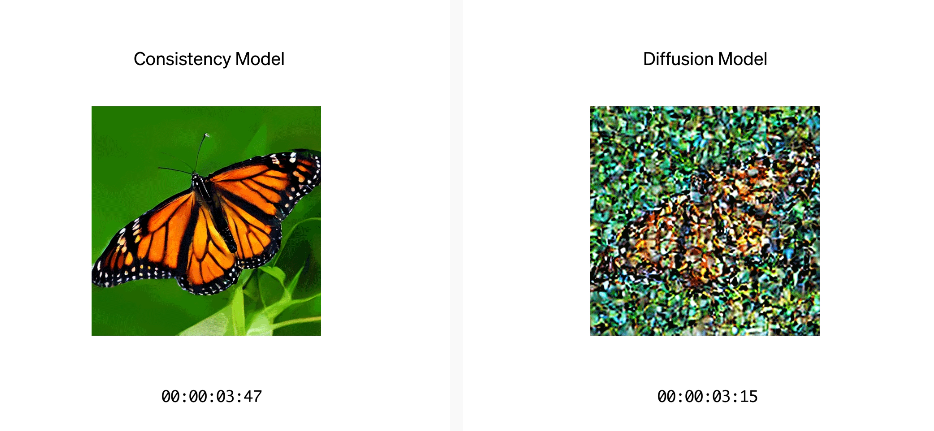

Recently, researchers at OpenAI released an exciting research result, introducing a new continuous-time consistency model (sCM). This model achieves a leap in the speed of generating multimedia content (such as images, videos, and audio), a full 50 times faster than the traditional diffusion model. Specifically, sCM can generate an image in less than 0.1 seconds, while traditional diffusion models often require more than 5 seconds.

With this technique, the research team generated high-quality samples using only two sampling steps, which makes generation far more efficient without sacrificing sample quality. The paper was co-written by two OpenAI researchers, Lu Cheng and Yang Song, and has been published on arXiv.org. Although it has not yet been peer-reviewed, its potential impact should not be underestimated.

Yang Song first proposed the concept of the "consistency model" in a 2023 paper, which laid the foundation for the development of sCM. Although diffusion models excel at generating photorealistic images, 3D models, audio, and video, their sampling is inefficient: it often requires tens to hundreds of steps, which makes them impractical for real-time applications.
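To make the contrast between "tens to hundreds of steps" and "two sampling steps" concrete, here is a minimal sketch of multistep consistency sampling in the style of the 2023 consistency-model paper. It is an illustration under stated assumptions, not the sCM implementation: `toy_consistency_fn` is a hypothetical stand-in for a trained network (it is the exact consistency function only for toy data concentrated at a single point), and the noise levels `SIGMA_MIN`, `SIGMA_MAX`, and the intermediate level 0.8 are assumed values chosen for the example.

```python
import math
import random

SIGMA_MIN = 0.002  # assumed smallest noise level (illustrative value)
SIGMA_MAX = 80.0   # assumed largest noise level (illustrative value)


def toy_consistency_fn(x, sigma, data_mean=3.0):
    """Hypothetical stand-in for a trained consistency model.

    For toy data concentrated at `data_mean`, this maps a noisy point at
    noise level `sigma` back to the start of its trajectory. A real model
    would be a neural network; this closed form exists only for the toy case.
    """
    return data_mean + (SIGMA_MIN / sigma) * (x - data_mean)


def multistep_consistency_sample(f, sigmas, rng):
    """Draw one sample using len(sigmas) evaluations of f.

    `sigmas` is a decreasing list of noise levels starting at the largest one.
    """
    # Step 1: start from pure noise and jump straight to a clean estimate.
    x = rng.gauss(0.0, sigmas[0])
    sample = f(x, sigmas[0])
    evals = 1
    # Later steps: re-noise the estimate to a smaller level, then denoise again.
    for sigma in sigmas[1:]:
        noise_std = math.sqrt(sigma ** 2 - SIGMA_MIN ** 2)
        x = sample + noise_std * rng.gauss(0.0, 1.0)
        sample = f(x, sigma)
        evals += 1
    return sample, evals


if __name__ == "__main__":
    rng = random.Random(0)
    # Two noise levels means two network evaluations in total.
    sample, evals = multistep_consistency_sample(
        toy_consistency_fn, [SIGMA_MAX, 0.8], rng
    )
    print(f"sample={sample:.4f} after {evals} function evaluations")
```

The point of the sketch is the cost model: each sampling step is one evaluation of the model, so a two-step sampler calls the network twice, whereas a diffusion sampler that needs tens to hundreds of steps calls it tens to hundreds of times.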

Sampling is faster

The biggest highlight of the sCM model is that it achieves faster sampling without increasing the computational burden. OpenAI's largest sCM model has 1.5 billion parameters and generates a sample in only 0.11 seconds on an A100 GPU. This amounts to a 50x speedup in wall-clock time compared with diffusion models, making real-time generative AI applications more feasible.
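As a quick sanity check on these figures, the arithmetic can be written out directly. The helper names below are hypothetical, and the inputs are only the rounded numbers quoted in this article (about 5 seconds for a diffusion model, 0.1 or 0.11 seconds for sCM), so the results are rough estimates rather than benchmark measurements.

```python
def speedup(baseline_seconds, fast_seconds):
    """Wall-clock speedup: how many times faster the fast method is."""
    return baseline_seconds / fast_seconds


def images_per_minute(seconds_per_image):
    """Throughput implied by a per-image latency, generating one image at a time."""
    return 60.0 / seconds_per_image


if __name__ == "__main__":
    diffusion_s = 5.0  # article: diffusion models "often require more than 5 seconds"
    scm_round_s = 0.1  # article: sCM needs "less than 0.1 seconds" (rounded figure)
    scm_a100_s = 0.11  # article: 0.11 seconds on an A100 for the 1.5B model

    print(f"speedup with rounded figure: {speedup(diffusion_s, scm_round_s):.1f}x")
    print(f"speedup with A100 figure:    {speedup(diffusion_s, scm_a100_s):.1f}x")
    print(f"sCM throughput:       {images_per_minute(scm_a100_s):.0f} images/min")
    print(f"diffusion throughput: {images_per_minute(diffusion_s):.0f} images/min")
```

With a baseline of exactly 5 seconds, the 0.11-second figure gives roughly a 45x speedup, so the quoted 50x corresponds to a diffusion baseline of about 5.5 seconds, which is consistent with "more than 5 seconds".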

Requires fewer computing resources

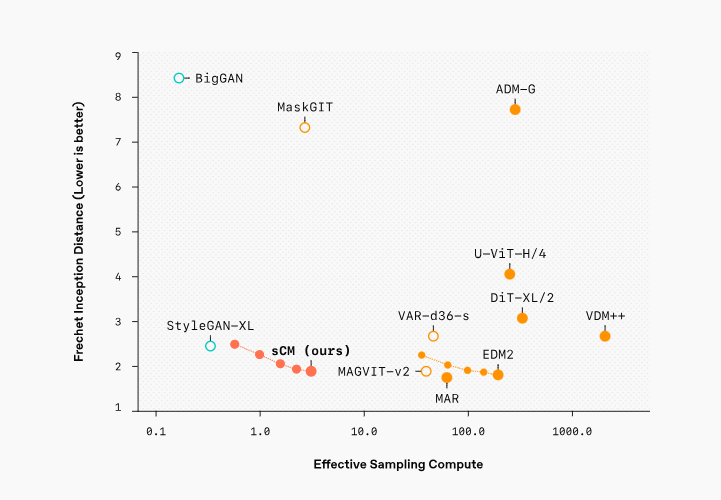

In terms of sample quality, an sCM trained on the ImageNet 512×512 dataset achieved a Fréchet Inception Distance (FID) score of 1.88, which is within 10% of the best diffusion models. Through extensive benchmarking against other state-of-the-art generative models, the research team showed that sCM delivers top-tier results while significantly reducing computational overhead.

Looking ahead, the fast sampling and scalability of sCM models will open new possibilities for real-time generative AI applications in many fields. From image generation to audio and video synthesis, sCM offers a practical answer to the need for fast, high-quality output. OpenAI's research also hints at room for further optimization of the system, which could improve model performance to suit the needs of different industries.

Official blog: https://openai.com/index/simplifying-stabilizing-and-scaling-continuous-time-consistency-models/

Paper: https://arxiv.org/html/2410.11081v1

The emergence of the sCM model marks a major advance in the field of AI image generation. Its fast sampling and high-quality output open a new chapter for real-time applications, and its future development is well worth watching.