Google recently announced that, starting next week, the Google Photos app will add AI editing labels to photos edited with AI features such as Magic Editor, Magic Eraser and Zoom Enhance. The update is intended to increase transparency, but it has sparked controversy: because the label appears only in the photo's details and not on the photo itself, users cannot easily spot AI edits in everyday use. The editor of Downcodes explains the change in detail, weighs its pros and cons, and discusses what it means for the future of AI image technology.

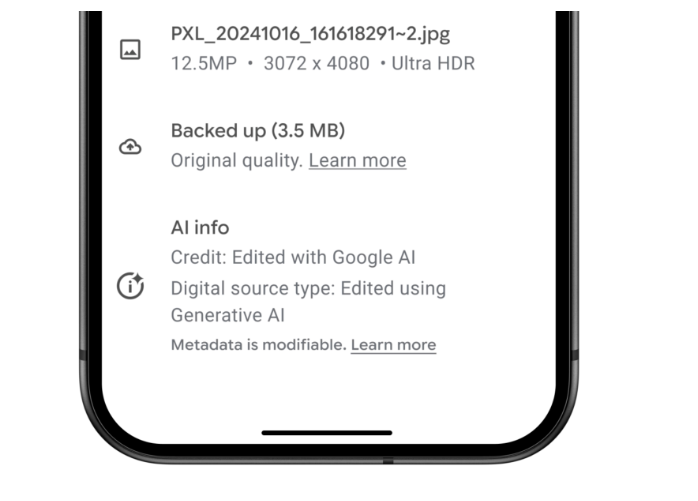

As AI imaging technology becomes increasingly widespread, Google has announced that it will introduce a new AI editing label to the Google Photos app starting next week. Every photo edited with AI features such as Magic Editor, Magic Eraser and Zoom Enhance will carry a note, shown at the bottom of the app's Details section, stating that it was edited with Google AI.

This update comes more than two months after Google released the Pixel 9 phone, which ships with multiple AI photo editing features. However, the labeling method has caused some controversy. Although Google says the move is meant to further improve transparency, its practical effect is questionable: no visual watermark is added to the photo itself, so users browsing photos on social media, in messaging apps, or in everyday use cannot tell at a glance whether a photo has been processed by AI.

For photo editing features such as Best Take and Add Me, which do not use generative AI, Google Photos will also record the editing information in the metadata, but it will not be displayed in the Details section. These features are mainly used to combine multiple photos into one complete image.

Michael Marconi, communications manager for Google Photos, told TechCrunch that the work is not done yet: the company will continue to gather feedback, strengthen and refine its safeguards, and evaluate other solutions to increase the transparency of generative AI editing. Google has not said whether it will add a visual watermark in the future, but it has not ruled out the possibility either.

It is worth noting that all photos edited with Google's AI tools already contain AI editing information in their metadata. The new feature simply surfaces this information in the Details section, where it is easier to find. Even so, the practical effect of this approach is doubtful, because most users do not go looking for metadata or photo details when browsing images online.

Of course, adding a visible watermark to the photo itself is not a perfect solution either. Such watermarks can easily be cropped or edited out, so the problem would remain. As Google's AI imaging tools gain popularity, synthetic content is likely to proliferate on the Internet, making it increasingly difficult for users to distinguish real content from fake.

The metadata-based labeling that Google currently uses relies heavily on each platform to flag AI-generated content for its users. Meta already does this on Facebook and Instagram, and Google plans to flag AI images in search results later this year. Other platforms, however, have been slower to adopt similar measures.
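To make the platform-side check concrete, here is a minimal sketch of how a service might look for such a marker in an uploaded file. The article does not say which metadata field carries the information, so this sketch assumes the marker is an IPTC "digital source type" URI embedded in the file's XMP metadata; the function names and the set of values treated as AI-related are illustrative choices, not Google's or Meta's actual implementation.

```python
import re

# Assumption: the AI-edit marker is an IPTC digital source type URI
# stored somewhere in the file's embedded XMP metadata.
IPTC_PREFIX = b"http://cv.iptc.org/newscodes/digitalsourcetype/"

# Assumption: these IPTC vocabulary terms are the ones a platform would
# treat as "generated or edited with generative AI".
AI_SOURCE_TYPES = {
    "trainedAlgorithmicMedia",
    "compositeWithTrainedAlgorithmicMedia",
}


def find_source_types(data: bytes) -> set:
    """Return every IPTC digital source type term found in the raw bytes."""
    pattern = re.compile(re.escape(IPTC_PREFIX) + rb"([A-Za-z]+)")
    return {m.group(1).decode("ascii") for m in pattern.finditer(data)}


def looks_ai_edited(data: bytes) -> bool:
    """True if any source type found is in the assumed AI-related set."""
    return bool(find_source_types(data) & AI_SOURCE_TYPES)
```

A platform could call `looks_ai_edited(open(path, "rb").read())` on each upload and show a label when it returns `True`. The sketch also shows the weakness discussed above: if the metadata is stripped when a photo is re-encoded or screenshotted, the check finds nothing.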

This controversy highlights an important issue in the development of AI technology: how to ensure content authenticity and users’ right to know while promoting technological innovation. Although Google has taken the first step to improve transparency, it is clear that more efforts and improvements are needed to prevent synthetic content from misleading users.

Google's move is intended to increase transparency, but its effect remains to be seen. Striking a balance between the development of AI technology and the authenticity of content is a difficult problem facing all technology companies. More effective ways of identifying AI-generated content will be needed to better protect users' rights and interests.