The Downcodes editor brings you up to date on the latest progress in physical neural networks (PNNs)! This emerging technology computes by exploiting the properties of physical systems and could help overcome the limitations of today's AI models. It may not only make it possible to train much larger AI models, but also enable low-energy edge computing and local inference on devices such as smartphones, which could significantly reshape how and where AI is used.

Recently, researchers from multiple institutions have taken a close look at an emerging technology: physical neural networks (PNNs). These are not the familiar digital algorithms running on computers, but a new way of performing intelligent computation directly with physical systems.

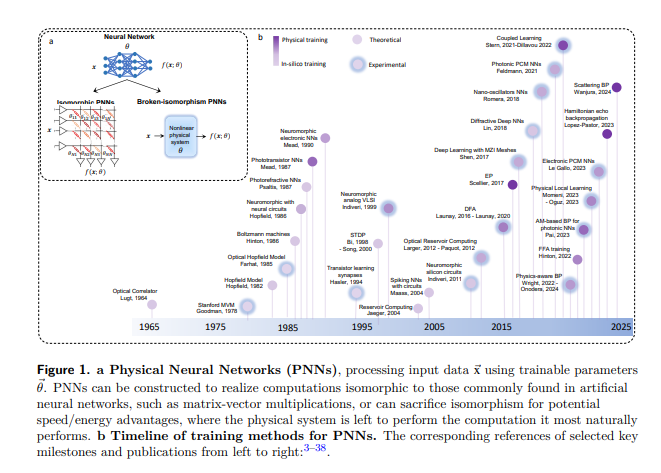

PNNs, as the name suggests, are neural networks that exploit the characteristics of physical systems to perform calculations. While currently a niche area of research, they may be one of the most undervalued opportunities in modern AI.

The potential of PNNs: large models, low energy consumption, edge computing

Imagine being able to train AI models 1,000 times larger than today's, while also running local, private inference on edge devices such as smartphones or sensors. It sounds like science fiction, but research suggests it is not impossible.

To train PNNs at scale, researchers are exploring both backpropagation-based and backpropagation-free methods. Each has pros and cons, and none yet matches the scale and performance of the backpropagation algorithm widely used in deep learning. But the situation is changing rapidly, and the growing variety of training techniques points to several possible routes toward practical PNNs.
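To make the idea of backpropagation-free training more concrete, here is a minimal sketch, assuming the physical system can only be queried as a black box: set the parameters, feed in an input, measure the output. It uses a simultaneous-perturbation (SPSA-style) gradient estimate, which is one member of the backpropagation-free family and not necessarily a method from the paper. The function `physical_forward` is a hypothetical stand-in, simulated here as a simple linear response rather than a model of any real hardware.

```python
import random

def physical_forward(params, x):
    """Hypothetical black-box 'physical system' with tunable parameters.
    Simulated as a linear response w*x + b; real hardware would be
    queried by measurement, with no access to internal gradients."""
    w, b = params
    return w * x + b

def measured_loss(params, data):
    # Mean squared error, computed only from measured outputs.
    return sum((physical_forward(params, x) - y) ** 2 for x, y in data) / len(data)

def spsa_step(params, data, rng, lr=0.05, eps=0.01):
    """One SPSA update: perturb every parameter at once by +/-eps,
    measure the loss twice, and turn the difference into a gradient
    estimate. No backpropagation through the system is needed."""
    delta = [rng.choice((-1.0, 1.0)) for _ in params]
    plus = [p + eps * d for p, d in zip(params, delta)]
    minus = [p - eps * d for p, d in zip(params, delta)]
    diff = measured_loss(plus, data) - measured_loss(minus, data)
    grad_est = [diff / (2 * eps * d) for d in delta]
    return [p - lr * g for p, g in zip(params, grad_est)]

def train(steps=2000, seed=0):
    rng = random.Random(seed)
    # Target behaviour y = 2x - 1, sampled at 21 points in [-1, 1].
    data = [(k / 10, 2 * (k / 10) - 1) for k in range(-10, 11)]
    params = [0.0, 0.0]
    for _ in range(steps):
        params = spsa_step(params, data, rng)
    return params

w, b = train()
print(f"w={w:.3f}, b={b:.3f}")
```

The parameters should settle near w = 2 and b = -1 using only two loss measurements per step. The trade-off is that such estimates get noisier as the number of parameters grows, which is one reason these methods have not yet reached the scale of backpropagation.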

PNN implementations span multiple fields, including optics, electronics, and brain-inspired computing. They can be structured like digital neural networks, performing operations such as matrix-vector multiplication, or they can give up this structural similarity in exchange for potential speed or energy advantages, letting the physical system carry out the computation it performs most naturally.
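As a rough illustration of the first approach, the sketch below compares an exact digital matrix-vector product with a hypothetical model of the same operation carried out by imperfect physical hardware. The noise model (a relative Gaussian error on each stored weight plus additive read-out noise) and the parameter names `weight_noise` and `read_noise` are assumptions chosen for illustration, not values from any specific device.

```python
import random

def digital_mvm(W, x):
    # Exact matrix-vector product, as a digital processor computes it.
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]

def analog_mvm(W, x, rng, weight_noise=0.02, read_noise=0.01):
    """Hypothetical model of a physical matrix-vector multiply.
    Each stored weight deviates by a relative Gaussian error (device
    variation), and each output picks up additive read-out noise.
    The noise levels are illustrative assumptions, not measured values."""
    y = []
    for row in W:
        acc = sum(w_ij * (1 + rng.gauss(0.0, weight_noise)) * x_j
                  for w_ij, x_j in zip(row, x))
        y.append(acc + rng.gauss(0.0, read_noise))
    return y

rng = random.Random(42)
W = [[0.5, -1.0, 0.25],
     [1.5, 0.0, -0.75]]
x = [1.0, 2.0, 4.0]

exact = digital_mvm(W, x)      # → [-0.5, -1.5]
approx = analog_mvm(W, x, rng)
print("digital:", exact)
print("analog: ", [round(v, 3) for v in approx])
```

The small gap between the two outputs is the kind of imperfection that a PNN training method has to tolerate, whether it comes from device variation, drift, or measurement noise.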

The future of PNNs: Beyond digital hardware performance

Future applications of PNNs are likely to be very broad, ranging from large generative models to classification tasks in smart sensors. They will need to be trained, but the constraints for training may vary depending on the application. An ideal training method should be model-agnostic, fast and energy-efficient, and robust to hardware variation, drift, and noise.

Although PNNs hold great potential, they also face many challenges. How can PNNs be kept stable during training and inference? How can these physical systems be integrated with existing digital hardware and software infrastructure? These questions still need to be answered.

Paper address: https://arxiv.org/pdf/2406.03372

The emergence of physical neural networks (PNNs) brings both new hope and new challenges to artificial intelligence. As the technology matures and these open problems are solved, PNNs may come to play an important role in many fields and push AI forward. The Downcodes editor will keep following the latest research on PNNs, so stay tuned!