Google recently released an update to its Gemini 1.5 series of models: a new small variant, Gemini 1.5 Flash-8B, alongside improved versions of Gemini 1.5 Flash and Gemini 1.5 Pro. The Downcodes editor explains what this update changes and how users have responded. Beyond improving the models' handling of math, coding, and complex prompts, the update builds on the Gemini 1.5 series' ability to reason over multimodal inputs of 10 million tokens or more, which shows how adaptable the models are. Logan Kilpatrick, Google's AI product lead, strongly recommended Gemini 1.5 Flash on social media, calling it the best choice for developers around the world.

Google has made another push in AI with its latest Gemini 1.5 models. The release includes a smaller variant, Gemini 1.5 Flash-8B, as well as a "significantly improved" Gemini 1.5 Flash and a "more powerful" Gemini 1.5 Pro.

According to Google, the new models improve on many internal benchmarks: Gemini 1.5 Flash shows a "huge improvement" in overall performance, while 1.5 Pro is much better at math, coding, and complex prompts.

Google AI product lead Logan Kilpatrick wrote on social media: "Gemini 1.5 Flash is now the best choice for developers around the world!"

Google first launched Gemini 1.5 Flash, a lightweight version of Gemini 1.5, in May. The Gemini 1.5 models are designed for long contexts and can reason over fine-grained information from 10 million tokens or more. This allows them to process large volumes of multimodal input, including documents, video, and audio.

In this release, Google introduced a smaller, 8-billion-parameter version of Gemini 1.5 Flash, and the new Gemini 1.5 Pro is significantly better at coding and complex prompts. Kilpatrick said Google plans to roll out a production-ready version in the coming weeks and hopes to offer more evaluation tools.

According to Kilpatrick, these experimental models are being released to gather feedback and get the latest updates into developers' hands faster. He said the new models are available to developers for free testing through Google AI Studio and the Gemini API, and will later be offered through an experimental endpoint on Vertex AI.

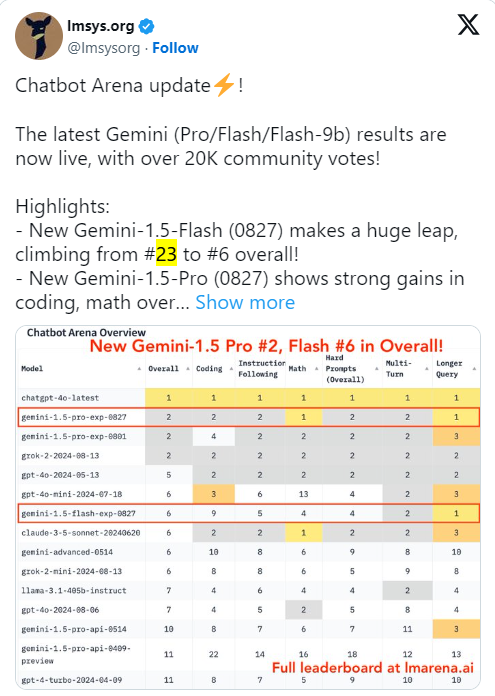

Just hours after the launch, the Large Model Systems Organization (LMSYS) updated its Chatbot Arena leaderboard based on 20,000 community votes. Gemini 1.5 Flash made a "huge leap," climbing from 23rd to 6th place, putting it on par with Llama and ahead of Google's open Gemma models.

Starting September 3, Google will automatically reroute requests to the new model and retire older versions to avoid confusion. Kilpatrick said he is excited about the new models and hopes developers will use them to build more multimodal applications.

Early feedback on the new models, however, has been polarized. Some users questioned the frequent incremental updates, saying what they are really waiting for is a more comprehensive Gemini 2.0. Others praised the speed and performance of the update, arguing that it keeps Google at the forefront of AI.

Overall, the Gemini 1.5 update reflects Google's continued progress in AI. Although user feedback is divided, the update gives developers more capable and efficient tools, and we look forward to seeing how the Gemini series develops. The Downcodes editor will keep following future Gemini updates, so stay tuned!