The editor of Downcodes brings you big news! Cerebras Systems has launched Cerebras Inference, which it bills as the world's fastest AI inference service, aiming to change the rules of the game in AI inference with its speed and highly competitive pricing. It performs well across a range of AI models, especially large language models (LLMs), and is said to be 20 times faster than traditional GPU systems at as little as one-tenth, or even one-hundredth, of the price. How will this affect the future development of AI applications? Let’s take a closer look.

Cerebras Systems, a pioneer in high-performance AI computing, has introduced a solution that it says will revolutionize AI inference. On August 27, 2024, the company announced the launch of Cerebras Inference, which it describes as the world’s fastest AI inference service. According to Cerebras, its performance figures dwarf those of traditional GPU-based systems, delivering 20 times the speed at a far lower cost and setting a new benchmark for AI computing.

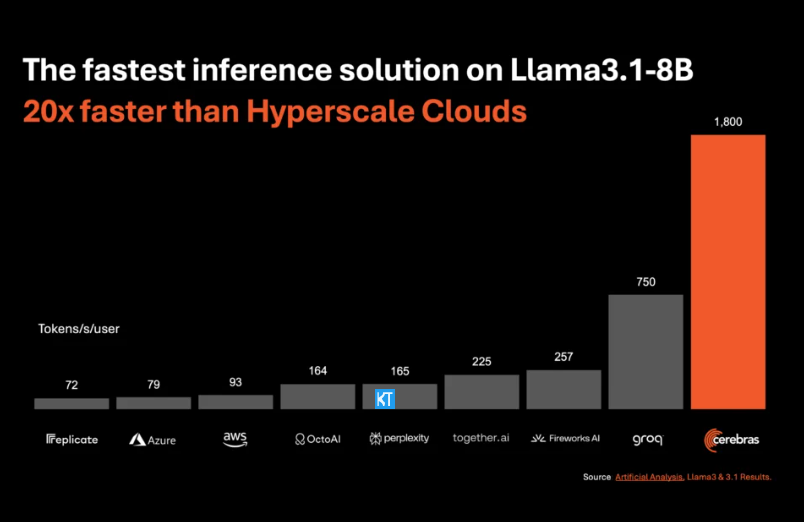

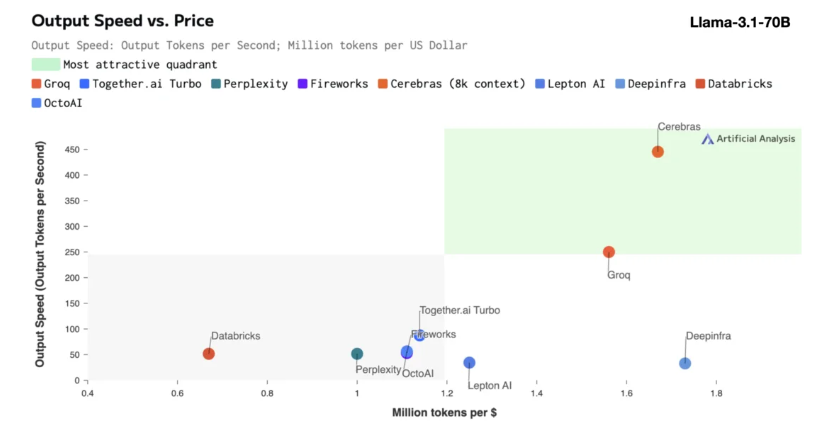

Cerebras Inference is particularly well suited to the rapidly developing field of large language models (LLMs). Taking the latest Llama 3.1 model as an example, the 8B version generates 1,800 tokens per second, while the 70B version generates 450 tokens per second. According to Cerebras, this is not only 20 times faster than NVIDIA GPU-based solutions but also more competitively priced: pricing starts at 10 cents per million tokens for the 8B version and is 60 cents per million tokens for the 70B version. Compared with existing GPU offerings, the company claims a 100-fold improvement in price/performance.
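To make these figures concrete, the arithmetic can be sketched in a few lines of Python. This is only a back-of-the-envelope estimate, not an official calculator: the dictionary keys and the `estimate` function are hypothetical names chosen for illustration, and the sketch assumes the quoted throughput is sustained for the whole response and that only output tokens are billed at the quoted rate. It ignores time to first token, queueing, and network latency.

```python
# Figures quoted in the article: output tokens per second and
# US dollars per million tokens. The keys are illustrative labels,
# not official model identifiers.
MODELS = {
    "llama3.1-8b": {"tokens_per_second": 1800, "usd_per_million_tokens": 0.10},
    "llama3.1-70b": {"tokens_per_second": 450, "usd_per_million_tokens": 0.60},
}


def estimate(model: str, output_tokens: int) -> tuple[float, float]:
    """Return (seconds, usd) to generate `output_tokens` at the quoted rates.

    Assumes constant throughput and per-token billing; real latency
    and billing may differ.
    """
    spec = MODELS[model]
    seconds = output_tokens / spec["tokens_per_second"]
    usd = output_tokens / 1_000_000 * spec["usd_per_million_tokens"]
    return seconds, usd


if __name__ == "__main__":
    for name in MODELS:
        seconds, usd = estimate(name, 10_000)
        print(f"{name}: {seconds:.1f} s, ${usd:.4f} for 10,000 output tokens")
```

Under these assumptions, a 10,000-token response would take roughly 5.6 seconds on the 8B model and about 22 seconds on the 70B model, at a cost of a fraction of a cent in either case.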

It’s impressive that Cerebras Inference achieves this speed while maintaining industry-leading accuracy. Unlike other speed-first solutions, Cerebras always performs inference at 16-bit precision, so the performance gains do not come at the expense of model output quality. Micah Hill-Smith, CEO of Artificial Analysis, said that Cerebras achieved a speed of more than 1,800 output tokens per second on Meta's Llama 3.1 8B model, setting a new record.

AI inference is the fastest growing segment of AI computing, accounting for approximately 40% of the entire AI hardware market. High-speed AI inference, such as that provided by Cerebras, is like the emergence of broadband Internet, opening up new opportunities and ushering in a new era for AI applications. Developers can use Cerebras Inference to build next-generation AI applications that require complex real-time performance, such as intelligent agents and intelligent systems.

Cerebras Inference offers three reasonably priced service tiers: free tier, developer tier, and enterprise tier. The free tier provides API access with generous usage limits, making it ideal for a broad range of users. The developer tier provides flexible serverless deployment options, while the enterprise tier provides customized services and support for organizations with continuous workloads.

In terms of core technology, Cerebras Inference runs on the Cerebras CS-3 system, powered by the Wafer Scale Engine 3 (WSE-3). According to Cerebras, this AI processor is unmatched in scale and speed and provides 7,000 times more memory bandwidth than the NVIDIA H100.

Cerebras Systems is not only a trendsetter in AI computing but also plays an important role in industries such as healthcare, energy, government, scientific computing, and financial services. By continuously advancing its technology, Cerebras is helping organizations in these fields address complex AI challenges.

Highlights:

Cerebras Inference is claimed to be 20 times faster than GPU-based solutions at a more competitive price, opening a new era of AI inference.

It supports a variety of AI models and performs especially well on large language models (LLMs).

Three service tiers are offered, giving developers and enterprise users flexible options.

All in all, the arrival of Cerebras Inference marks an important milestone in the field of AI inference. Its performance and low cost should help AI applications spread more widely and spur further innovation, and it deserves the industry's attention. The editor of Downcodes will continue to bring you more cutting-edge technology news.