Alibaba Cloud has launched the Qwen2-Math series, a new family of large language models focused on mathematics, and it has drawn wide attention across the industry. The models surpass existing open-source models on multiple mathematical benchmarks and, in some respects, even outperform well-known closed-source models such as GPT-4o and Claude-3.5-Sonnet. The editor of Downcodes takes an in-depth look at the performance, training techniques, and future direction of the Qwen2-Math series, and at what it means for AI in mathematics.

Alibaba Cloud recently launched the Qwen2-Math series of large language models. Focused on mathematics, this newcomer drew wide attention in the industry as soon as it was unveiled.

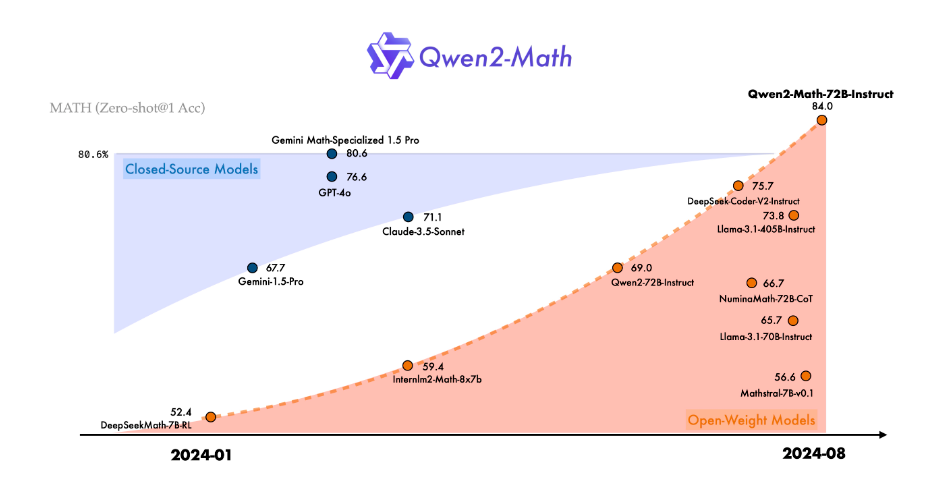

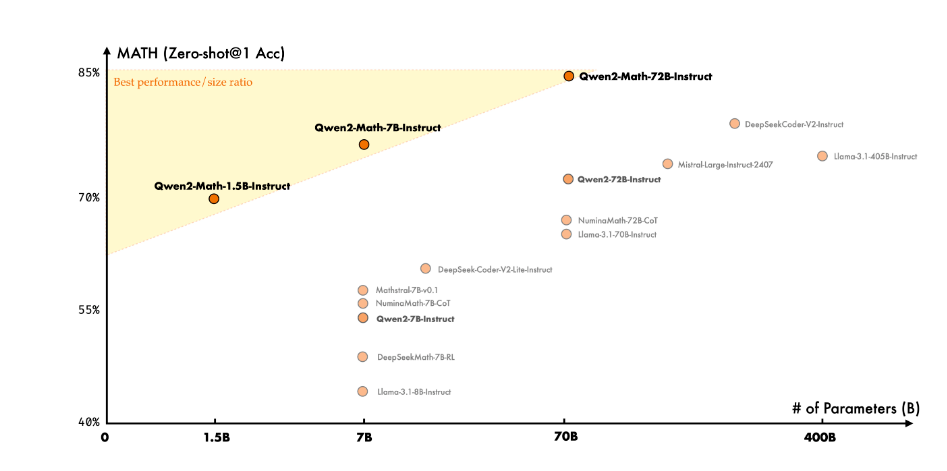

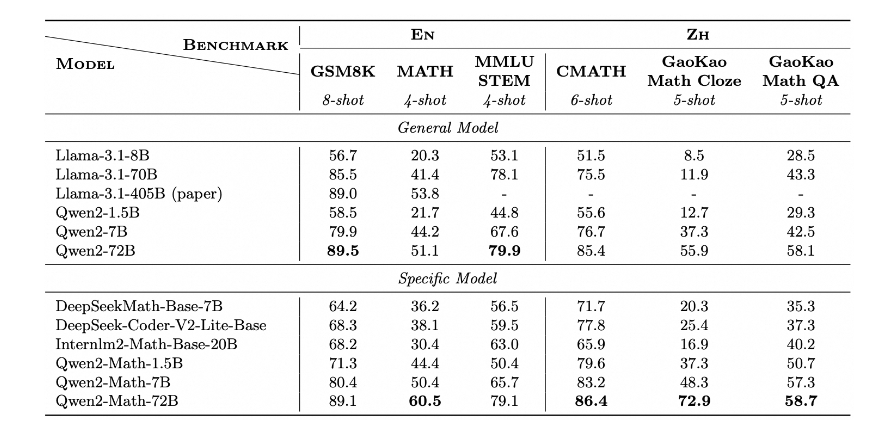

As the latest members of the Qwen2 family, the base Qwen2-Math models and the instruction-tuned Qwen2-Math-Instruct models, each available in 1.5B, 7B, and 72B sizes, show strong mathematical problem-solving ability. According to the team, the series not only surpasses existing open-source models on multiple mathematical benchmarks but also, in some respects, outperforms well-known models including the closed-source GPT-4o, Claude-3.5-Sonnet, and Gemini-1.5-Pro, as well as the open-weight Llama-3.1-405B. That makes it a genuine dark horse in AI mathematics.

The success of Qwen2-Math is no accident. Over the past year, the Alibaba Cloud team has devoted considerable effort to improving the reasoning ability of large language models on arithmetic and mathematical problems. The series is built on Qwen2-1.5B/7B/72B, which the team further pre-trained on a carefully designed mathematics-specific corpus. This corpus contains large-scale, high-quality mathematical web text, books, code, and exam questions, as well as mathematics pre-training data synthesized by Qwen2 itself.

Particularly worth mentioning is how Qwen2-Math-Instruct was trained. The team first trained a mathematics-specific reward model based on Qwen2-Math-72B, then combined its dense reward signal with a binary signal indicating whether the model's answer was correct. This combined signal served as supervision in two places: for constructing SFT (supervised fine-tuning) data through rejection sampling, and in the reinforcement learning stage after SFT, which applies Group Relative Policy Optimization (GRPO). According to the team, this training recipe substantially improves the model's mathematical problem-solving ability.
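The training signal described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not Qwen's actual implementation: the article does not say how the dense reward and the binary correctness signal are combined, so `combined_reward` and its weight `alpha` are hypothetical, and reward-model scores are simply given as numbers. `grpo_advantages` shows the group-relative advantage at the core of GRPO, where each sampled response's reward is normalized by the mean and standard deviation of the rewards in its sampling group; the rest of the GRPO policy update (clipped objective, KL penalty) is omitted.

```python
from statistics import mean, pstdev


def combined_reward(rm_score: float, is_correct: bool, alpha: float = 0.5) -> float:
    # HYPOTHETICAL combination rule: 1.0 for a correct final answer, plus the
    # dense reward-model score scaled by an assumed weight `alpha`.
    return float(is_correct) + alpha * rm_score


def rejection_sample(candidates, keep=1):
    """Rejection sampling for SFT data (illustrative).

    `candidates` is a list of (response, rm_score, is_correct) tuples for one
    problem. Incorrect responses are rejected; the remaining ones are ranked by
    the combined reward and the top `keep` are kept as SFT targets.
    """
    accepted = [c for c in candidates if c[2]]
    accepted.sort(key=lambda c: combined_reward(c[1], c[2]), reverse=True)
    return [response for response, _, _ in accepted[:keep]]


def grpo_advantages(rewards, eps=1e-8):
    """Group-relative advantages as in GRPO: each reward is normalized by the
    mean and (population) standard deviation of its sampling group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]
```

For example, given the candidates `("sol A", 0.9, True)`, `("sol B", 0.4, True)`, and `("sol C", 0.95, False)`, `rejection_sample` keeps `"sol A"`, and `grpo_advantages` over their combined rewards gives A a positive advantage and C a negative one. Normalizing within the group is what lets GRPO work without a separate learned value function.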

In evaluation, Qwen2-Math-Instruct performs strongly. On both the 2024 AIME (American Invitational Mathematics Examination) and the 2023 AMC (American Mathematics Competitions), the model does well under a range of settings, including greedy decoding, majority voting, and reward-model-based selection (RM@N).
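Two of the sampling-based evaluation strategies mentioned above can be sketched in a few lines. This is an illustrative sketch only, assuming the final answers have already been extracted from N sampled solutions: `majority_vote` returns the most frequent answer (Maj@N), and `best_of_n` returns the solution with the highest score, assuming the reward-model strategy means reranking N samples by a reward model. The `score` callable is a hypothetical stand-in for a real reward model.

```python
from collections import Counter
from typing import Callable, Sequence


def majority_vote(final_answers: Sequence[str]) -> str:
    """Maj@N: return the most frequent final answer among N samples.

    Counter.most_common orders equal counts by first occurrence, so ties
    go to the answer that appeared first.
    """
    return Counter(final_answers).most_common(1)[0][0]


def best_of_n(solutions: Sequence[str], score: Callable[[str], float]) -> str:
    """RM@N (assumed meaning): return the solution the scorer rates highest.

    `score` is a placeholder for a reward model; any callable mapping a
    solution to a float works here.
    """
    return max(solutions, key=score)
```

Majority voting needs no extra model but only works when answers can be compared exactly, whereas reward-model selection can rank full solutions at the cost of running a second model.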

What's more, Qwen2-Math can solve some problems at the level of the International Mathematical Olympiad (IMO). In a series of case studies, the researchers found that Qwen2-Math not only solves simpler competition problems with ease but can also produce convincing solutions to more complex ones.

The Alibaba Cloud team is not stopping there, however. They note that the current Qwen2-Math series supports only English; bilingual English-Chinese models are in active development, and multilingual versions are planned for the near future. The team is also continuing to improve the models' ability to solve more complex and challenging mathematical problems.

Qwen2-Math opens up new possibilities for AI in mathematics. It could significantly change the education sector by helping students better understand and master mathematical concepts, and it may also play an important role in scientific research, engineering, and other fields that require complex mathematical calculation.

Project page: https://top.aibase.com/tool/qwen2-math

Model download: https://huggingface.co/Qwen

All in all, the Qwen2-Math series marks a major step forward for AI in mathematics, and its further development deserves continued attention. The editor of Downcodes believes that as the technology advances, Qwen2-Math will open up more possibilities for mathematics education and scientific research.