The Shanghai Artificial Intelligence Laboratory released InternLM2.5, the latest version of its Scholar·Puyu (InternLM) series of models, at the WAIC Science Frontier Main Forum on July 4, 2024. This version substantially improves reasoning in complex scenarios, supports an ultra-long 1M context, and can independently search the Internet and integrate information from hundreds of web pages. The editor of Downcodes explains the features and open source information of InternLM2.5 below.

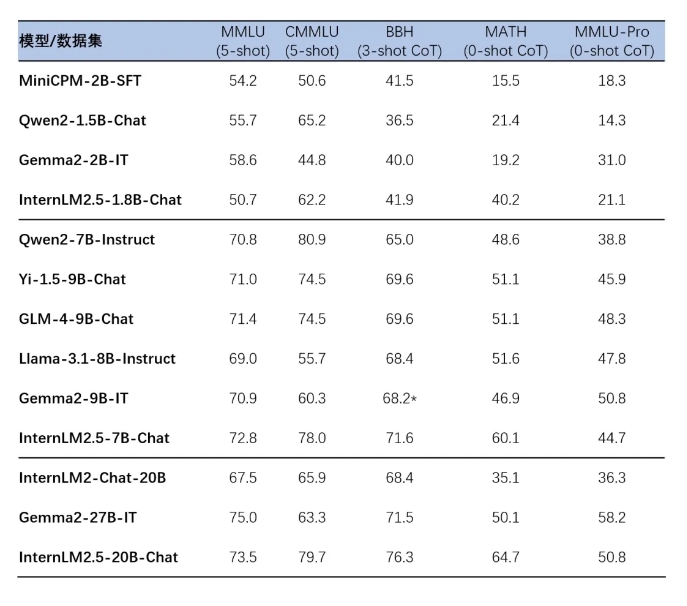

InternLM2.5 is available in three parameter sizes, 1.8B, 7B and 20B, to suit different application scenarios and developer needs. The 1.8B version is an ultra-lightweight model, while the 20B version offers stronger overall performance and supports more complex practical scenarios. All of these models are open source and can be found on the Scholar·Puyu series large model home page, the ModelScope home page and the Hugging Face home page.

InternLM2.5 iterates on several data synthesis techniques, which significantly improves the model's reasoning capabilities. In particular, its accuracy on the MATH mathematics evaluation set reaches 64.7%. In addition, efficient training in the pre-training stage improves the model's ability to handle long contexts.

The InternLM2.5 series also integrates with downstream inference and fine-tuning frameworks, including the XTuner fine-tuning framework and the LMDeploy inference framework, both developed by the Shanghai Artificial Intelligence Laboratory, as well as widely used community frameworks such as vLLM, Ollama and llama.cpp. The SWIFT tool from the ModelScope (Moda) community also supports inference, fine-tuning and deployment of InternLM2.5 series models.
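
As an illustration of what this integration can look like in practice, here is a minimal sketch of querying a locally served InternLM2.5 model. It assumes the model is already running behind an OpenAI-style chat completions endpoint, which both LMDeploy and vLLM can expose. The server address, the model id `internlm/internlm2_5-7b-chat` and the helper names `build_chat_request` and `send_chat_request` are assumptions made for this example and are not taken from the announcement.

```python
import json
import urllib.request

# Assumptions for this sketch (not from the article):
BASE_URL = "http://localhost:23333/v1"  # hypothetical local server address
MODEL = "internlm/internlm2_5-7b-chat"  # assumed model id; adjust to your deployment


def build_chat_request(prompt, model=MODEL, temperature=0.7):
    """Build the JSON body for an OpenAI-style /chat/completions call."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }


def send_chat_request(body, base_url=BASE_URL, timeout=60):
    """POST the body to the server and return the text of the first reply."""
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    body = build_chat_request("Summarize InternLM2.5 in one sentence.")
    # Show the request that would be sent; no network access is needed here.
    print(json.dumps(body, indent=2))
    # Uncomment once a compatible server is actually running:
    # print(send_chat_request(body))
```

Only the standard library is used so that the sketch does not depend on any particular client package. The exact launch command, port and served model name differ between frameworks, so check the documentation of the framework you deploy with.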

In use, these models offer multi-step complex reasoning, precise understanding of intent across multi-turn conversations, flexible control of output format, and the ability to follow complex instructions. Detailed installation and usage guides are provided to help developers get started quickly.

Scholar·Puyu series large model home page:

https://internlm.intern-ai.org.cn

ModelScope Home Page:

https://www.modelscope.cn/organization/Shanghai_AI_Laboratory?tab=model

Hugging Face Home Page:

https://huggingface.co/internlm

InternLM2.5 open source link:

https://github.com/InternLM/InternLM

The open source release of InternLM2.5 opens new possibilities for research and applications in artificial intelligence. Its strong performance and ease of use should attract many developers to explore and innovate. The editor of Downcodes looks forward to seeing more excellent applications built on InternLM2.5!