Israeli artificial intelligence startup aiOla has released Whisper-Medusa, a new open-source speech recognition model that builds on OpenAI's Whisper and runs 50% faster. It is available on Hugging Face under the MIT license, which permits both research and commercial use. Downcodes' editor explains this eye-catching new model in detail below.

Israeli artificial intelligence startup aiOla recently made a big move, announcing the launch of a new open-source speech recognition model, Whisper-Medusa.

This is no ordinary model: it runs a full 50% faster than OpenAI's famous Whisper! It is built on Whisper but uses a novel "multi-head attention" architecture that predicts far more tokens at a time than OpenAI's model. Moreover, the code and weights have been released on Hugging Face under the MIT license, which allows research and commercial use.
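To make "predicting many tokens at a time" concrete, here is a minimal toy sketch, not aiOla's code. It assumes each prediction head is a plain linear layer over one shared decoder hidden state: `heads[0]` stands for the usual next-token head, and each further head guesses a token one position further ahead. The sizes, the random weights, and the helper names (`linear`, `predict_block`, `random_head`) are hypothetical; the 10 heads simply mirror the article's "10 tokens at a time" figure.

```python
import random
from typing import List

Matrix = List[List[float]]  # vocab_size rows x hidden_size columns


def linear(weights: Matrix, hidden: List[float]) -> List[float]:
    """Logits = W @ h for a single prediction head."""
    return [sum(w * h for w, h in zip(row, hidden)) for row in weights]


def argmax(values: List[float]) -> int:
    """Index of the largest value (first one on ties)."""
    return max(range(len(values)), key=values.__getitem__)


def predict_block(hidden: List[float], heads: List[Matrix]) -> List[int]:
    """One decoder step. heads[0] is the ordinary next-token head; heads[k]
    guesses the token k positions further ahead from the SAME hidden state,
    so one forward pass yields len(heads) candidate tokens instead of one."""
    return [argmax(linear(w, hidden)) for w in heads]


def random_head(vocab: int, dim: int, rng: random.Random) -> Matrix:
    """Untrained placeholder weights, only to make the shapes concrete."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(vocab)]


# Hypothetical sizes; 10 heads mirrors the "10 tokens at a time" figure.
VOCAB, DIM, NUM_HEADS = 50, 8, 10
rng = random.Random(0)
heads = [random_head(VOCAB, DIM, rng) for _ in range(NUM_HEADS)]
hidden_state = [rng.gauss(0.0, 1.0) for _ in range(DIM)]
candidates = predict_block(hidden_state, heads)
print(len(candidates), candidates)  # 10 token ids from one decoder step
```

The point of the design is that the expensive part (computing the hidden state) happens once, while each extra head adds only a small projection on top of it.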

Gill Hetz, aiOla's vice president of research, said that open-sourcing the model encourages community innovation and collaboration, helping it improve faster and become more complete. This work could pave the way for sophisticated AI systems that understand and answer user questions in almost real time.

In an era when foundation models can generate all kinds of content, advanced speech recognition remains crucial. Whisper, for example, can handle complex speech across different languages and accents. Downloaded more than 5 million times a month and underpinning many applications, it has become the gold standard for speech recognition.

So what’s so special about aiOla’s Whisper-Medusa?

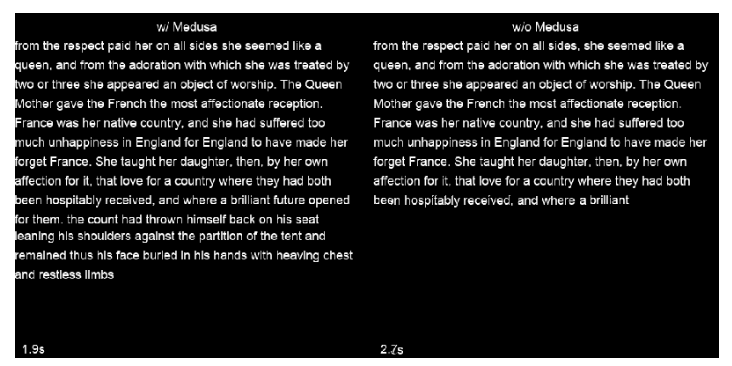

The company modified Whisper's architecture by adding a multi-head attention mechanism that predicts 10 tokens at a time, raising speed by 50%. Because Whisper-Medusa's backbone is Whisper itself, the speedup does not come at the expense of accuracy. The model was trained with a weakly supervised machine learning method, and more powerful versions are planned.
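The article does not explain how speed can rise without any loss of accuracy. In Medusa-style decoding, which the model's name suggests, the extra heads' guesses are typically checked against the base model and only the prefix it agrees with is kept. The toy sketch below assumes that draft-and-verify scheme; `decode`, `accept_prefix`, `toy_base`, `toy_draft` and `TARGET` are all hypothetical. In a real model all guesses are verified in one batched forward pass; the Python loop here only mimics the acceptance rule.

```python
from typing import Callable, List, Tuple

NextFn = Callable[[List[int]], int]              # base model: greedy next token
DraftFn = Callable[[List[int], int], List[int]]  # extra heads: k guessed tokens


def accept_prefix(prefix: List[int], guesses: List[int], base_next: NextFn) -> List[int]:
    """Keep guesses only while they agree with the base model's own choice.
    At the first disagreement the base model's token is used instead, so the
    result is identical to ordinary one-token-at-a-time greedy decoding."""
    accepted: List[int] = []
    for guess in guesses:
        expected = base_next(prefix + accepted)
        accepted.append(expected)
        if guess != expected:
            break
    return accepted


def decode(base_next: NextFn, draft: DraftFn, k: int, eos: int,
           max_len: int = 100) -> Tuple[List[int], int]:
    """Return (tokens, number of draft-and-verify rounds)."""
    if k < 1:
        raise ValueError("need at least one guess per round")
    tokens: List[int] = []
    rounds = 0
    while len(tokens) < max_len:
        tokens += accept_prefix(tokens, draft(tokens, k), base_next)
        rounds += 1
        if eos in tokens:  # stop at (and keep) the first end-of-sequence token
            tokens = tokens[: tokens.index(eos) + 1]
            break
    return tokens[:max_len], rounds


# Toy stand-ins (hypothetical): the "base model" always emits TARGET, and the
# draft heads copy TARGET but get every fifth position wrong.
TARGET = [5, 3, 8, 8, 1, 9, 2, 7, 4, 0]  # 0 plays the role of end-of-sequence
EOS = 0


def toy_base(prefix: List[int]) -> int:
    return TARGET[len(prefix)] if len(prefix) < len(TARGET) else EOS


def toy_draft(prefix: List[int], k: int) -> List[int]:
    guesses = []
    for pos in range(len(prefix), len(prefix) + k):
        tok = TARGET[pos] if pos < len(TARGET) else EOS
        guesses.append(tok + 1 if pos % 5 == 4 else tok)  # deliberate miss
    return guesses


tokens, rounds = decode(toy_base, toy_draft, k=4, eos=EOS)
print(tokens, rounds)  # [5, 3, 8, 8, 1, 9, 2, 7, 4, 0] 4
```

Plain greedy decoding would need 10 rounds for this output; here 4 suffice, and the tokens are exactly what the base model alone would produce. Real speedups depend on how often guesses are accepted, and each verification round costs somewhat more than a plain step, so fewer rounds do not translate one-for-one into wall-clock gains.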

When training Whisper-Medusa, aiOla used a machine learning method called weak supervision. As part of this, it froze Whisper's main components and trained an additional token prediction module, using the transcriptions Whisper itself generated as labels.
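The weak-supervision recipe described above (freeze the backbone, label data with the model's own transcriptions, train only the new module) can be illustrated with a deliberately tiny sketch. Everything here is a hypothetical stand-in, not aiOla's training code: the "backbone" is a frozen one-hot feature function, the "base model" is a fixed toy rule, and the new head is trained with a multiclass perceptron instead of the gradient-based training a real model would use.

```python
from typing import List, Tuple

VOCAB = 7
Example = Tuple[List[float], int]  # (frozen hidden state, pseudo-label)


def frozen_backbone(token: int) -> List[float]:
    """Frozen feature extractor; never updated. Toy version: one-hot encoding."""
    return [1.0 if i == token else 0.0 for i in range(VOCAB)]


def frozen_base_transcribe(start: int, length: int) -> List[int]:
    """Stand-in for the frozen base model's own transcript (toy rule)."""
    out = [start]
    while len(out) < length:
        out.append((out[-1] * 3 + 1) % VOCAB)
    return out


def make_pseudo_labels(transcripts: List[List[int]], offset: int) -> List[Example]:
    """Pair the hidden state at step t with the base model's token at t + offset.
    No human annotation is involved: the labels are the model's own output."""
    return [(frozen_backbone(seq[t]), seq[t + offset])
            for seq in transcripts for t in range(len(seq) - offset)]


def predict(weights: List[List[float]], x: List[float]) -> int:
    """Highest-scoring token for input x (first one on ties)."""
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    return max(range(len(scores)), key=scores.__getitem__)


def train_head(data: List[Example], epochs: int = 20) -> List[List[float]]:
    """Multiclass perceptron. Only the new head's weights are updated."""
    weights = [[0.0] * VOCAB for _ in range(VOCAB)]  # one row per output token
    for _ in range(epochs):
        for x, y in data:
            pred = predict(weights, x)
            if pred != y:
                for i, xi in enumerate(x):
                    weights[y][i] += xi
                    weights[pred][i] -= xi
    return weights


def accuracy(weights: List[List[float]], data: List[Example]) -> float:
    return sum(predict(weights, x) == y for x, y in data) / len(data)


transcripts = [frozen_base_transcribe(s, 12) for s in range(VOCAB)]
data = make_pseudo_labels(transcripts, offset=2)  # a head guessing 2 tokens ahead
head = train_head(data)
print(accuracy(head, data))  # 1.0: the head reproduces the base model's choices
```

Note that accuracy is measured against the base model's outputs rather than human ground truth. That is the intent of this setup: the extra head only needs to predict what the frozen model would say anyway.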

When asked whether any companies had early access to Whisper-Medusa, Hetz said the model has been tested on real enterprise data use cases and runs accurately in real-world scenarios, which should make voice applications more responsive. Ultimately, he believes faster recognition and transcription will shorten turnaround times for voice applications and pave the way for real-time responses.

Highlights:

50% faster: aiOla's Whisper-Medusa is significantly faster than OpenAI's Whisper speech recognition model.

No loss of accuracy: The speed gain comes while maintaining the same accuracy as the original model.

Broad application prospects: It is expected to speed up responses, improve efficiency and reduce costs in voice applications.

All in all, with its speed advantage and open-source license, aiOla's Whisper-Medusa is expected to spark a new wave in speech recognition and bring significant performance gains to a wide range of voice applications. Downcodes' editor will keep following the model's further development and community contributions.