Mistral AI has released Pixtral 12B, its first open-source multimodal model. With 12 billion parameters and the ability to process both images and text, it is positioned against Anthropic's Claude series and OpenAI's GPT-4. Mistral AI has published the model weights directly and also provides a magnet link for download, which lowers the barrier to entry for developers and researchers. At 23.64GB, Pixtral 12B is lightweight for a multimodal model, which reduces energy consumption and makes it easier to deploy, and it can be downloaded in a few minutes over a high-speed connection.

Mistral AI has once again drawn the attention of the AI community by launching Pixtral 12B, its first open-source multimodal model. The model can process images and text together, and it has attracted attention for its openness as much as for its technology: Mistral AI has published the model weights online and also provides a magnet link.

Pixtral 12B stands out for its compact design as well as its capabilities. The total model size is only 23.64GB, which is small for a multimodal model. This reduces energy consumption and lowers the barrier to deployment, so more developers and researchers can get started. Users with high-speed Internet connections can reportedly complete the download in just a few minutes.
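As a rough sanity check on these figures, the sketch below estimates the weight size from the parameter count and the ideal download time at a few link speeds. The 2 bytes per parameter (16-bit weights), the decimal gigabytes, and the link speeds are assumptions chosen for illustration, not figures from Mistral AI.

```python
# Back-of-the-envelope check of the reported checkpoint size and download time.
# Assumptions (not from the announcement): 16-bit weights, decimal gigabytes,
# and an ideal link with no protocol overhead.

REPORTED_SIZE_GB = 23.64   # size quoted in the article
PARAMS = 12e9              # nominal "12 billion" parameters
BYTES_PER_PARAM = 2        # assumed bf16/fp16 storage


def estimated_size_gb(params: float, bytes_per_param: int) -> float:
    """Approximate weight size in decimal gigabytes."""
    return params * bytes_per_param / 1e9


def download_minutes(size_gb: float, link_mbps: float) -> float:
    """Ideal transfer time in minutes for a link speed in megabits per second."""
    size_megabits = size_gb * 1000 * 8
    return size_megabits / link_mbps / 60


if __name__ == "__main__":
    print(f"estimated size: {estimated_size_gb(PARAMS, BYTES_PER_PARAM):.1f} GB")
    for mbps in (100, 1000, 10000):
        minutes = download_minutes(REPORTED_SIZE_GB, mbps)
        print(f"{mbps:>6} Mbit/s: {minutes:.1f} min")
```

Under these assumptions, 12 billion 16-bit parameters come to about 24 GB, close to the reported 23.64GB, and a 1 Gbit/s link would need roughly three minutes, while a 100 Mbit/s link would need about half an hour.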

Pixtral 12B is Mistral AI's latest model. It is built on the company's text model Nemo 12B and has 12 billion parameters. Its capabilities are described as comparable to well-known multimodal models such as Anthropic's Claude series and OpenAI's GPT-4, and it can understand and answer a range of complex image-related questions.

In terms of technical specifications, Pixtral 12B has a 40-layer network, a hidden dimension of 14,336, 32 attention heads, and a dedicated 400M-parameter vision encoder that supports images at 1024x1024 resolution.

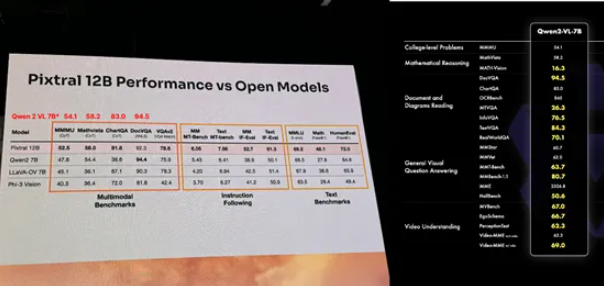
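To make the quoted specifications easier to reason about, here is a minimal sketch that records them in a small data class and derives two quantities from them. The field names are illustrative and do not come from the model's configuration files, and the 16-pixel patch size in the usage example is an assumption that the article does not state.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PixtralSpecs:
    """Figures quoted in the article; field names are illustrative only."""
    total_params: float = 12e9
    num_layers: int = 40
    hidden_dim: int = 14_336
    num_attention_heads: int = 32
    vision_encoder_params: float = 400e6
    max_image_side: int = 1024


def vision_share(specs: PixtralSpecs) -> float:
    """Fraction of all parameters that belong to the vision encoder."""
    return specs.vision_encoder_params / specs.total_params


def patch_count(image_side: int, patch_size: int) -> int:
    """Number of square patches in a square image (patch_size is assumed)."""
    if image_side % patch_size != 0:
        raise ValueError("image side must be a multiple of the patch size")
    per_side = image_side // patch_size
    return per_side * per_side


if __name__ == "__main__":
    specs = PixtralSpecs()
    print(f"vision encoder share: {vision_share(specs):.1%}")
    # Hypothetical 16-pixel patches, used here only for illustration.
    print(f"patches per image: {patch_count(specs.max_image_side, 16)}")
```

With these numbers the vision encoder accounts for roughly 3% of the parameters, and a 1024x1024 image split into 16-pixel patches would yield 4,096 patches.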

Pixtral 12B has also performed well on several established benchmarks. On MMMU, MathVista, ChartQA, and DocVQA, its results surpass those of several well-known multimodal models, including Phi-3 and Qwen2-7B.

Mistral AI's move is likely to further promote open-source multimodal models. The community has responded enthusiastically, with many developers and researchers eager to start exploring what Pixtral 12B can do. This reflects the vitality of the open-source community and suggests that multimodal AI may be entering a new round of innovation.

With the release of Pixtral 12B, more innovative applications can be expected. In image understanding, document analysis, and cross-modal reasoning, this model may enable significant progress. The release also helps make AI technology more widely accessible. It remains to be seen how it will reshape the AI field.

Hugging Face address: https://huggingface.co/mistral-community/pixtral-12b-240910
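For readers who prefer a script to a browser or magnet link, the following is a minimal sketch of fetching the repository with the `huggingface_hub` package. It assumes the package is installed and that the repository is still publicly accessible at the address above. The helper names and the target directory are hypothetical.

```python
from urllib.parse import urlparse

MODEL_URL = "https://huggingface.co/mistral-community/pixtral-12b-240910"


def repo_id_from_url(url: str) -> str:
    """Extract the '<owner>/<name>' repository id from a model page URL."""
    parts = [p for p in urlparse(url).path.split("/") if p]
    if len(parts) < 2:
        raise ValueError(f"not a model URL: {url}")
    return "/".join(parts[:2])


def download_weights(url: str, target_dir: str) -> str:
    """Download every file in the repository and return the local folder."""
    # Imported lazily so repo_id_from_url works without the package installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=repo_id_from_url(url), local_dir=target_dir)


if __name__ == "__main__":
    print(repo_id_from_url(MODEL_URL))
    # Uncomment to fetch the weights (roughly 24 GB of free disk space needed):
    # download_weights(MODEL_URL, "./pixtral-12b")
```

The download call is left commented out because of the size of the transfer.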

The open-source release of Pixtral 12B marks a new stage in the development of multimodal AI. Its lightweight design and strong performance should help broaden the adoption of AI technology, and we look forward to seeing more applications built on Pixtral 12B.