Alibaba has announced that it is open-sourcing its second-generation vision-language model, Qwen2-VL, and offering an API alongside the open-source code for developers. The model makes significant progress in image and video understanding, supports multiple languages, and has strong visual agent capabilities that let it operate mobile phones and robots autonomously. Qwen2-VL comes in three sizes, 2B, 7B, and 72B, to cover different application scenarios. The 72B model performs best on most benchmarks, while the 2B model is suited to mobile applications.

On September 2, the Tongyi Qianwen team announced the open-sourcing of its second-generation vision-language model, Qwen2-VL, releasing the 2B and 7B sizes together with their quantized versions. The API for the flagship Qwen2-VL-72B is available on the Alibaba Cloud Bailian platform for users to call directly.

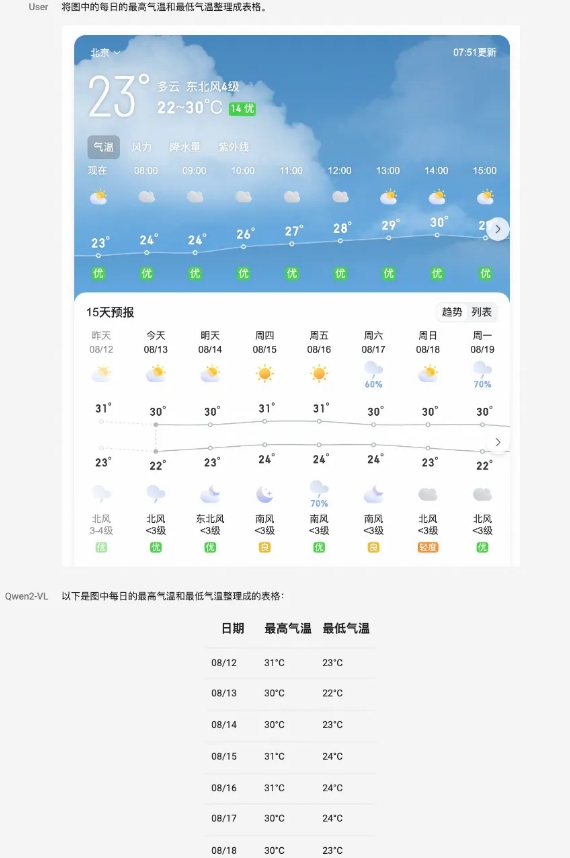

Qwen2-VL improves on its predecessor in several respects. It can understand images of varying resolutions and aspect ratios, and it achieves world-leading results on benchmarks such as DocVQA, RealWorldQA, and MTVQA. The model can also understand videos longer than 20 minutes, which supports video-based question answering, dialogue, and content creation. In addition, Qwen2-VL has strong visual agent capabilities: drawing on complex reasoning and decision-making, it can operate mobile phones and robots autonomously.

The model can understand multilingual text in images and videos, including Chinese, English, most European languages, Japanese, Korean, Arabic, Vietnamese, and more. The Tongyi Qianwen team evaluated the model in six areas: comprehensive college-level questions; mathematical ability; understanding of documents, tables, and multilingual text in images; general-scene question answering; video understanding; and agent capabilities.

As the flagship model, Qwen2-VL-72B achieves the best results on most metrics. Qwen2-VL-7B delivers highly competitive performance at a more economical parameter count, while Qwen2-VL-2B targets mobile applications and still offers full multilingual image and video understanding.

In terms of architecture, Qwen2-VL keeps the series' design of a ViT paired with Qwen2. All three model sizes use a ViT of about 600M parameters that accepts both images and videos as input. To improve the model's perception of visual information and its video understanding, the team upgraded the architecture in two ways: full support for native dynamic resolution, and the Multimodal Rotary Position Embedding (M-RoPE) method.
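To make native dynamic resolution more concrete, here is a rough sketch of how the number of visual tokens could scale with image size. It assumes a ViT patch size of 14 pixels and a 2x2 merge of neighbouring patches into one token, figures the article itself does not state. The helper `visual_token_count` is hypothetical and omits details such as pixel budgets and special start/end tokens.

```python
# Assumptions (not stated in the article): 14-pixel ViT patches, and every
# 2x2 group of neighbouring patches merged into a single visual token.
PATCH_SIZE = 14
MERGE_SIZE = 2


def visual_token_count(height: int, width: int) -> int:
    """Estimate visual tokens for one image (hypothetical helper)."""
    unit = PATCH_SIZE * MERGE_SIZE  # 28 pixels covered by one merged token edge
    # Snap each side to the nearest multiple of `unit`, keeping at least one.
    rows = max(1, round(height / unit))
    cols = max(1, round(width / unit))
    return rows * cols


if __name__ == "__main__":
    for h, w in [(224, 224), (448, 1344), (1080, 1920)]:
        print(f"{h}x{w}: {visual_token_count(h, w)} visual tokens")
```

Under these assumptions a 224x224 image maps to 64 tokens, while larger or wider images receive proportionally more, instead of every input being resized to one fixed shape.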

The Alibaba Cloud Bailian platform provides an API for Qwen2-VL-72B that users can call directly. Meanwhile, the open-source Qwen2-VL-2B and Qwen2-VL-7B models have been integrated into Hugging Face Transformers, vLLM, and other third-party frameworks, so developers can download and run them through those tools.
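As a minimal sketch of what a request to the open-source models might look like, the snippet below builds a single-turn chat message that pairs an image with a question. The list-of-parts message layout follows the convention shown in the Qwen2-VL repository; the helper name `build_image_question`, the file path, and the question are illustrative assumptions.

```python
from typing import Any, Dict, List


def build_image_question(image_ref: str, question: str) -> List[Dict[str, Any]]:
    """Build a one-turn chat message with an image and a text question.

    Hypothetical helper: `image_ref` may be a local path, a file:// URI,
    or an http(s) URL, depending on what the serving framework accepts.
    """
    return [
        {
            "role": "user",
            "content": [
                {"type": "image", "image": image_ref},
                {"type": "text", "text": question},
            ],
        }
    ]


# Illustrative values only; replace with a real image and prompt.
messages = build_image_question(
    "file:///path/to/invoice.png",
    "What is the total amount on this invoice?",
)
print(messages)
```

With Hugging Face Transformers, a list like this is typically passed to the processor's `apply_chat_template` method before generation; check the model card for the exact, current usage.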

Alibaba Cloud Bailian Platform:

https://help.aliyun.com/zh/model-studio/developer-reference/qwen-vl-api

GitHub:

https://github.com/QwenLM/Qwen2-VL

HuggingFace:

https://huggingface.co/collections/Qwen/qwen2-vl-66cee7455501d7126940800d

ModelScope:

https://modelscope.cn/organization/qwen?tab=model

Online demo:

https://huggingface.co/spaces/Qwen/Qwen2-VL

In short, the open-sourcing of Qwen2-VL gives developers a powerful tool, advances vision-language model technology, and opens up more possibilities across application scenarios. Developers can obtain the models and code through the links above and start building their own applications.