Alibaba's Tongyi Qianwen team has released Qwen2-Math, a family of math-specialized models. It outperforms GPT-4 on multiple benchmarks, and its 7B version even surpasses the 72B version of the open-source model NuminaMath. The demo handles math problems entered as text and also recognizes formulas in pictures and screenshots, making it a powerful aid for learning mathematics. Three sizes (72B, 7B and 1.5B) cover different needs.

Alibaba's Tongyi Qianwen team is making headlines again! They have just released the Qwen2-Math Demo. This math model is a little monster: even GPT-4 gets trampled underfoot.

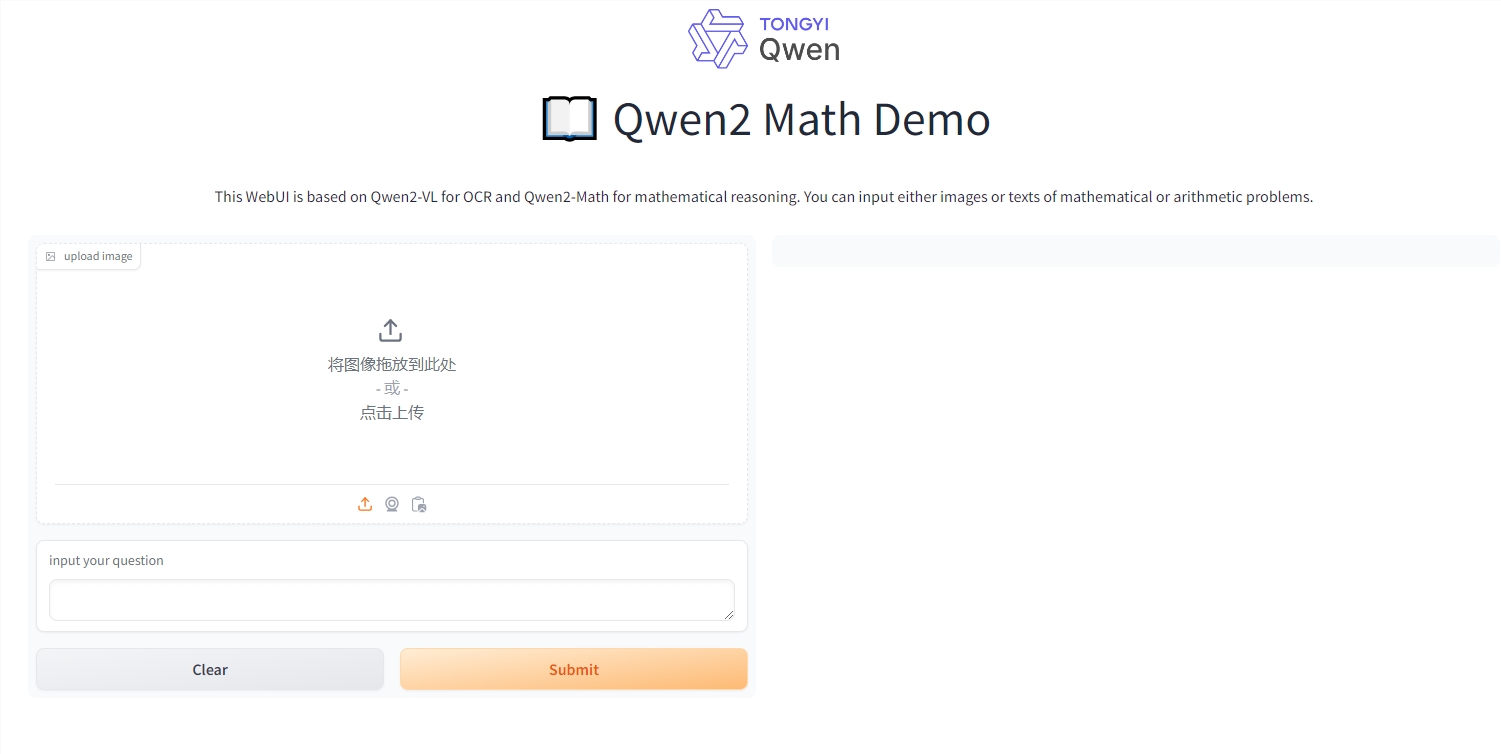

The model handles math problems typed in as text, and it can also read formulas in pictures and screenshots. Imagine snapping a photo of a calculation and getting the answer back. It is practically a cheat code for math class! (Of course, we do not advocate cheating.)

Qwen2-Math comes in three versions: 72B, 7B and 1.5B. Of these, the 72B version is the math genius. It scored 7 points higher than GPT-4 on the MATH dataset, a relative improvement of 9.6%. It's as if you scored 145 on the college entrance exam math test while the top student next to you scored only 132.
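To see how a point gap turns into a percentage, here is a minimal sketch of the arithmetic. The helper name `relative_gain` is made up for illustration, and the numbers are the exam analogy from the paragraph above, not benchmark scores.

```python
def relative_gain(new_score: float, baseline: float) -> float:
    """Return how much new_score exceeds baseline, as a percentage of baseline."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (new_score - baseline) / baseline * 100.0


# The exam analogy: 145 points versus 132 points.
analogy_gain = relative_gain(145, 132)
print(f"{analogy_gain:.2f}%")
```

A 13-point lead over 132 works out to a gain of just under 10%, so the analogy lands in the same ballpark as the 9.6% quoted for the MATH dataset.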

Even more striking, the 7B version, with less than one-tenth of the parameters, surpasses the 72B open-source math model NuminaMath. NuminaMath, remember, is the model that won the first-ever AIMO, with the award presented in person by Terence Tao, one of the leading figures in mathematics.

Lin Junyang, a senior algorithm expert at Alibaba, excitedly announced that the team has turned the Qwen2 model into a math master. How did they do it? They fed it a special brain supplement for math: a carefully designed, mathematics-specific corpus. It contains a large amount of high-quality mathematical web text, books, code and exam questions, plus math problems generated by the Qwen2 model itself.

The result? On classic math test sets such as GSM8K and MATH, Qwen2-Math-72B left the 405B Llama-3.1 behind. These test sets are no joke: they cover algebra, geometry, probability, number theory and more.

Qwen2-Math also took on the Chinese dataset CMATH and college entrance exam questions. On the Chinese datasets, even the 1.5B version beats the 70B Llama-3.1. And at every size, Qwen2-Math clearly outperforms the Qwen2 base model of the same scale.

It looks like Tongyi Qianwen really has brought in a math genius this time! Will we be asking it for help with math problems from now on? But remember, it is just a tool. Don't let its intelligence dazzle you; you still need to practice your own math skills!

Try the online demo: https://huggingface.co/spaces/Qwen/Qwen2-Math-Demo

The arrival of Qwen2-Math marks significant progress for large language models in mathematics. It is a powerful tool, but developing your own mathematical ability matters more: don't lean on the tool and skip the learning. We look forward to seeing Qwen2-Math play a role in more fields, making learning and scientific research more convenient.