Primate Labs has launched Geekbench AI, a cross-platform benchmark that evaluates how devices perform under AI-intensive workloads. It tests the CPU, GPU, and NPU to gauge a device's ability to run machine learning applications. The tool was previously developed under the name Geekbench ML; the new name better matches how the industry now describes this kind of workload. Geekbench AI measures both speed and accuracy, supports multiple frameworks including ONNX, CoreML, TensorFlow Lite, and OpenVINO, and reports three scores: full precision, half precision, and quantized.

Geekbench AI is available on Windows, macOS, Linux, Android, and iOS. Its results should help consumers compare the AI performance of different devices and give hardware manufacturers a reference for optimizing that performance. AI benchmarking is still in its early stages, however, and the correlation between test results and actual user experience needs further verification. More tools of this kind are likely to appear, and AI performance may become as important a measure of a device as traditional CPU and GPU performance.

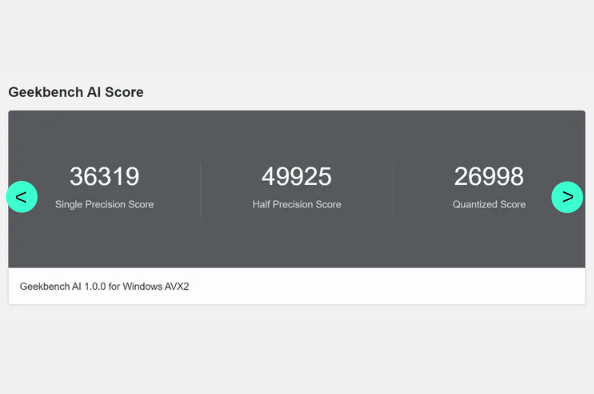

Geekbench AI reports three scores: full precision, half precision, and quantized. Primate Labs says the results also include an accuracy measure that evaluates how close a workload's output is to real-world results, that is, how accurately the model performs its intended task.
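To make the relationship between precision and accuracy concrete, here is a minimal Python sketch. It is not Geekbench AI's methodology: the helper names (`to_half`, `quantize_int8`, `relative_error`), the sample weights, and the symmetric int8 scheme are all assumptions chosen for illustration. It runs the same tiny dot product at full precision, at half precision, and with int8-quantized weights, then reports how far each result drifts from the full-precision reference.

```python
import struct


def to_half(x: float) -> float:
    """Round a float to IEEE 754 half precision (16-bit) and back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def quantize_int8(values):
    """Symmetric per-tensor int8 quantization (illustrative scheme only).

    Returns the integer codes and the scale needed to map them back.
    """
    max_abs = max(abs(v) for v in values) or 1.0
    scale = max_abs / 127.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map int8 codes back to approximate real values."""
    return [c * scale for c in codes]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def relative_error(reference: float, approx: float) -> float:
    """Relative deviation of an approximate result from the reference."""
    return abs(reference - approx) / abs(reference)


# Hypothetical "model": a single dot product of weights and inputs.
weights = [0.12, -0.53, 0.91, 0.07, -0.33]
inputs = [1.5, -2.0, 0.25, 3.0, -1.0]

full = dot(weights, inputs)
half = dot([to_half(w) for w in weights], [to_half(x) for x in inputs])
codes, scale = quantize_int8(weights)
quant = dot(dequantize(codes, scale), inputs)

for name, value in [("full", full), ("half", half), ("quantized", quant)]:
    err = relative_error(full, value)
    print(f"{name:>9}: {value:.6f}  relative error vs full: {err:.2e}")
```

Lower-precision arithmetic is usually faster and lighter on memory, but it can move the output away from the reference. That trade-off is why a speed score on its own says little without an accompanying accuracy figure.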

Geekbench AI can be downloaded and tried now on Windows, macOS, Linux, Android, and iOS. However, to fully understand how these scores relate to performance on actual tasks, we will need more time testing devices with native AI capabilities, such as Copilot+ PCs and various new smartphones.

Traditional benchmarks focus on frame rates or load times. Geekbench AI suggests we may need to start paying attention to new metrics, such as the accuracy of predictive text or the performance of generative AI image editors. AI technology is changing how we evaluate device performance.

The launch of Geekbench AI offers a new yardstick for evaluating a device's AI capabilities. As more devices integrate AI functions, tools like this will matter more. They help consumers understand and compare the AI performance of different devices, and they give hardware manufacturers reference metrics for optimizing that performance.

However, we should also recognize that AI performance testing is still in its early stages. How Geekbench AI's results relate to actual user experience, and how well they reflect device performance across different AI application scenarios, will require further observation and verification.

In the future, we may see more AI benchmarking tools emerge that evaluate devices' AI capabilities from different perspectives. The trend suggests that AI performance is becoming a dimension of device performance as important as traditional CPU and GPU performance.

All in all, the emergence of Geekbench AI marks a growing emphasis on AI performance evaluation. It gives consumers and manufacturers an important reference, but it will need continuous improvement to better reflect AI performance in actual application scenarios.