Anthropic has launched prompt caching, a new feature for its Claude series of large language models that aims to reduce the cost of using AI and improve performance. The move is seen as a distinctive strategy: in competing with giants such as OpenAI, Google, and Microsoft, Anthropic has chosen to focus on efficiency and cost. The feature is currently in public beta on the API for the Claude 3.5 Sonnet and Claude 3 Haiku models, and the company promises cost reductions of up to 90% and faster responses in some application scenarios. The actual effect, however, remains to be tested in the market.

On August 14, Anthropic announced a new feature for its Claude series of large language models called prompt caching, claiming that it can greatly reduce the cost of using AI and improve performance. Whether the feature works as well as the company says remains to be tested in the market.

Prompt caching will enter public beta on the API for the Claude 3.5 Sonnet and Claude 3 Haiku models. It lets users store and reuse specific context information, including complex instructions and data, without additional cost or added latency. A company spokesperson said it is one of many cutting-edge features the company has developed to enhance Claude's capabilities.
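To make "store and reuse specific context" concrete, here is a minimal sketch of how a request might mark a long block of context as cacheable. It only builds the request payload and makes no network call. The field names (`system`, `cache_control`, `"ephemeral"`) and the model identifier reflect one reading of Anthropic's public API documentation for the beta and should be treated as assumptions to verify; the helper `build_cached_request` is hypothetical.

```python
def build_cached_request(instructions: str, document: str, question: str) -> dict:
    """Build a request body whose large, stable prefix is marked for caching.

    Assumption: a content block carrying a "cache_control" entry asks the API
    to cache everything up to and including that block.
    """
    return {
        "model": "claude-3-5-sonnet-20240620",  # illustrative model identifier
        "max_tokens": 1024,
        "system": [
            {"type": "text", "text": instructions},
            {
                "type": "text",
                "text": document,
                # Assumed marker for the end of the reusable prefix.
                "cache_control": {"type": "ephemeral"},
            },
        ],
        # Only this part changes from one query to the next.
        "messages": [{"role": "user", "content": question}],
    }


# Two queries against the same document share an identical prefix.
first = build_cached_request("Answer from the report only.", "<long report>", "Summarize it.")
second = build_cached_request("Answer from the report only.", "<long report>", "List the risks.")
```

The design point is that the instructions and document stay byte-for-byte identical across calls, so only the short user question differs; a prefix that changes between requests would presumably not be reusable.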

Technology giants such as OpenAI, Google, and Microsoft are competing fiercely in the field of large language models, with each company trying to improve the performance and market competitiveness of its products. In this contest, Anthropic has chosen to compete on efficiency and cost, a distinctive market strategy.

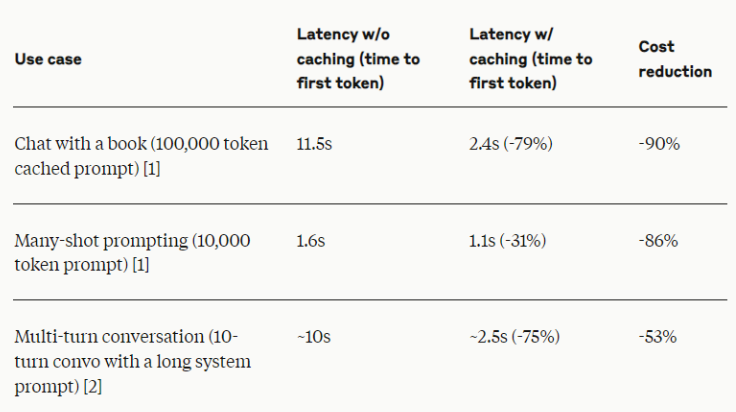

According to Anthropic, the new feature can cut costs by up to 90% and double response speed in some application scenarios. These figures are impressive, but industry experts caution that actual results may vary with the specific use case and implementation.
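A rough back-of-the-envelope model shows why an "up to 90%" figure depends heavily on how often the cached context is reused. The prices and multipliers below are hypothetical placeholders, not Anthropic's published rates, and the sketch assumes the cached prefix never expires between calls.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CachePricing:
    """Hypothetical prices; replace with real rates before drawing conclusions."""
    base_input: float = 3.00        # assumed price per million uncached input tokens
    cache_write_mult: float = 1.25  # assumed premium for the first, cache-writing call
    cache_read_mult: float = 0.10   # assumed rate for reading a cached prefix


def input_cost(prefix_tokens: int, suffix_tokens: int, calls: int,
               pricing: CachePricing, cached: bool) -> float:
    """Total input cost for `calls` requests sharing one prefix."""
    per_token = pricing.base_input / 1_000_000
    if not cached:
        return calls * (prefix_tokens + suffix_tokens) * per_token
    write = prefix_tokens * per_token * pricing.cache_write_mult
    reads = (calls - 1) * prefix_tokens * per_token * pricing.cache_read_mult
    suffixes = calls * suffix_tokens * per_token
    return write + reads + suffixes


def savings_fraction(prefix_tokens: int, suffix_tokens: int, calls: int,
                     pricing: CachePricing = CachePricing()) -> float:
    """Fraction of input cost saved by caching (negative means caching costs more)."""
    plain = input_cost(prefix_tokens, suffix_tokens, calls, pricing, cached=False)
    with_cache = input_cost(prefix_tokens, suffix_tokens, calls, pricing, cached=True)
    return 1 - with_cache / plain


many_calls = savings_fraction(prefix_tokens=100_000, suffix_tokens=100, calls=50)
single_call = savings_fraction(prefix_tokens=100_000, suffix_tokens=100, calls=1)
```

Under these assumed numbers, savings approach the read discount only when a large prefix is reused many times, while a single call with caching enabled costs more than one without it. This is consistent with the experts' caution that results depend on the application.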

Anthropic said that prompt caching is particularly suited to scenarios that need consistent context across multiple queries or sessions, such as long-running conversations, large-scale document processing, coding assistance, and complex tool use. The approach is expected to bring efficiency gains to a range of commercial AI applications.

Industry insiders pointed out that although Anthropic's new feature looks promising, other AI companies are also actively exploring ways to improve model efficiency and reduce usage costs. OpenAI, for example, offers model options at different capability and price levels, while Google is working on models that run efficiently on ordinary hardware.

The market remains cautious about the feature's actual effect. As with any new technology, especially in the fast-moving AI field, the real-world performance of prompt caching remains to be seen. Anthropic said it will work closely with customers to collect relevant data and feedback, which is in line with industry best practice for evaluating the impact of new AI technology.

Anthropic's initiative may have a broad impact on the AI industry, especially in bringing advanced AI capabilities to small and medium-sized enterprises. If the feature is as effective as advertised, it may lower the barrier for companies to adopt sophisticated AI solutions and so encourage the use of AI across a wider range of businesses.

As the public beta progresses, enterprises and developers will have the opportunity to evaluate for themselves how prompt caching actually performs and how it fits into their own AI strategies. Over the next few months, we can expect to see how this approach to managing AI prompts and context performs in practice.

Anthropic's prompt caching feature is an interesting attempt by the AI industry to optimize efficiency and cost. Whether it can truly drive change across the industry, however, remains to be tested in the market. In any case, the innovation reflects how AI companies keep exploring new directions under fierce competition, and it suggests that AI technology may be entering a new round of efficiency gains.

All in all, the success of Anthropic's prompt caching feature hinges on how it performs in real applications. Its subsequent market performance will be the ultimate test of the technology and may also offer new lessons for the AI industry as a whole.