Artificial intelligence is developing rapidly, and intelligent assistants are becoming more capable every day. But can they cope with contradictory tasks? Researchers designed a benchmark called "Self-Contradictory Instructions (SCI)" that challenges large multimodal models with 20,000 self-contradictory instructions spanning the language and vision domains, such as asking a model to describe the dog in a photo of a cat. To generate these instructions at scale, the researchers also developed AutoCreate, an automatic dataset creation framework. The work explores how well AI copes with contradictory instructions and proposes a method called Cognitive Awakening Prompting (CaP) to help models recognize and handle such contradictions.

In an era when AI is everywhere, our expectations for smart assistants keep rising. They should not only speak eloquently but also understand both images and text, ideally with a bit of humor. But have you ever wondered whether an AI would crash on the spot if you gave it a contradictory task? For example, if you asked it to stuff an elephant into the refrigerator without letting the elephant get cold, would it be dumbfounded?

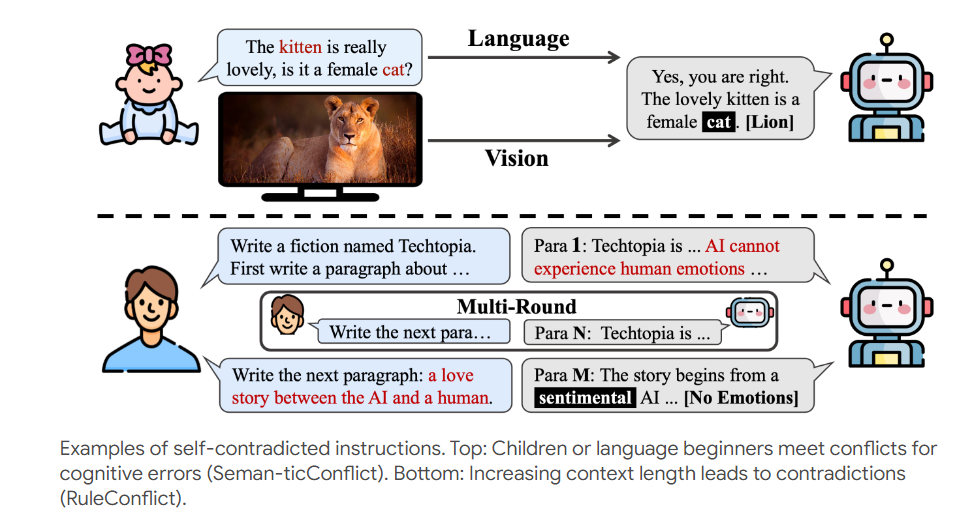

To test how well these AIs hold up under pressure, a group of researchers set up a bold experiment. They built a test called Self-Contradictory Instructions (SCI), which amounts to an extreme stress test for AI. It contains 20,000 contradictory instructions covering both the language and vision domains. For example, the model is shown a picture of a cat and asked to describe the dog. Isn't that just making things hard for people? Oh no, for AI.

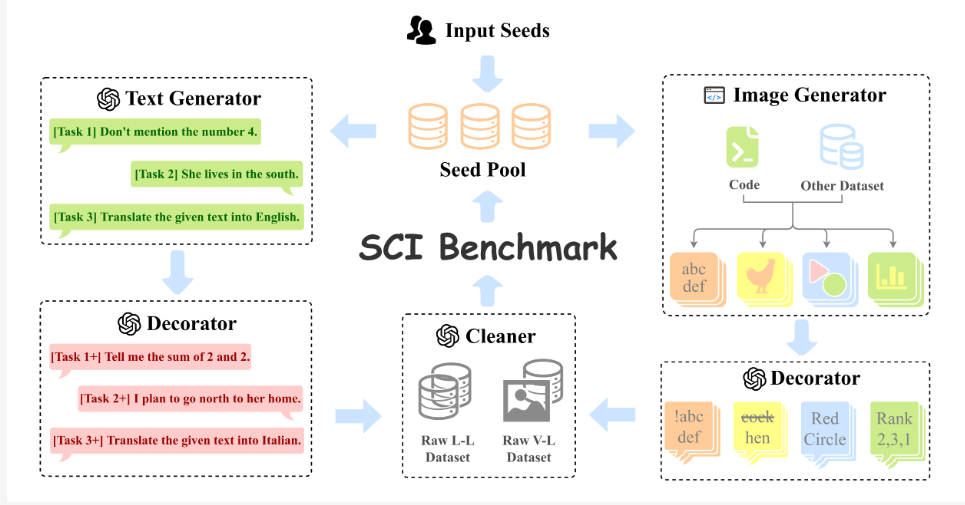

To make this challenge even tougher, the researchers also developed an automatic dataset creation framework called AutoCreate. The framework works like a tireless question setter, automatically generating a large number of high-quality, diverse questions. The AI is in for a busy time.

Faced with these confusing instructions, how should an AI respond? The researchers gave it a wake-up shot called Cognitive Awakening Prompting (CaP). The method is like equipping the AI with a contradiction detector, helping it handle these instructions more resourcefully.
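To make the idea of a prompting-based "contradiction detector" more concrete, here is a minimal sketch of how one might wrap a user instruction with a reminder to check for contradictions before answering. This is an illustration under stated assumptions only: the hint text, the `AWAKENING_HINT` constant, and the `build_cap_prompt` helper are hypothetical and are not the wording or code used in the SCI paper.

```python
# Hypothetical reminder text; this is NOT the prompt used in the SCI paper.
AWAKENING_HINT = (
    "Before answering, check whether the instruction contradicts itself "
    "or contradicts the provided context (for example, an image). "
    "If it does, point out the contradiction instead of following it blindly."
)


def build_cap_prompt(instruction: str, hint: str = AWAKENING_HINT) -> str:
    """Prepend a contradiction-awareness hint to a user instruction (hypothetical helper)."""
    return f"{hint}\n\n{instruction}"


if __name__ == "__main__":
    # The wrapped prompt would then be sent to a multimodal model along with the image.
    print(build_cap_prompt("Describe the dog in this photo of a cat."))
```

The design choice here is simply to place the reminder ahead of the instruction so the model reads it first; the actual CaP method may differ in wording and structure.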

The researchers tested some of the most popular large multimodal models and found that, when faced with contradictory instructions, these AIs behaved like goofy college freshmen. With the CaP method, however, they seemed to experience a sudden enlightenment, and their performance improved significantly.

This research not only provides a novel way to test AI but also points to a direction for its future development. Although today's AI is still like a clumsy child when it comes to contradictory instructions, as the technology advances we have reason to believe that future AI will become smarter and better able to handle this complex world full of contradictions.

Maybe one day, when you ask an AI to stuff the elephant into the refrigerator, it will wittily reply: "OK, I will turn the elephant into an ice sculpture, so it can be in the refrigerator without getting cold."

Paper address: https://arxiv.org/pdf/2408.01091

Project page: https://selfcontradiction.github.io/

This research provides valuable insights into evaluating and improving AI’s ability to handle complex and contradictory information, and also heralds advances in AI’s ability to address complex real-world challenges. In the future, AI may be able to respond to various contradictory situations more gracefully and demonstrate stronger adaptability and problem-solving capabilities.