Image matting has long been one of the harder problems in image processing, and traditional methods often struggle with images that contain fine detail. This article introduces "Matting by Generation", a technique that uses generative models to produce mattes more efficiently and accurately, including for objects with complex boundaries such as human hair and animal fur. It needs no additional user input and extracts the foreground automatically from a single image. It can also take auxiliary cues such as text descriptions or image tags to improve accuracy further.

In image processing, matting (separating foreground objects from the background of an image) has long been a challenge. "Matting by Generation" takes a new approach. It uses generative models to improve both the accuracy and the efficiency of matting.
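For context, matting is usually formalized with the standard compositing equation. Each observed pixel I is treated as a blend of a foreground value F and a background value B, weighted by a per-pixel opacity α (the "matte"): I = αF + (1 − α)B. A matting method's job is to estimate α (and often F) from I. The short Python sketch below illustrates only this general equation on a few grayscale pixels. It is not the paper's algorithm, and the function name `composite` is a hypothetical helper chosen for illustration.

```python
from typing import Sequence


def composite(alpha: Sequence[float], fg: Sequence[float], bg: Sequence[float]) -> list[float]:
    """Blend foreground and background pixels with the standard compositing
    equation I = alpha * F + (1 - alpha) * B.

    Hypothetical illustration helper: all inputs are same-length sequences of
    grayscale intensities in [0, 1], and alpha is the per-pixel foreground
    opacity (the matte).
    """
    if not (len(alpha) == len(fg) == len(bg)):
        raise ValueError("alpha, fg and bg must have the same length")
    # Each output pixel is a weighted mix of its foreground and background values.
    return [a * f + (1.0 - a) * b for a, f, b in zip(alpha, fg, bg)]


# Three pixels: pure background, a half-transparent strand of hair, pure foreground.
alpha = [0.0, 0.5, 1.0]
fg = [0.9, 0.9, 0.9]  # bright subject
bg = [0.1, 0.1, 0.1]  # dark background
print(composite(alpha, fg, bg))
```

The middle pixel, with α = 0.5, ends up as an even mix of subject and background. Fine structures such as hair need fractional values like this, and a hard binary mask cannot express them.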

A key feature of the technique is automation. Traditional matting methods often require auxiliary user input, such as outlines marking the object or a specific background color. "Matting by Generation" does not. It extracts the foreground object from a single input image with no additional input.

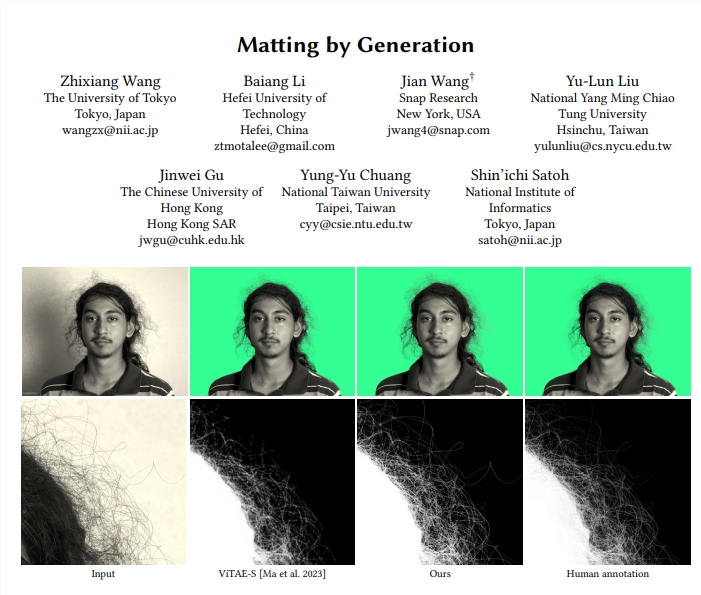

Traditional methods often struggle with objects that have complex boundaries, such as human hair, animal fur, or shoelaces. Matting by Generation performs well in exactly these cases and produces near-photorealistic edges. This is thanks to its latent diffusion model, which is better at capturing and reconstructing the fine details of an image.

A distinctive feature of the "Matting by Generation" approach is that it draws on extensive knowledge acquired during pre-training. When processing an image, the model does not rely only on the current input. It also draws on patterns learned from a wide range of data, which improves both the accuracy of the matte and the amount of detail it preserves.

Although Matting by Generation works without additional input, it can also use several kinds of auxiliary information to improve accuracy. The model can integrate text descriptions, simple image tags, or scribbles to separate foreground from background more precisely.

For example, given an image, you can describe the foreground in a single sentence, such as "a kitten sitting on the grass", or scribble over the area you want to extract. The "Matting by Generation" model uses these cues to produce a more accurate foreground.

"Matting by Generation" represents a significant step forward for image matting, improving both efficiency and quality. As the technology matures, it will be interesting to see how it changes image processing in practical applications.

Paper: https://arxiv.org/pdf/2407.21017

Overall, "Matting by Generation" brings substantial progress to image matting. Its automation, high precision, and ability to handle fine detail give it broad potential in future image processing applications, and we look forward to seeing it applied in more areas.