The rapid development of large language models (LLMs) has brought impressive natural language processing capabilities, but their huge computing and storage requirements limit their adoption. Running a model with 176 billion parameters requires hundreds of gigabytes of storage and multiple high-end GPUs, which makes it expensive and difficult to deploy at scale. To address this, researchers have turned to model compression techniques such as quantization, which reduce model size and hardware requirements but carry a risk of accuracy loss.

Artificial intelligence (AI) keeps getting smarter, especially large language models (LLMs), which are remarkably good at processing natural language. But did you know? Behind these smart AI brains lies an enormous demand for computing power and storage.

Take BLOOM, a multilingual model with 176 billion parameters: it needs at least 350GB of space just to store its weights, and running it requires several high-end GPUs. This is not only costly but also makes the model hard to deploy widely.
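The 350GB figure is consistent with simple arithmetic if each weight is stored in a 16-bit format such as FP16 or BF16 (an assumption on our part): 176 billion weights × 2 bytes ≈ 352GB. The sketch below repeats that calculation for lower bit widths. It counts weights only, ignoring activations, KV cache, and runtime overhead, and the helper name `weight_storage_gb` is hypothetical.

```python
def weight_storage_gb(num_params: float, bits_per_weight: int) -> float:
    """Storage for the weights alone, in gigabytes (1 GB = 1e9 bytes).

    Simplifying assumption: no activations, KV cache, or framework overhead.
    """
    return num_params * bits_per_weight / 8 / 1e9


BLOOM_PARAMS = 176e9  # 176 billion parameters, as stated in the text

for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_storage_gb(BLOOM_PARAMS, bits):.0f} GB")
# 16-bit weights: 352 GB
#  8-bit weights: 176 GB
#  4-bit weights: 88 GB
```

Each halving of the bit width halves the weight storage, which is why lowering the bit width is the main lever quantization offers.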

To solve this problem, researchers have turned to a technique called "quantization". Quantization is like putting the AI brain on a diet: by mapping the model's weights and activations to a lower-bit data format, it both shrinks the model and speeds up inference. But the process also carries risks, because some accuracy may be lost.
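To make "mapping to a lower-bit format" concrete, here is a minimal sketch of symmetric, per-tensor, round-to-nearest INT8 quantization in NumPy. It is one of the simplest possible schemes, chosen for illustration rather than taken from LLMC; the function names and the random weight tensor are hypothetical.

```python
import numpy as np


def quantize_symmetric(x, bits=8):
    """Symmetric per-tensor quantization: one scale maps the largest |x| to the top integer level."""
    qmax = 2 ** (bits - 1) - 1                    # 127 for INT8
    max_abs = float(np.max(np.abs(x)))
    scale = max_abs / qmax if max_abs > 0 else 1.0
    dtype = np.int8 if bits <= 8 else np.int32
    q = np.clip(np.round(x / scale), -qmax, qmax).astype(dtype)
    return q, scale


def dequantize(q, scale):
    """Map integers back to approximate float values."""
    return q.astype(np.float32) * scale


rng = np.random.default_rng(0)
w = rng.normal(0.0, 0.02, size=4096).astype(np.float32)  # hypothetical FP32 weight tensor

q, scale = quantize_symmetric(w, bits=8)
w_hat = dequantize(q, scale)
print("bytes before:", w.nbytes, "after:", q.nbytes)      # bytes before: 16384 after: 4096
print("max abs error:", float(np.max(np.abs(w - w_hat))))
```

The rounding step is where accuracy is lost: each value can move by up to half a quantization step (`scale / 2`), and more elaborate methods mainly try to shrink that error where it matters most.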

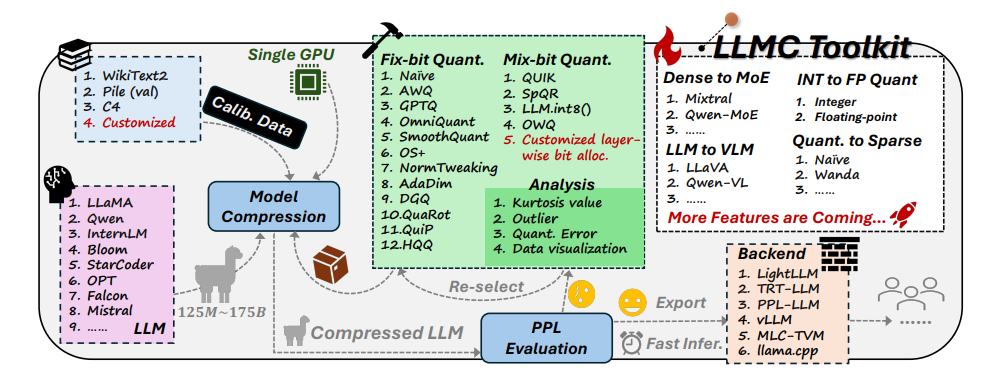

Faced with this challenge, researchers from Beihang University and SenseTime Technology jointly developed the LLMC toolkit. LLMC is like a personal weight-loss coach for AI: it helps researchers and developers find the most suitable slimming plan, making AI models lighter while keeping their "intelligence" as intact as possible.

The LLMC toolkit has three major features:

Diversity: LLMC provides 16 different quantization methods, like preparing 16 different diet plans for AI. Whether your model needs to slim down all over or only in certain parts, LLMC has an option for it.

Low cost: LLMC is frugal with resources and needs only modest hardware, even for very large models. For example, a single 40GB A100 GPU is enough to calibrate and evaluate OPT-175B, a model with 175 billion parameters. That is as efficient as training an Olympic champion on a home treadmill!

High compatibility: LLMC supports a variety of quantization settings and model formats, and it works with multiple inference backends and hardware platforms. It's like a universal trainer who can design a suitable plan no matter what equipment you use.

Practical applications of LLMC: making AI smarter and more energy-efficient

The LLMC toolkit provides a comprehensive and fair benchmark for large language model quantization. It examines three key factors (calibration data, algorithms, and data formats) to help users find the best-performing configuration.

In practice, LLMC can help researchers and developers combine suitable algorithms and low-bit formats more efficiently, making LLM compression more widely accessible. This means we may see more lightweight yet equally capable AI applications in the future.

The authors of the paper also shared some interesting findings and suggestions:

When selecting calibration data, choose a dataset whose vocabulary distribution is closer to that of the test data, just as people trying to lose weight should pick a diet that suits their own circumstances.
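The article does not say how "similar vocabulary distribution" is measured, so the sketch below assumes one simple option: compare relative word frequencies with cosine similarity and pick the closest candidate corpus. Whitespace splitting stands in for a real tokenizer, and all function names and corpora here are hypothetical.

```python
import math
from collections import Counter


def token_distribution(texts):
    """Relative frequency of lowercased, whitespace-separated tokens (crude tokenizer stand-in)."""
    counts = Counter(tok for text in texts for tok in text.lower().split())
    total = sum(counts.values())
    return {tok: c / total for tok, c in counts.items()}


def cosine_similarity(p, q):
    """Cosine similarity between two sparse frequency vectors stored as dicts."""
    dot = sum(v * q.get(tok, 0.0) for tok, v in p.items())
    norm_p = math.sqrt(sum(v * v for v in p.values()))
    norm_q = math.sqrt(sum(v * v for v in q.values()))
    return dot / (norm_p * norm_q) if norm_p and norm_q else 0.0


def pick_calibration_set(candidates, test_texts):
    """Return the candidate corpus whose token distribution is closest to the test data."""
    target = token_distribution(test_texts)
    scores = {name: cosine_similarity(token_distribution(texts), target)
              for name, texts in candidates.items()}
    return max(scores, key=scores.get), scores


candidates = {
    "encyclopedia": ["the city is the capital of the country", "the river flows through the city"],
    "code": ["def main(): return 0", "for i in range(10): print(i)"],
}
test_texts = ["the capital city of the region"]
best, scores = pick_calibration_set(candidates, test_texts)
print(best, scores)  # best == "encyclopedia"; "code" shares no tokens and scores 0.0
```

A real pipeline would use the model's own tokenizer and a larger sample, but the selection logic stays the same.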

On the algorithm side, they explored the impact of the three main techniques: transformation, clipping, and reconstruction. It's like comparing how different kinds of exercise affect weight loss.
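Of the three techniques, clipping is the easiest to show in a few lines. The sketch below is a generic MSE-based clipping search, offered as an illustration rather than LLMC's implementation: it shrinks the quantization range below the tensor's maximum magnitude and keeps whichever ratio reconstructs the weights best. The 4-bit setting, the ratio grid, the injected outlier, and the function names are all hypothetical choices.

```python
import numpy as np


def fake_quant(x, bits, clip_val):
    """Symmetric round-to-nearest quantize-dequantize; values beyond +/-clip_val saturate."""
    qmax = 2 ** (bits - 1) - 1
    scale = clip_val / qmax
    return np.clip(np.round(x / scale), -qmax, qmax) * scale


def search_clip_ratio(w, bits=4, ratios=None):
    """Grid-search a clipping ratio (fraction of max |w|) that minimizes reconstruction MSE."""
    if ratios is None:
        ratios = np.linspace(0.5, 1.0, 51)      # includes 1.0, i.e. no clipping
    max_abs = float(np.max(np.abs(w)))
    if max_abs == 0.0:
        return 1.0, 0.0, 0.0                    # all-zero tensor: nothing to clip
    errors = [float(np.mean((w - fake_quant(w, bits, r * max_abs)) ** 2)) for r in ratios]
    baseline = float(np.mean((w - fake_quant(w, bits, max_abs)) ** 2))
    best = int(np.argmin(errors))
    return float(ratios[best]), errors[best], baseline


rng = np.random.default_rng(0)
w = rng.normal(0.0, 1.0, size=4096)
w[0] = 20.0  # hypothetical single outlier that inflates the quantization range

ratio, err_clipped, err_unclipped = search_clip_ratio(w, bits=4)
print(f"best ratio={ratio:.2f}, MSE clipped={err_clipped:.4f}, unclipped={err_unclipped:.4f}")
```

Because the unclipped ratio 1.0 is part of the default grid, the search can never do worse than no clipping. With a single large outlier it usually settles on a smaller range, accepting a large error on one value in exchange for finer resolution on all the others.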

When comparing integer and floating-point quantization, they found that floating-point quantization has the edge in more complex situations, while integer quantization can be the better choice in some specific cases. It's like needing different exercise intensities at different stages of weight loss.
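The difference between the two formats comes down to where the representable values sit. A 4-bit integer grid is evenly spaced, while a 4-bit float such as E2M1 (1 sign, 2 exponent, and 1 mantissa bit, the FP4 element type in the OCP Microscaling spec) packs values densely near zero and sparsely at large magnitudes. The sketch below assumes simple per-tensor max scaling and measures the error of each grid on two synthetic distributions. It does not reproduce the paper's experiments, and the names are hypothetical.

```python
import numpy as np

# Non-negative magnitudes representable by each 4-bit format (the sign is handled separately).
INT4_SYM = np.arange(8, dtype=np.float64)                     # 0..7, i.e. symmetric INT4 in [-7, 7]
FP4_E2M1 = np.array([0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0])  # E2M1 float magnitudes


def quantize_to_grid(x, grid):
    """Per-tensor max scaling, then snap each magnitude to the nearest grid point and rescale."""
    flat = np.asarray(x, dtype=np.float64).ravel()
    max_abs = float(np.max(np.abs(flat)))
    scale = max_abs / grid[-1] if max_abs > 0 else 1.0
    mags = np.abs(flat) / scale
    nearest = np.abs(mags[:, None] - grid[None, :]).argmin(axis=1)
    return (np.sign(flat) * grid[nearest] * scale).reshape(np.shape(x))


def mse(a, b):
    return float(np.mean((np.asarray(a) - np.asarray(b)) ** 2))


rng = np.random.default_rng(0)
samples = {
    "gaussian": rng.normal(0.0, 1.0, size=4096),
    "heavy-tailed (Student t, df=3)": rng.standard_t(3, size=4096),
}
for name, x in samples.items():
    err_int = mse(x, quantize_to_grid(x, INT4_SYM))
    err_fp = mse(x, quantize_to_grid(x, FP4_E2M1))
    print(f"{name}: INT4 MSE={err_int:.4f}, FP4 MSE={err_fp:.4f}")
```

Which grid wins depends on the value distribution and the scaling scheme, which is consistent with the authors' observation that neither format is best in every case.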

The advent of the LLMC toolkit marks a new trend in the AI field. It not only gives researchers and developers a powerful assistant but also points the way for future AI development. With LLMC, we can expect more lightweight, high-performance AI applications that let AI truly enter our daily lives.

Project address: https://github.com/ModelTC/llmc

Paper address: https://arxiv.org/pdf/2405.06001

All in all, the LLMC toolkit offers an effective answer to the resource consumption problem of large language models. It lowers the cost and barrier to running models while improving their efficiency and usability, injecting new vitality into the popularization and development of AI. In the future, we can look forward to more lightweight AI applications built on LLMC, bringing more convenience to our lives.