The Shanghai Artificial Intelligence Laboratory recently announced that its InternLM-XComposer multi-modal large model has been upgraded to version 2.5 (IXC-2.5). The new version advances long-context understanding, vision-language understanding, and application coverage, improves text-image comprehension and composition, and surpasses existing open-source models on multiple benchmarks. On some metrics it is comparable to GPT-4V and Gemini Pro. IXC-2.5 shows strong performance and broad application potential, setting a new reference point for the development of multi-modal large models.

Recently, the InternLM-XComposer multi-modal large model was upgraded to version 2.5. Developed by the Shanghai Artificial Intelligence Laboratory, the model brings substantial advances to text-image understanding and creative applications through its long-context input and output capabilities.

IXC-2.5 can handle long contexts of up to 96K tokens, having been trained on interleaved image-text contexts of 24K tokens. This long-context capability allows IXC-2.5 to perform well in tasks that require extensive input and output context.

Compared with the previous version, IXC-2.5 has three major upgrades in visual language understanding:

Ultra-high-resolution understanding: IXC-2.5 supports high-resolution images of any aspect ratio through its native 560×560 ViT visual encoder.

Fine-grained video understanding: IXC-2.5 treats a video as an ultra-high-resolution composite image made up of tens to hundreds of frames, capturing details through dense sampling and higher resolution.

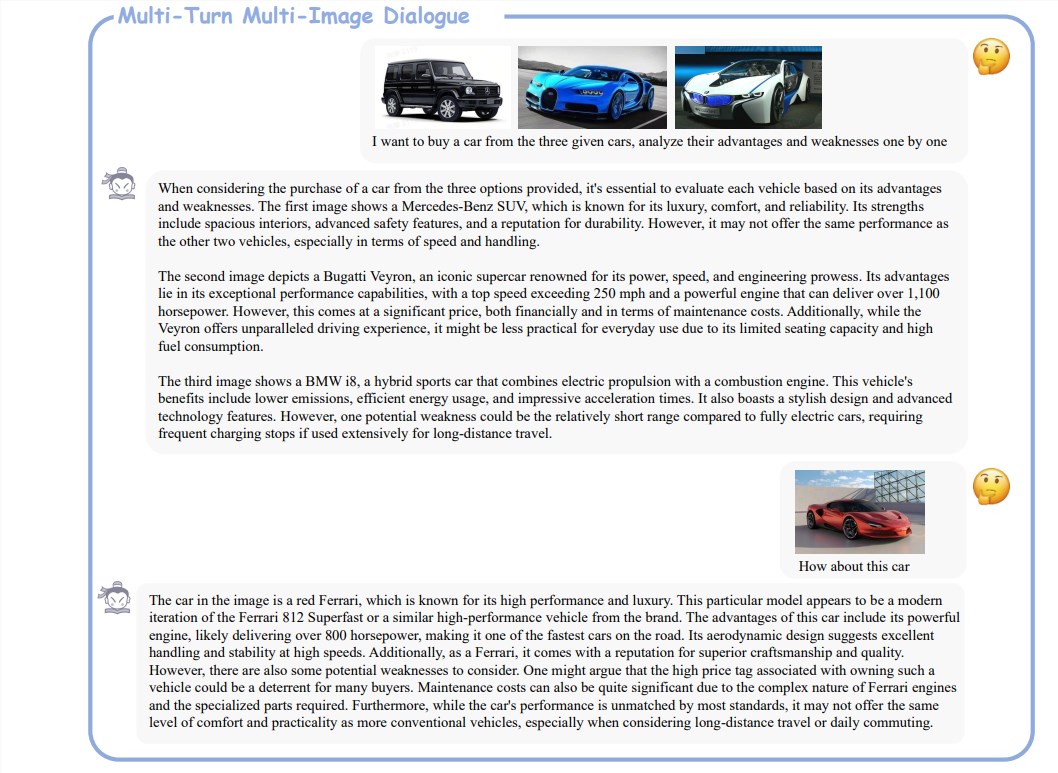

Multi-turn, multi-image dialogue: IXC-2.5 supports free-form multi-turn, multi-image dialogue for natural interaction with humans.
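To make the first two upgrades more concrete, here is a minimal Python sketch of the arithmetic involved: how an image of arbitrary aspect ratio might be covered by 560×560 crops, and how video frames might be laid out as one large composite canvas. It is an illustration only, not the IXC-2.5 implementation. The function names `tile_grid` and `video_canvas`, the `max_tiles` budget, the 10% shrink step, and the near-square frame layout are all assumptions made for this example.

```python
import math

TILE = 560  # side length of the native ViT input, as stated in the article


def tile_grid(width: int, height: int, max_tiles: int = 24) -> tuple[int, int]:
    """Return (cols, rows) of TILE x TILE crops that cover an image.

    max_tiles is a hypothetical budget: the image is shrunk in 10% steps
    until the grid fits within it. Aspect ratio is roughly preserved.
    """
    if width <= 0 or height <= 0 or max_tiles < 1:
        raise ValueError("width, height and max_tiles must be positive")
    scale = 1.0
    while True:
        cols = max(1, math.ceil(width * scale / TILE))
        rows = max(1, math.ceil(height * scale / TILE))
        if cols * rows <= max_tiles:
            return cols, rows
        scale *= 0.9  # assumed shrink step, chosen only for simplicity


def video_canvas(num_frames: int) -> tuple[int, int]:
    """Return (width, height) in pixels of a near-square canvas that holds
    every sampled frame as one TILE x TILE cell (hypothetical layout)."""
    if num_frames < 1:
        raise ValueError("num_frames must be at least 1")
    cols = math.isqrt(num_frames - 1) + 1  # integer ceil(sqrt(num_frames))
    rows = math.ceil(num_frames / cols)
    return cols * TILE, rows * TILE


if __name__ == "__main__":
    print(tile_grid(1120, 560))  # wide image
    print(tile_grid(560, 1680))  # tall image
    print(video_canvas(32))      # 32 sampled frames
```

The point of the sketch is that both cases reduce to the same problem: the visual encoder always sees fixed-size crops, and resolution or frame count only changes how many crops there are. That is also why dense video sampling puts pressure on context length.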

In addition to improvements in understanding, IXC-2.5 extends to two applications in text-image composition, using additional LoRA parameters:

Creating web pages: Given text-image instructions, IXC-2.5 can write HTML, CSS, and JavaScript source code to build web pages.

Writing high-quality text-image articles: IXC-2.5 uses specially designed Chain-of-Thought (CoT) and Direct Preference Optimization (DPO) techniques to significantly improve the quality of the written content.

IXC-2.5 was evaluated on 28 benchmarks and outperformed existing open-source state-of-the-art models on 16 of them. It also matched or surpassed GPT-4V and Gemini Pro on 16 key tasks. These results demonstrate the strong performance and broad application potential of IXC-2.5.

Paper address: https://arxiv.org/pdf/2407.03320

Project address: https://github.com/InternLM/InternLM-XComposer

All in all, the release of IXC-2.5 marks significant progress in multi-modal large model technology, and its strong performance and range of application scenarios bode well for the development of artificial intelligence. We look forward to further feature updates.