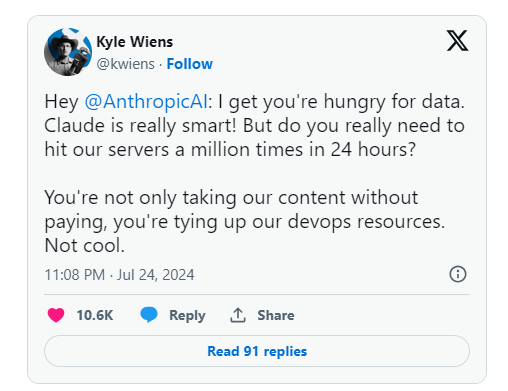

Anthropic's ClaudeBot web crawler repeatedly hit the iFixit website over a 24-hour period, apparently in violation of iFixit's terms of use.

iFixit CEO Kyle Wiens said that this was not only unauthorized use of content but also a drain on the company's development resources. In response, iFixit has added a crawl-delay extension to its robots.txt file to restrict crawler access.
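For readers unfamiliar with the mechanism, the sketch below shows how a well-behaved crawler might read a `Crawl-delay` rule using Python's standard `urllib.robotparser` module. The robots.txt content, the 10-second delay, and the `example.com` URLs are illustrative assumptions, not iFixit's actual configuration. `Crawl-delay` is a non-standard extension, and honoring it is voluntary on the crawler's side.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration only;
# the article does not quote iFixit's actual file.
ROBOTS_TXT = """\
User-agent: ClaudeBot
Crawl-delay: 10
Disallow: /private/

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Seconds the named crawler is asked to wait between requests.
delay = parser.crawl_delay("ClaudeBot")

# Path-based rules are evaluated per user agent.
allowed_public = parser.can_fetch("ClaudeBot", "https://example.com/Guide/1")
allowed_private = parser.can_fetch("ClaudeBot", "https://example.com/private/x")

# Crawlers without a matching entry fall back to the "*" entry,
# which sets no delay in this example.
other_delay = parser.crawl_delay("SomeOtherBot")

print(delay, allowed_public, allowed_private, other_delay)
```

Nothing in this file is enforced by the server: a crawler that never reads robots.txt, or chooses to ignore it, is not slowed down at all.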

In addition to iFixit, Read the Docs co-founder Eric Holscher and Freelancer.com CEO Matt Barrie reported that their websites had also been heavily scraped by Anthropic's crawler.

In recent months, Reddit posts have reported a sharp increase in Anthropic's web scraping activity. In April this year, an outage of the Linux Mint web forum was also attributed to ClaudeBot's crawling activity.

Many AI companies, such as OpenAI, rely on robots.txt files as the way for sites to opt out of crawling, but the format does not give website owners a flexible way to define which content may and may not be crawled. Another AI company, Perplexity, was found to be ignoring robots.txt exclusion rules entirely.

Still, robots.txt is one of the few options many companies have to keep their data from being used as AI training material, and Reddit has also recently taken action against web crawlers.