What would happen if an AI model were retrained on images it had generated itself? Researchers at Stanford University and the University of California, Berkeley, recently ran such an experiment, and the results were surprising.

The researchers found that when AI image-generation models were retrained on images they had generated themselves, the models produced highly distorted images. Worse, the distortion was not limited to the text prompts used for retraining. Once a model is "contaminated", it is difficult for it to recover fully, even if it is later retrained on real images only.

The starting point for the experiment was Stable Diffusion (SD), an open-source model. The researchers first selected 70,000 high-quality face images from the FFHQ face dataset and automatically classified them. They then used these real images as input to Stable Diffusion to generate 900 images matching the characteristics of a specific group of people.

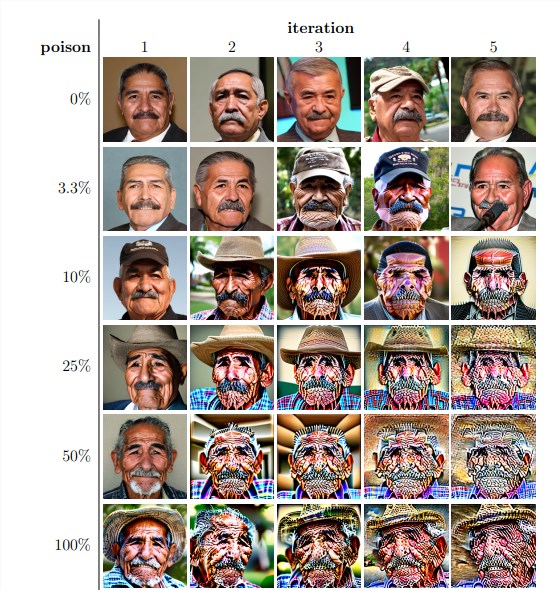

Next, the researchers used these generated images to retrain the model iteratively. They found that regardless of the proportion of self-generated images in the retraining dataset, the model eventually collapsed and the quality of its generated images dropped dramatically. Even when only 3% of the retraining data was self-generated, model collapse still occurred.
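To make the idea of a "proportion of self-generated images" concrete, here is a minimal sketch of how such a mixed retraining set could be assembled. It is an illustration only, not the researchers' code: the function name `build_retraining_set`, the file names, and the set size of 900 are all assumptions chosen for the example.

```python
import random


def build_retraining_set(real_images, generated_images,
                         generated_fraction, total_size, seed=0):
    """Return a shuffled list of `total_size` items in which
    `generated_fraction` of the items come from `generated_images`.

    Hypothetical helper for illustration; not taken from the study.
    """
    if not 0.0 <= generated_fraction <= 1.0:
        raise ValueError("generated_fraction must be between 0 and 1")

    rng = random.Random(seed)  # fixed seed so the mix is reproducible
    n_generated = round(total_size * generated_fraction)
    n_real = total_size - n_generated

    if n_generated > len(generated_images) or n_real > len(real_images):
        raise ValueError("not enough images to build the requested mix")

    # Sample without replacement from each pool, then shuffle the result.
    mixed = (rng.sample(generated_images, n_generated)
             + rng.sample(real_images, n_real))
    rng.shuffle(mixed)
    return mixed


# Placeholder file names standing in for real and self-generated images.
real = [f"real_{i}.png" for i in range(1000)]
generated = [f"generated_{i}.png" for i in range(100)]

mixed = build_retraining_set(real, generated,
                             generated_fraction=0.03, total_size=900)
n_generated = sum(name.startswith("generated_") for name in mixed)
print(n_generated, len(mixed))
```

With a 3% fraction, only 27 of the 900 items in this toy set are self-generated, which gives a sense of how small the share reported in the study is.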

The results show that the baseline version of Stable Diffusion generates images that are consistent with the text prompts and of high visual quality. As the model was iteratively retrained, however, the generated images began to exhibit semantic inconsistencies and visual distortions. The researchers also found that model collapse reduced not only image quality but also the diversity of the generated images.

The researchers also ran controlled experiments that tried to mitigate model collapse by adjusting the color histograms of the generated images and by removing low-quality images. The results show that these measures did not prevent model collapse.

The researchers also explored whether a "contaminated" model could recover through retraining. They found that although image quality recovered in some cases after multiple retraining iterations, signs of model collapse remained. This suggests that once a model is "contaminated", the effects may be long-lasting or even irreversible.

This study reveals an important issue: today's popular diffusion-based text-to-image systems are highly sensitive to data "contamination". Such contamination can happen unintentionally, for example when images are scraped indiscriminately from online sources. It could also result from a targeted attack, such as deliberately planting "tainted" data on a website.

Faced with these challenges, researchers have proposed several possible solutions, such as using image authenticity detectors to exclude AI-generated images, or adding watermarks to generated images. Although imperfect, these methods may in combination significantly reduce the risk of data "contamination."
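As a rough sketch of how the two safeguards might be combined when assembling a training set, the snippet below drops any image that either carries a watermark or is scored as likely AI-generated. Everything here is hypothetical: `filter_training_images`, the `ai_score` and `has_watermark` callables, the 0.5 threshold, and the toy records stand in for whatever detector and watermark check a real pipeline would use.

```python
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")


def filter_training_images(images: Iterable[T],
                           ai_score: Callable[[T], float],
                           has_watermark: Callable[[T], bool],
                           threshold: float = 0.5) -> List[T]:
    """Keep only images that pass both checks.

    ai_score      -- hypothetical detector returning the estimated
                     probability (0..1) that an image is AI-generated
    has_watermark -- hypothetical check for a generator watermark
    threshold     -- assumed cutoff; images scoring at or above it are dropped
    """
    return [img for img in images
            if not has_watermark(img) and ai_score(img) < threshold]


# Toy records standing in for scraped images; the scores and flags are
# made up so the example runs without a real detector.
candidates = [
    {"path": "a.png", "score": 0.02, "watermark": False},
    {"path": "b.png", "score": 0.91, "watermark": False},  # flagged by detector
    {"path": "c.png", "score": 0.10, "watermark": True},   # flagged by watermark
    {"path": "d.png", "score": 0.30, "watermark": False},
]

kept = filter_training_images(
    candidates,
    ai_score=lambda rec: rec["score"],
    has_watermark=lambda rec: rec["watermark"],
)
kept_paths = [rec["path"] for rec in kept]
print(kept_paths)
```

Neither check is reliable on its own: a detector can miss images and a watermark can be absent or removed, which is why the two are applied together in this sketch.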

This study is a reminder that the development of AI technology is not without risk. We need to handle AI-generated content more carefully to ensure it does not have long-term negative effects on our models and datasets. Future research needs to explore how to make AI systems more resilient to this type of data "contamination", or to develop techniques that speed up model "healing."