Shortly after Meta released Llama 3.1, Mistral AI launched its flagship model, Mistral Large 2, with 123 billion parameters, a 128k context window, and performance comparable to Llama 3.1. The model supports many natural languages and programming languages and is designed for efficient, cost-effective single-node inference. This article looks at Mistral Large 2's benchmark results and how to access it, covers its multilingual, coding, and instruction-following capabilities, and considers its potential for commercial and research use.

A day after Meta announced Llama 3.1, billed as the strongest open-source model, Mistral AI launched its flagship model, Mistral Large 2, early this morning. The new model has 123 billion parameters and a 128k context window. On these specifications, it looks comparable to Llama 3.1.

Mistral Large 2 model details

Mistral Large 2 has a 128k context window and supports dozens of languages, including French, German, Spanish, Italian, Portuguese, Arabic, Hindi, Russian, Chinese, Japanese and Korean, as well as more than 80 programming languages, including Python, Java, C, C++, JavaScript and Bash.

Mistral Large 2 is designed for single-node inference and targets long-context applications: at 123 billion parameters, it can run at high throughput on a single node. It is released under the Mistral Research License, which covers research and non-commercial use; users with commercial needs must contact Mistral AI to obtain a commercial license.
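To make the single-node claim concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need. Only the 123 billion parameter count comes from the article; the bytes-per-parameter figures, the 8 x 80 GB node, and the 80% headroom factor are illustrative assumptions, and the helper names are hypothetical. KV cache and activation memory for long contexts are not modeled.

```python
# Back-of-the-envelope weight memory for a 123B-parameter model.
PARAMS = 123e9  # parameter count stated in the article

# Bytes per parameter for common precisions (assumed, not from the article).
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Memory needed for the weights alone, in decimal gigabytes."""
    return params * bytes_per_param / 1e9


def fits_on_node(
    params: float,
    bytes_per_param: float,
    gpus: int = 8,             # assumed node size
    gb_per_gpu: float = 80.0,  # assumed accelerator memory
    headroom: float = 0.8,     # assumed share of memory usable for weights
) -> bool:
    """True if the weights fit in the share of node memory reserved for them."""
    budget_gb = gpus * gb_per_gpu * headroom
    return weight_memory_gb(params, bytes_per_param) <= budget_gb


for name, nbytes in BYTES_PER_PARAM.items():
    size = weight_memory_gb(PARAMS, nbytes)
    print(f"{name}: {size:.1f} GB, fits on assumed node: {fits_on_node(PARAMS, nbytes)}")
```

Under these assumptions, 16-bit weights take roughly 246 GB, which is well within the memory of a single multi-GPU node but too large for one or two 80 GB accelerators.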

Overall performance:

In terms of performance, Mistral Large 2 sets a new bar on evaluation metrics, notably reaching 84.0% accuracy on the MMLU benchmark, which reflects a strong balance between performance and serving cost.

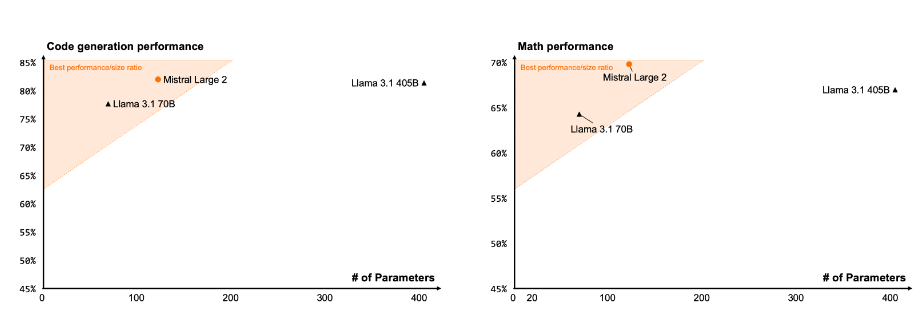

Code and Reasoning

Drawing on the experience of training Codestral 22B and Codestral Mamba, Mistral Large 2 performs well on code tasks, at a level comparable to leading models such as GPT-4o, Claude 3 Opus and Llama 3 405B.

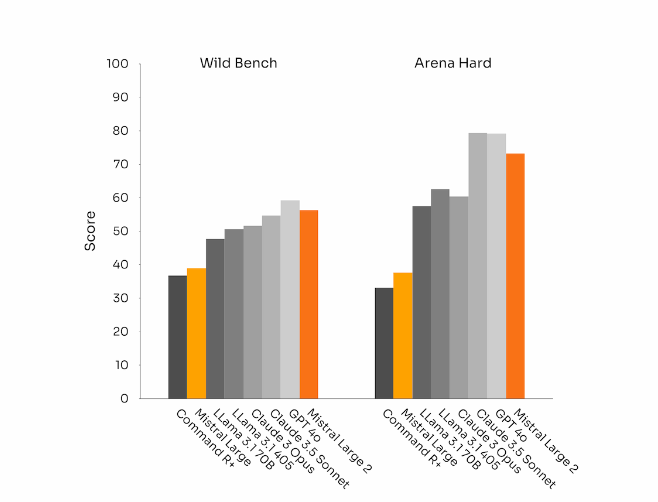

Instruction following and alignment

Mistral Large 2 has also made significant progress in instruction following and conversational ability, and it handles complex, multi-turn dialogues with greater flexibility. On some benchmarks, generating longer responses tends to improve scores. In many commercial applications, however, conciseness is crucial: shorter generations make interactions faster and inference more cost-effective.

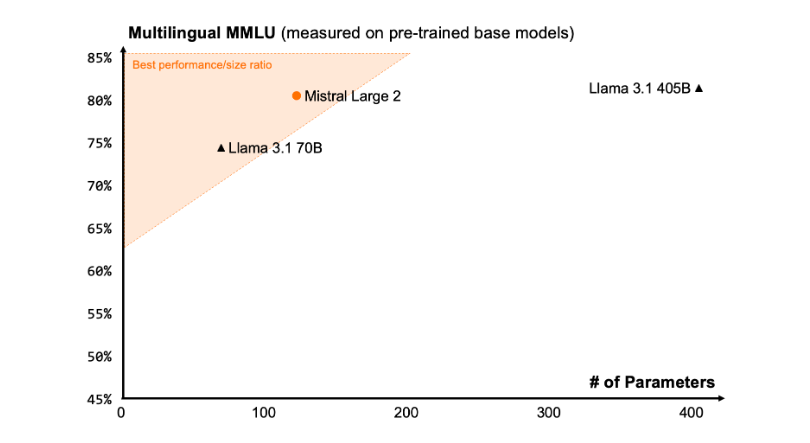

Linguistic diversity

Mistral Large 2 was trained on a large amount of multilingual data and performs especially well in English, French, German, Spanish, Italian, Portuguese, Dutch, Russian, Chinese, Japanese, Korean, Arabic and Hindi. Its results on the multilingual MMLU benchmark were reported alongside those of the previous Mistral Large, the Llama 3.1 models, and Cohere's Command R+.

Tool usage and function calls

Mistral Large 2 comes with enhanced function calling and retrieval skills and has been trained to execute both parallel and sequential function calls proficiently, which makes it well suited to complex business applications.
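As a rough illustration of what handling parallel function calls involves on the application side, the sketch below runs every tool call from a single model turn and packages the results as messages to send back. The message shapes follow the widely used JSON-schema tool convention and are an assumption here, not a statement of Mistral's exact API; the two business functions, the registry, and `run_tool_calls` are hypothetical names that return canned data.

```python
import json


def get_order_status(order_id: str) -> dict:
    """Hypothetical business function; returns canned data for illustration."""
    return {"order_id": order_id, "status": "shipped"}


def get_weather(city: str) -> dict:
    """Hypothetical business function; returns canned data for illustration."""
    return {"city": city, "forecast": "sunny"}


# Tool description that would be sent with a request (assumed schema shape).
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_order_status",
            "description": "Look up the shipping status of an order.",
            "parameters": {
                "type": "object",
                "properties": {"order_id": {"type": "string"}},
                "required": ["order_id"],
            },
        },
    }
]

# Maps tool names to the local Python functions that implement them.
REGISTRY = {"get_order_status": get_order_status, "get_weather": get_weather}


def run_tool_calls(tool_calls: list[dict]) -> list[dict]:
    """Execute each requested call and build one 'tool' message per result."""
    messages = []
    for call in tool_calls:
        fn = call["function"]
        args = json.loads(fn["arguments"])  # arguments arrive as a JSON string
        result = REGISTRY[fn["name"]](**args)
        messages.append(
            {
                "role": "tool",
                "tool_call_id": call["id"],
                "name": fn["name"],
                "content": json.dumps(result),
            }
        )
    return messages
```

In a sequential flow, the returned messages would be appended to the conversation and sent back to the model, which may then request further calls based on the results.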

How to use:

Users can currently access Mistral Large 2 on la Plateforme (https://console.mistral.ai/) and try it on le Chat. It is released as version 24.07 (Mistral applies a YY.MM versioning scheme to all of its models), under the API name mistral-large-2407. The weights for the instruct model are also available on HuggingFace (https://huggingface.co/mistralai/Mistral-Large-Instruct-2407).
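A minimal sketch of calling the model by its API name, using only the Python standard library. The model name mistral-large-2407 comes from the article; the endpoint URL, the payload fields, the response shape, and the `MISTRAL_API_KEY` environment variable are assumptions that should be checked against Mistral's API documentation. The helper names `build_request` and `ask` are hypothetical.

```python
import json
import os
import urllib.request

# Assumed chat-completions endpoint; verify against the official API docs.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "mistral-large-2407"  # API name given in the article


def build_request(prompt: str, api_key: str, max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) a chat request for a single user prompt."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt and return the first reply (assumed response shape)."""
    api_key = os.environ["MISTRAL_API_KEY"]  # assumed environment variable name
    with urllib.request.urlopen(build_request(prompt, api_key)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


# Example (requires network access and a valid key):
#   print(ask("Summarize the Mistral Research License in one sentence."))
```

Keeping request construction separate from sending makes it easy to inspect or log the payload before any network call is made.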

The lineup on la Plateforme centers on two general-purpose models, Mistral Nemo and Mistral Large, and two specialist models, Codestral and Embed. As older models are phased out on la Plateforme, all Apache-licensed models (Mistral 7B, Mixtral 8x7B and 8x22B, Codestral Mamba, Mathstral) remain available for deployment and fine-tuning with the mistral-inference and mistral-finetune SDKs.

Starting today, fine-tuning capabilities on la Plateforme are being extended: they are now available for Mistral Large, Mistral Nemo and Codestral.

Mistral AI has also partnered with several leading cloud service providers to make Mistral Large 2 available globally, notably through Vertex AI on Google Cloud Platform.

**Highlights:**

Mistral Large 2 has a 128k context window and supports dozens of languages and more than 80 programming languages.

It achieves 84.0% accuracy on the MMLU benchmark, balancing strong performance with serving cost.

Users can access the new model through la Plateforme, and it is also available on major cloud service platforms.

All in all, Mistral Large 2 is a strong competitor among large language models, combining solid performance, broad language support and convenient access, and it opens up new possibilities for research and commercial applications. The availability of its weights under a research license also supports further innovation in the field of AI.