With the explosion of AI-generated content, social media platforms face an unprecedented challenge: how to ensure that users can tell true information from false. Instagram head Adam Mosseri recently published a series of posts on Threads expressing his concerns about AI-generated content and laying out how Instagram and Meta plan to respond. He urged users to be more vigilant and to learn to identify AI-generated content, and called on platforms to strengthen content oversight and make information more transparent and credible.

Mosseri's central message is that social media users need to be more vigilant as the volume of AI-generated content grows.

He pointed out that the images and information users see online may not be real, because AI can already generate content that closely resembles reality. To judge whether information is authentic, Mosseri said, users should consider the source of the content, and social platforms have a responsibility to help them do so.

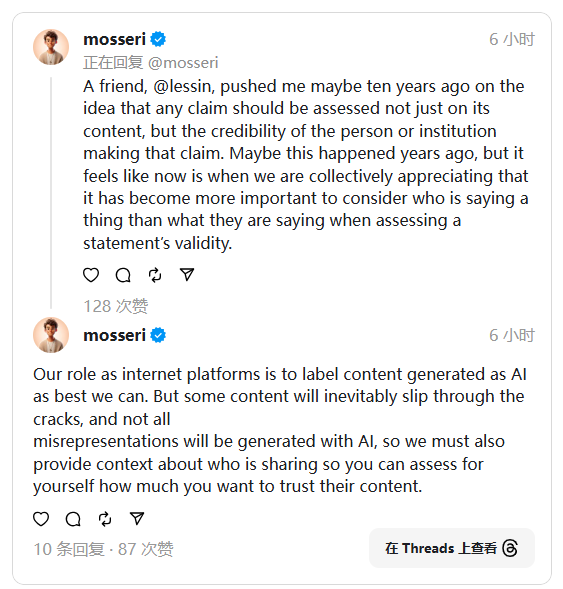

Mosseri said that the role of social media platforms is to label AI-generated content as best they can so that users can identify it. However, he admitted that some content will be missed and will not be labeled in time. For that reason, he stressed that platforms also need to give users background on who is sharing a piece of content, so that users can decide how much to trust it. His views reflect deep concern about the credibility of what people see on social media.

At this stage, Meta (Instagram's parent company) does not provide much of this contextual information to help users judge whether content is authentic. Still, the company recently hinted that it may make significant changes to its content rules. Such changes could increase users' trust in the information they see and help them cope with the challenges posed by AI.

Mosseri drew a comparison with AI-driven search engines: just as users should confirm that a chatbot's answers are reliable before trusting them, they should check the source of a posted claim or image, since information from reputable accounts is more trustworthy.

The approach to content moderation that Mosseri describes is closer to user-driven moderation systems, such as Community Notes on X (formerly Twitter) and YouTube's content filters. It is unclear whether Meta will introduce similar features, but given the company's history of borrowing features from other platforms, its future content moderation policies are worth watching.

All in all, Instagram and Meta are actively exploring ways to address the challenges posed by AI-generated content. Meeting those challenges will take effort from the platforms themselves, and it will also require users to sharpen their own judgment so that both sides can help keep online information credible and safe. How content oversight evolves from here deserves continued attention.