As artificial intelligence advances rapidly, AI-generated content is flooding the Internet, and telling real from fake has become a pressing problem. Adam Mosseri, the head of Instagram, recently addressed the issue, urging users to stay vigilant and treat online images with caution, especially realistic AI-generated content. He stressed that platforms are responsible for helping users identify such content, and called on users to sharpen their judgment, pay attention to where information comes from, and avoid being misled.

In a post on social media, Mosseri said users should be wary of the images they see online, particularly AI-generated content that can easily be mistaken for reality. Because AI has become far better at producing realistic content, he argued, users should be cautious and consider the source, and social platforms have a responsibility to help them do so.

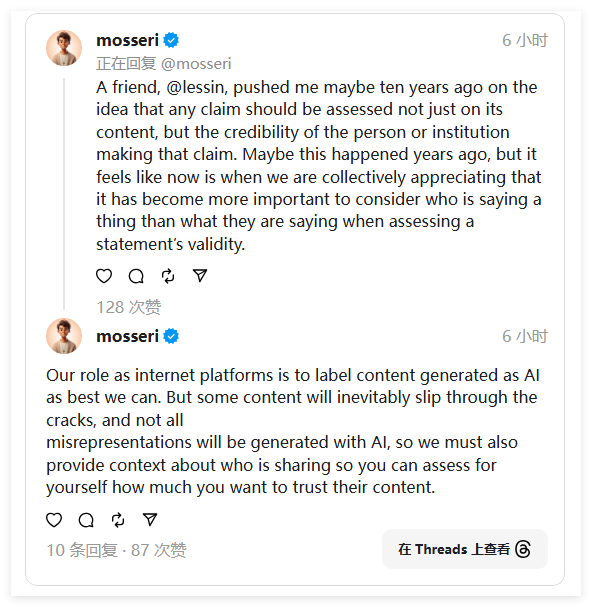

"As an internet platform, it is our job to label AI-generated content as much as possible," he wrote. However, Mosseri acknowledged that some content will inevitably slip past these labels. The platform therefore also needs to provide context about who is sharing the content, so that users can judge for themselves how far to trust it.

Mosseri compared this to using AI tools: just as people should keep in mind that chatbots and AI-powered search engines can give incorrect answers, they should check whether an image or claim comes from a trustworthy account before believing it. Meta's platforms do not yet offer the kind of context Mosseri described, although the company has recently hinted at major changes to its content rules.

The system he describes sounds more like user-led moderation, similar to Community Notes on X and YouTube or Bluesky's custom moderation filters. It is unclear whether Meta will introduce comparable features, but the company has recently taken cues from Bluesky, so similar changes may follow.

Mosseri's statement is a reminder that in an era of information overload, media literacy and discernment matter more than ever. Social platforms, for their part, need to take on more responsibility and actively look for effective ways to help users recognize AI-generated content and respond to the challenges it brings, so that platforms and users together can build a safer and more reliable online environment.