In recent years, large language models (LLMs) have made significant progress in natural language processing, but processing text token by token limits how well they handle long contexts and multilingual and multimodal applications. To address these shortcomings, Meta AI has proposed a new approach: Large Concept Models (LCMs). LCMs model language in SONAR, a high-dimensional sentence embedding space that supports multiple languages and modalities. They also adopt a hierarchical architecture intended to improve the coherence of long-form content and to allow local edits, with the goal of improving the model's efficiency and generalization.

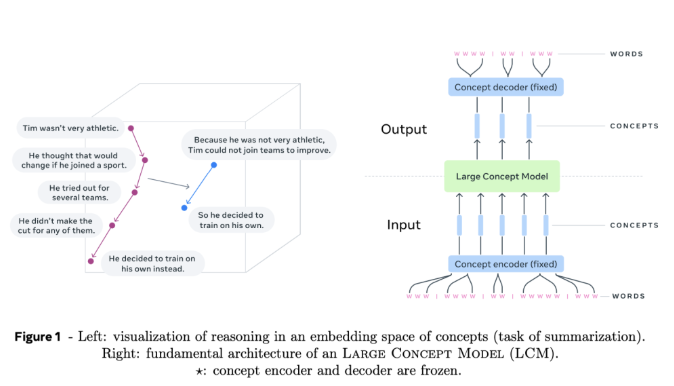

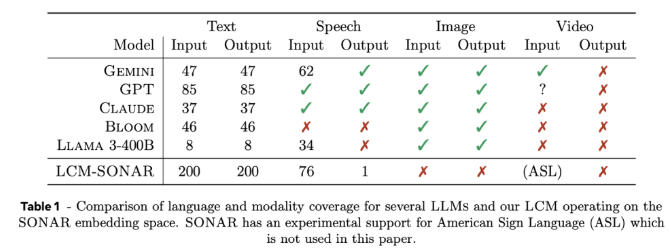

Large Concept Models (LCMs) represent an important shift from the traditional LLM architecture. They introduce two major innovations. First, LCMs model language in a high-dimensional embedding space rather than over discrete tokens. This embedding space, called SONAR, is designed to support more than 200 languages and multiple modalities, including text and speech, so processing is independent of language and modality. Second, because LCMs operate at the semantic level rather than on language-specific tokens, they show strong zero-shot generalization across languages and modalities.

At the core of LCMs are concept encoders and decoders: components that map input sentences into SONAR's embedding space and decode embeddings back into natural language or other modalities. These components are kept frozen, which keeps the design modular and makes it easy to add new languages or modalities without retraining the entire model.
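
The encode, process, decode flow described here can be illustrated with a minimal sketch. Everything in it is a hypothetical stand-in, not the SONAR API: `toy_encode` is a hash-based placeholder for a frozen sentence encoder, `toy_decode` is a nearest-neighbor lookup standing in for a frozen decoder, and the 16-dimensional vectors are far smaller than real sentence embeddings. The point is only that each sentence becomes one fixed-size vector (a "concept") and that the encoder and decoder never change.

```python
import hashlib
import math
import re

DIM = 16  # toy dimensionality (assumption); real sentence embeddings are much larger


def split_sentences(text):
    """Naive splitter: each sentence is treated as one 'concept'."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def toy_encode(sentence):
    """Hypothetical frozen encoder: a deterministic, hash-based unit vector."""
    digest = hashlib.sha256(sentence.encode("utf-8")).digest()
    vec = [b / 255.0 - 0.5 for b in digest[:DIM]]
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def toy_decode(embedding, candidates):
    """Hypothetical frozen decoder: pick the candidate sentence whose
    embedding has the highest cosine similarity to the input vector."""
    def cosine(a, b):
        return sum(x * y for x, y in zip(a, b))  # inputs are unit vectors
    return max(candidates, key=lambda s: cosine(embedding, toy_encode(s)))


document = (
    "LCMs operate on sentences. Each sentence becomes one vector. "
    "The model never sees tokens."
)
sentences = split_sentences(document)
concepts = [toy_encode(s) for s in sentences]          # text -> concept space
round_trip = [toy_decode(c, sentences) for c in concepts]  # concept space -> text
```

Because the model in the middle only ever sees vectors, swapping in an encoder for another language or modality would not require changing it, which is the modularity argument made above.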

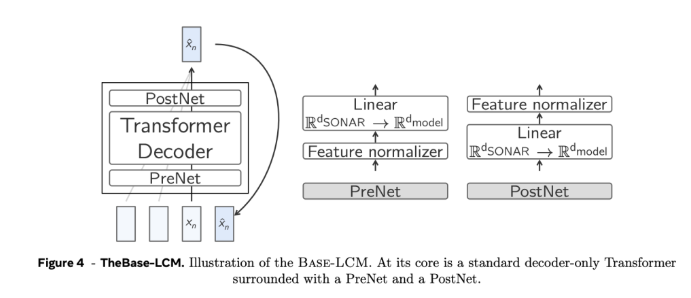

In terms of technical details, LCMs adopt a hierarchical architecture modeled on the human reasoning process, which improves the coherence of long-form content and allows local edits without disturbing the overall context. For generation, LCMs use diffusion models that predict the next SONAR embedding conditioned on the preceding embeddings. The experiments covered two architectures, one-tower and two-tower. The two-tower architecture handles context encoding and denoising in separate components, which improves efficiency.
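
The control flow of two-tower generation can be sketched as follows: encode the context once, then refine a noisy candidate over several denoising steps. This is a toy illustration under stated assumptions, not Meta AI's implementation. `contextualize` uses a plain average where the real model would use a trained network, and `denoise_step` is a hand-written interpolation rather than a learned denoiser, so the "prediction" simply converges to the context average. All names and constants are hypothetical.

```python
import random

DIM = 8                 # toy embedding size (assumption)
NUM_DENOISE_STEPS = 10  # toy number of refinement steps (assumption)


def contextualize(previous_embeddings):
    """Tower 1 (toy): summarize the preceding concepts into one context vector.
    A plain average stands in for a trained context encoder."""
    n = len(previous_embeddings)
    return [sum(e[i] for e in previous_embeddings) / n for i in range(DIM)]


def denoise_step(noisy, context, step, total_steps):
    """Tower 2 (toy): move the noisy candidate toward the context-derived
    estimate. The weight reaches 1.0 on the final step."""
    weight = 1.0 / (total_steps - step)
    return [x + weight * (c - x) for x, c in zip(noisy, context)]


def generate_next_concept(previous_embeddings, rng):
    """Predict one new embedding from all preceding embeddings."""
    context = contextualize(previous_embeddings)  # computed once per concept
    candidate = [rng.gauss(0.0, 1.0) for _ in range(DIM)]  # start from noise
    for step in range(NUM_DENOISE_STEPS):  # only the denoiser runs repeatedly
        candidate = denoise_step(candidate, context, step, NUM_DENOISE_STEPS)
    return candidate


rng = random.Random(0)
# Three made-up "prompt" concepts, then two generated ones.
sequence = [[rng.uniform(-1.0, 1.0) for _ in range(DIM)] for _ in range(3)]
for _ in range(2):
    sequence.append(generate_next_concept(sequence, rng))
```

The sketch shows where the efficiency claim comes from: the context tower runs once per generated concept, while only the denoising tower runs once per diffusion step.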

Experimental results show that diffusion-based two-tower LCMs are competitive on multiple tasks. For example, in multilingual summarization, LCMs outperform baseline models in the zero-shot setting, demonstrating their adaptability. LCMs are also efficient and accurate on shorter sequences, as reflected in significant improvements on the related metrics.

Meta AI's Large Concept Model offers a promising alternative to traditional token-level language models, addressing some key limitations of existing methods through high-dimensional concept embeddings and modality-independent processing. As research into this architecture deepens, LCMs are expected to extend what language models can do and to provide a more scalable and adaptable approach to AI-driven communication.

Project page: https://github.com/facebookresearch/large_concept_model

All in all, the LCM approach proposed by Meta AI offers an innovative way to address the limitations of traditional LLMs. Its strengths in multilingual and multimodal processing, together with its efficient architecture, give it considerable potential in natural language processing, and it deserves continued attention and in-depth research.