Google DeepMind's latest Project Astra update shows prototype glasses equipped with real-time multimodal AI. The glasses run on Android XR, Google's new operating system built for visual computing, and are being tested by a small number of users to explore what combining AI and AR could make possible. The project aims to produce stylish, comfortable and capable AR glasses that offer real-time translation, memory of where objects are, text reading and other functions, and that integrate seamlessly with Android devices.

In its latest presentation, Google took the wraps off Project Astra, the DeepMind team's effort to build real-time, multimodal AI agents, running on a mysterious pair of prototype glasses. On Wednesday, Google announced that it would give the prototype glasses, which combine AI and AR capabilities, to a small number of selected users for real-world testing.

Translation feature demo on Google's prototype glasses

These glasses run on Android XR, a new operating system Google built for visual computing and designed to support glasses, headsets and other devices. Google noted that, although the glasses look impressive, they are currently only a technology demonstration; no release date or product details have been announced.

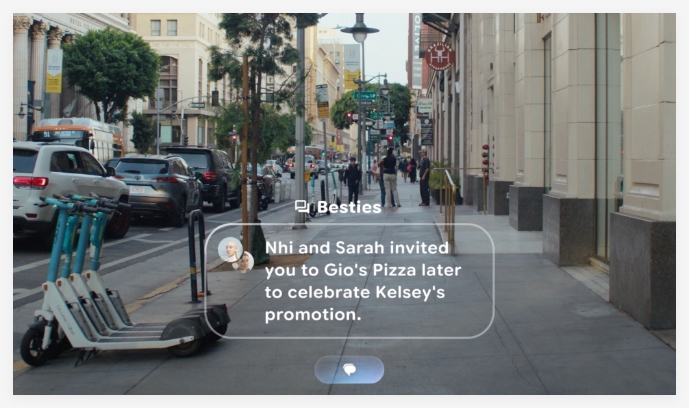

The glasses' functions include real-time translation, remembering where objects are, and reading text without reaching for a phone, demonstrating the strong potential of combining AI and AR. Google said its long-term goal is to build more stylish, comfortable glasses that work seamlessly with Android devices and deliver information such as directions, translations and message summaries with a simple tap.

Demo of Google's prototype glasses

Google's vision puts it in a strong position in the AR glasses field, largely because of Project Astra, which Google presents as offering stronger multimodal AI capabilities than existing technologies. Google also said the glasses' AI system can process images of the surroundings and voice input in real time to help users complete tasks. Although Project Astra is currently limited to mobile phone apps, its potential in future AR glasses is considerable.

Compared with Meta's and Snap's AR glasses, Google's Project Astra may hold an edge in multimodal AI. Although it is still in development, Google's technology could bring new breakthroughs to AR glasses.

The emergence of Project Astra heralds innovation in AR glasses, and its multimodal AI functions promise users a smarter, more convenient experience. Although an official launch is still some way off, Google's technical strength points to where AR glasses are heading. More surprises from Google are likely to follow.