Open-sourcing large language models (LLMs) accelerates the development of AI technology, but it also creates challenges for intellectual property protection. The emergence of "shell" models makes tracing a model's origin an urgent problem. This article discusses the shortcomings of existing model fingerprinting methods and introduces REEF, a new and more robust fingerprinting method. It also explains how REEF addresses the "shelling" problem and protects the intellectual property of LLMs.

In the AI era, large language models (LLMs) are like martial arts secret manuals. Training one consumes enormous amounts of compute and data, much like a master who has spent years practicing in seclusion. Open-sourcing a model is like the master making his manual public, but the release comes with a license (such as Apache 2.0 or the Llama 2 Community License) that protects his intellectual property (IP).

However, the martial world is treacherous, and "shelling" incidents keep happening. Some developers claim to have trained new LLMs when in fact they have merely wrapped or fine-tuned other base models (such as Llama-2 and MiniCPM-V). This is like secretly learning someone else's martial arts and passing it off as your own original creation. To prevent this, model owners and third parties need a way to identify "shelled" models.

There are two main types of existing model fingerprint identification methods:

Injected fingerprints: This is like secretly marking the manual, as watermarking methods do. These methods deliberately plant "triggers" during training or fine-tuning so that the model produces specific content under specific conditions, which then reveals the model's origin. However, this approach increases training costs, can degrade model performance, and the fingerprint may even be removed. Moreover, it cannot be applied to models that have already been released.

Intrinsic fingerprints: This is like judging the origin of a secret manual from its content and style. These methods identify a model from its own properties, such as its weights and feature representations. Weight-based methods compare the similarity of model weights, but they are sensitive to weight changes such as permutation, pruning, and fine-tuning. Semantics-based methods identify a model by statistically analyzing the text it generates. However, both kinds of methods lack robustness.
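Why are weight-based fingerprints so easy to defeat by permutation? The toy illustration below (not from the paper) uses a small, randomly initialized two-layer NumPy MLP rather than a real LLM. It shuffles the hidden units, which leaves the network's outputs unchanged, and then compares the weights directly. The layer sizes and the use of cosine similarity as the "weight fingerprint" are assumptions chosen for illustration.

```python
import numpy as np

def mlp(x, w1, w2):
    # Two-layer MLP with elementwise ReLU on the hidden units
    return np.maximum(x @ w1, 0.0) @ w2

def cosine(a, b):
    # Cosine similarity between two flattened weight tensors
    a, b = a.ravel(), b.ravel()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

rng = np.random.default_rng(0)
w1 = rng.standard_normal((16, 64))   # input -> hidden weights
w2 = rng.standard_normal((64, 8))    # hidden -> output weights

# Shuffle hidden units: permute columns of w1 and rows of w2 consistently
perm = rng.permutation(64)
w1_p, w2_p = w1[:, perm], w2[perm, :]

x = rng.standard_normal((5, 16))
same_outputs = np.allclose(mlp(x, w1, w2), mlp(x, w1_p, w2_p))
weight_sim = cosine(w1, w1_p)

print(same_outputs)           # True: the network computes the same function
print(round(weight_sim, 3))   # near 0: the weights look unrelated
```

A "shell" model could apply this kind of relabeling for free, which is why a fingerprint should compare what a model *represents* rather than how its parameters happen to be laid out.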

So, is there a method that can reliably identify "shell" models without affecting model performance, while withstanding all sorts of "fancy" modifications?

Researchers from the Shanghai Artificial Intelligence Laboratory and other institutions have proposed a new model fingerprinting method: REEF.

REEF works as follows:

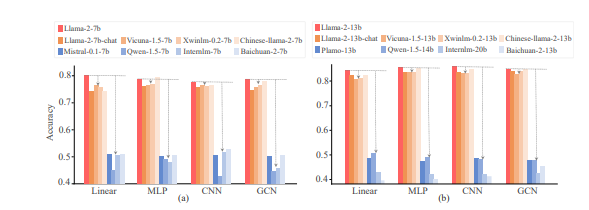

REEF is a fingerprinting method based on feature representations. Rather than relying on the representation of any single layer, it exploits the strong representation-modeling ability of LLMs and extracts features from multiple layers for identification.

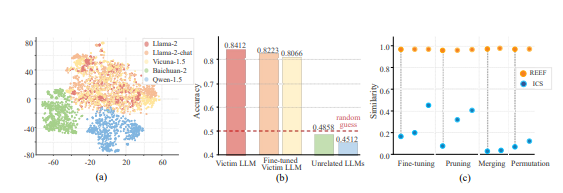

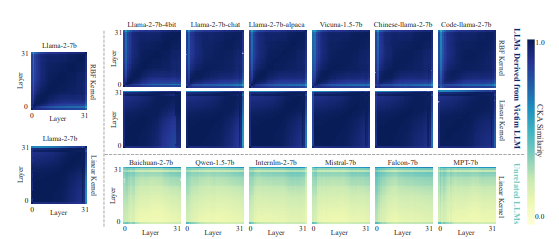

It compares the centered kernel alignment (CKA) similarity between the feature representations that the two models produce for the same samples. CKA is a similarity index based on the Hilbert-Schmidt Independence Criterion (HSIC), which measures the statistical dependence between two sets of random variables.
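A minimal NumPy sketch of CKA is shown below. It assumes a linear kernel. REEF's actual kernel choice and implementation details may differ, and the function name `linear_cka` is hypothetical. With linear kernels, the HSIC-based ratio reduces to the Frobenius norms of products of centered feature matrices. Rows must correspond to the same input samples, but the two models may have different hidden sizes.

```python
import numpy as np

def linear_cka(x: np.ndarray, y: np.ndarray) -> float:
    """Linear-kernel CKA between two representation matrices.

    x: (n_samples, d1) features from model A; y: (n_samples, d2) from model B.
    Rows must correspond to the same input samples; d1 and d2 may differ.
    """
    # Center each feature column so the comparison ignores constant offsets
    x = x - x.mean(axis=0, keepdims=True)
    y = y - y.mean(axis=0, keepdims=True)
    # With linear kernels K = x x^T and L = y y^T, HSIC(K, L) is proportional
    # to ||y^T x||_F^2; the normalization below cancels the constant factors
    cross = np.linalg.norm(y.T @ x, "fro") ** 2
    norm_x = np.linalg.norm(x.T @ x, "fro")
    norm_y = np.linalg.norm(y.T @ y, "fro")
    return float(cross / (norm_x * norm_y))

rng = np.random.default_rng(0)
a = rng.standard_normal((200, 32))   # toy features from one model
b = rng.standard_normal((200, 48))   # unrelated toy features, different width

print(round(linear_cka(a, a), 6))   # 1.0: identical representations
print(linear_cka(a, b) < 0.5)       # True: unrelated features score low
```

The score lies between 0 and 1 and is symmetric in its two arguments. Note that unrelated random features do not score exactly 0, because finite samples introduce a small positive bias.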

If the similarity is high, the suspect model is likely derived from the victim model; otherwise, it probably is not.
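The decision step can be sketched as follows: compute one CKA score per layer on a shared set of inputs, then compare an aggregate against a threshold. This is a hypothetical illustration. The averaging rule, the threshold of 0.8, and the helper names `layerwise_similarity` and `looks_derived` are assumptions, not values or APIs from the paper. The "derived" model is simulated as the victim's features plus small noise, standing in for light fine-tuning.

```python
import numpy as np

def linear_cka(x, y):
    # Linear-kernel CKA on column-centered feature matrices (n_samples x dim)
    x = x - x.mean(axis=0)
    y = y - y.mean(axis=0)
    return float(np.linalg.norm(y.T @ x) ** 2
                 / (np.linalg.norm(x.T @ x) * np.linalg.norm(y.T @ y)))

def layerwise_similarity(victim_layers, suspect_layers):
    # One CKA score per layer; both lists hold (n_samples, dim) arrays
    # computed on the SAME input samples, layer by layer
    return [linear_cka(v, s) for v, s in zip(victim_layers, suspect_layers)]

def looks_derived(scores, threshold=0.8):
    # HYPOTHETICAL decision rule: average over layers and compare to a fixed
    # threshold; 0.8 is illustrative, not a value taken from the paper
    return float(np.mean(scores)) >= threshold

rng = np.random.default_rng(1)
n, d = 256, 64
victim = [rng.standard_normal((n, d)) for _ in range(4)]           # 4 toy layers
derived = [v + 0.1 * rng.standard_normal((n, d)) for v in victim]  # lightly perturbed
unrelated = [rng.standard_normal((n, d)) for _ in range(4)]        # independent model

scores_derived = layerwise_similarity(victim, derived)
scores_unrelated = layerwise_similarity(victim, unrelated)
print(looks_derived(scores_derived))     # True
print(looks_derived(scores_unrelated))   # False
```

With real models, each array would hold the hidden states of one layer for the same batch of prompts. Random toy matrices only demonstrate the mechanics.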

What are the advantages of REEF?

No training required: REEF does not affect model performance and adds no extra training cost.

Strong robustness: REEF remains robust to a wide range of downstream modifications, such as model pruning, fine-tuning, merging, permutation, and scaling transformations. Even if the suspect model undergoes extensive fine-tuning (on up to 700B tokens of data), REEF can still reliably identify whether it originates from the victim model.

Theoretical guarantee: The researchers prove that CKA is invariant to column-wise permutation and scaling transformations.
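The invariance property can be checked numerically. The sketch below uses toy random features and assumes a linear kernel. It shuffles the feature columns (neurons) and multiplies everything by a single scale factor. CKA stays at 1, while a naive elementwise cosine similarity collapses. The scale factor 3.5 is arbitrary. Linear CKA is invariant to this kind of uniform scaling, but not, in general, to rescaling each column by a different factor.

```python
import numpy as np

def linear_cka(x, y):
    # Linear-kernel CKA on column-centered feature matrices (n_samples x dim)
    x = x - x.mean(axis=0)
    y = y - y.mean(axis=0)
    return float(np.linalg.norm(y.T @ x) ** 2
                 / (np.linalg.norm(x.T @ x) * np.linalg.norm(y.T @ y)))

rng = np.random.default_rng(2)
feats = rng.standard_normal((128, 32))     # toy features from the victim model
perm = rng.permutation(feats.shape[1])     # shuffle feature columns (neurons)
transformed = 3.5 * feats[:, perm]         # ...and rescale them uniformly

cka = linear_cka(feats, transformed)
cos = float(feats.ravel() @ transformed.ravel()
            / (np.linalg.norm(feats) * np.linalg.norm(transformed)))

print(round(cka, 6))   # 1.0: CKA sees the same representation
print(round(cos, 3))   # far from 1: elementwise comparison is fooled
```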

Experimental results show that REEF performs well at identifying "shell" models, outperforming existing weight-based and semantics-based methods.

REEF provides a new tool for protecting the intellectual property of LLMs and helps combat unethical or illegal behavior such as unauthorized use or copying of models.

Paper address: https://arxiv.org/pdf/2410.14273

All in all, REEF offers an effective, robust, and efficient solution to intellectual property protection for open-source LLMs, and contributes to a healthier AI ecosystem.