OpenAI announced that ChatGPT's Advanced Voice Mode now has vision capabilities. Plus, Team, and Pro subscribers can interact with ChatGPT in real time through their phone cameras and can also share their screens. The feature was delayed several times and is launching only after a long testing period. Not all users can use it immediately, however: some regions and account types will have to wait until January next year or longer.

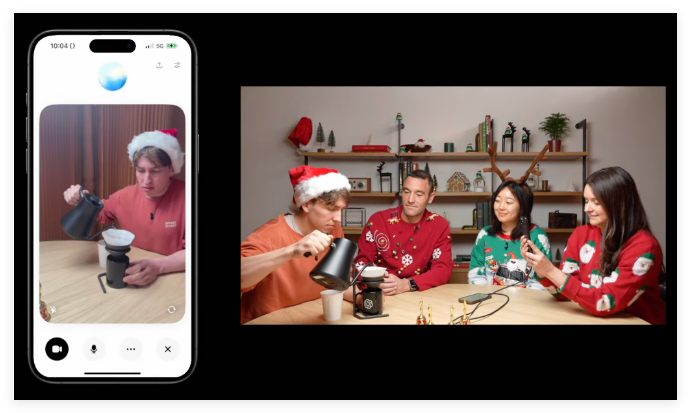

OpenAI announced on Thursday that Advanced Voice Mode, the human-like conversational feature it developed for ChatGPT, is gaining vision. Users who subscribe to ChatGPT Plus, Team, or Pro can now point their phone camera at an object and have ChatGPT respond in near real time.

Advanced Voice Mode with vision also supports screen sharing, which lets ChatGPT analyze what is on the device's screen. For example, it can explain various settings menus or offer suggestions on a math problem.

Using it is simple: tap the voice icon next to the ChatGPT chat bar, then tap the video icon in the lower left corner to start video. To share your screen, tap the three-dot menu and select "Share Screen".

OpenAI said the rollout of Advanced Voice Mode with vision begins this Thursday and will be completed within the next week. The feature will not be available to all users right away, though. ChatGPT Enterprise and Edu users will have to wait until January next year, and OpenAI has not announced a timetable for users in the EU, Switzerland, Iceland, Norway, and Liechtenstein.

During a recent appearance on CBS's "60 Minutes," OpenAI President Greg Brockman demonstrated Advanced Voice Mode's visual analysis capabilities to Anderson Cooper. As Cooper drew human body parts on a blackboard, ChatGPT was able to understand and comment on his drawings. For example, it said the brain was positioned correctly and suggested that its shape should be closer to an oval.

During the same demonstration, however, Advanced Voice Mode made a mistake on a geometry problem, a sign that it remains prone to "hallucinations".

Advanced Voice Mode with vision has been delayed multiple times. In April, OpenAI promised it would launch "within weeks," but later said it would need more time. When the feature finally reached some ChatGPT users in early fall, it did not yet include visual analysis.

Amid growing competition in artificial intelligence, rivals such as Google and Meta are developing similar capabilities. This week, Google opened Project Astra, its conversational AI project with real-time video analysis, to some Android testers.

In addition to the vision features, OpenAI on Thursday launched a festive "Santa Mode," which adds Santa's voice as an option in ChatGPT. Users can enable it by tapping the snowflake icon next to the prompt bar in the ChatGPT app.

The vision update to ChatGPT's Advanced Voice Mode marks an improvement in AI's ability to interact with the real world, but the repeated delays and the errors in the demonstration also expose the technology's current limitations. Further refinement and wider availability of similar features are worth watching for as AI continues to shape everyday life.