Google recently released Trillium, its sixth-generation artificial intelligence accelerator chip, with eye-catching gains in both performance and energy efficiency. Trillium has shown outstanding capabilities in training the Gemini 2.0 AI model: its training performance is four times that of the previous generation, energy consumption is greatly reduced, and training performance per dollar is 2.5 times higher. This could profoundly change the economics of AI development and push machine learning to new heights. Google has connected more than 100,000 Trillium chips in a single network to build one of the world's most powerful AI supercomputers, an impressive demonstration of its integrated approach to AI infrastructure.

Google recently released Trillium, its sixth-generation Tensor Processing Unit (TPU) for artificial intelligence, claiming that this breakthrough is likely to fundamentally change the economics of AI development and push the boundaries of machine learning. Trillium delivered significant performance improvements while training Google's newly released Gemini 2.0 AI model: its training performance is four times that of the previous generation, while energy consumption is significantly reduced.

Google CEO Sundar Pichai emphasized at the press conference that Trillium is at the core of the company's AI strategy, and that both training and inference for Gemini 2.0 run entirely on this chip. Google has connected more than 100,000 Trillium chips in a single network to build one of the world's most powerful AI supercomputers.

Trillium's technical specifications represent significant advances on several fronts. Compared with its predecessor, Trillium raises peak compute per chip by 4.7 times and doubles both high-bandwidth memory (HBM) capacity and inter-chip interconnect bandwidth. Just as important, its energy efficiency has improved by 67%, a particularly significant metric at a time when data centers face mounting pressure over power consumption.
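To make the 67% figure more concrete, the short Python sketch below converts an efficiency gain into the relative energy needed for the same amount of work. It assumes that "energy efficiency" means useful work per unit of energy and normalizes the previous generation to 1.0; the function name is a hypothetical helper for illustration, not part of any Google tool or API.

```python
def relative_energy_for_same_work(efficiency_gain: float) -> float:
    """Energy for a fixed workload, relative to the previous generation (= 1.0).

    Assumption: efficiency = work / energy. A fractional gain g therefore
    means (1 + g) times as much work per joule, so the same job needs
    1 / (1 + g) of the baseline energy.
    """
    if efficiency_gain <= -1.0:
        raise ValueError("efficiency_gain must be greater than -1")
    return 1.0 / (1.0 + efficiency_gain)


if __name__ == "__main__":
    rel = relative_energy_for_same_work(0.67)  # the quoted +67% improvement
    print(f"Same job uses {rel:.2f}x the baseline energy ({1 - rel:.0%} less)")
```

Under this assumption, a 67% efficiency gain works out to roughly 0.60 times the baseline energy, or about 40% less energy for an identical job. It says nothing about total power draw, since a faster chip may also be used to do more work overall.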

Trillium's economic impact is just as notable. Google said that, compared with the previous generation, Trillium delivers 2.5 times the training performance per dollar, which may reshape the economics of AI development. AI21 Labs, an early Trillium user, has reported significant improvements; its chief technology officer, Barak Lenz, said the gains in scale, speed and cost-effectiveness are significant.
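Similarly, a 2.5x gain in training performance per dollar can be read as a reduction in the cost of a fixed training job. The sketch below assumes that "performance per dollar" means training work completed per dollar spent; the function name and the $1,000,000 baseline are hypothetical values chosen only for illustration, not figures reported by Google or AI21 Labs.

```python
def relative_cost_for_fixed_job(perf_per_dollar_gain: float) -> float:
    """Cost of the same training job relative to the previous generation (= 1.0).

    Assumption: performance per dollar = training work / dollars spent,
    so an N-times gain means the same work costs 1 / N as much.
    """
    if perf_per_dollar_gain <= 0:
        raise ValueError("perf_per_dollar_gain must be positive")
    return 1.0 / perf_per_dollar_gain


if __name__ == "__main__":
    baseline_cost_usd = 1_000_000.0  # hypothetical baseline, for illustration only
    new_cost_usd = baseline_cost_usd * relative_cost_for_fixed_job(2.5)
    print(f"Hypothetical job: ${baseline_cost_usd:,.0f} -> ${new_cost_usd:,.0f}")
```

With these assumptions, the same job would cost about 40% of the baseline, i.e. roughly $400,000 instead of $1,000,000.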

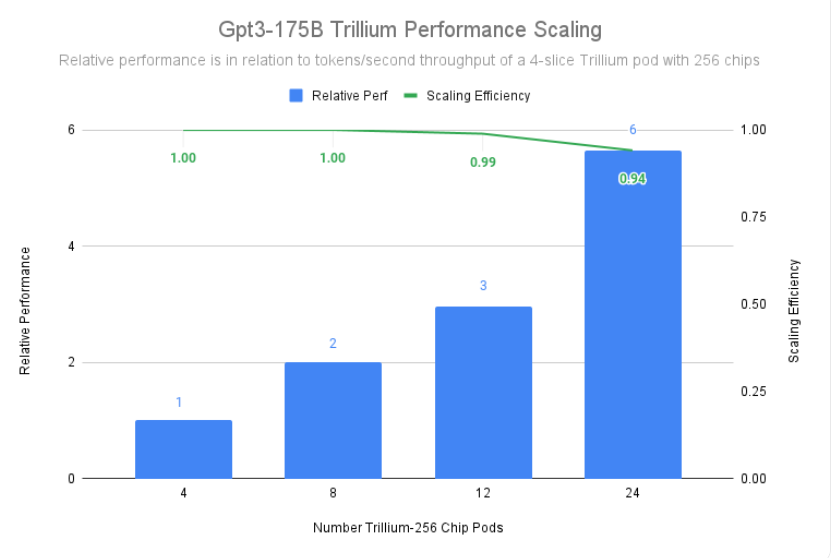

Google has deployed Trillium as part of its AI Hypercomputer architecture, reflecting its integrated approach to AI infrastructure. The system combines more than 100,000 Trillium chips with a Jupiter network fabric providing 13 petabits per second of bisection bandwidth, allowing a single distributed training job to scale across hundreds of thousands of accelerators.

Trillium's release will further intensify competition in AI hardware, a market dominated by Nvidia. While Nvidia's GPUs remain the industry standard for many AI applications, Google's custom-chip approach may have an edge in specific workloads. Industry analysts note that Google's heavy investment in custom chip development reflects its strategic bet on the growing importance of AI infrastructure.

As the technology advances, Trillium signals not only higher performance but also that AI computing is becoming more accessible and affordable. Google said that having the right hardware and software infrastructure will be key to continued progress in AI. As AI models grow more complex, demand for the underlying hardware will keep rising, and Google clearly intends to stay at the forefront of this field.

Official blog: https://cloud.google.com/blog/products/compute/trillium-tpu-is-ga

The release of Trillium marks a major breakthrough for Google in AI hardware. Its significant gains in performance, energy efficiency and cost-effectiveness are set to have a profound impact on the AI industry and suggest that AI computing will become more accessible, affordable and efficient. It extends Google's lead in AI and also points the way for technological progress across the industry.