OpenAI's recently released video generation model Sora has attracted widespread attention, but the legality of its training data has been called into question. An in-depth investigation suggests that Sora's training data may include a large amount of unauthorized gameplay video and livestream content, which could expose OpenAI to significant legal risk.

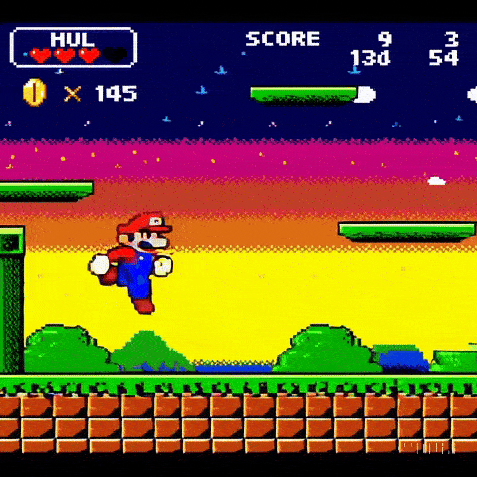

Through detailed testing, the investigators found that Sora can generate videos in a wide range of gaming styles, from Super Mario Bros.-style scenes to Call of Duty-style first-person shooter footage, and even clips resembling 1990s arcade fighting games. More striking still, Sora appeared to understand what Twitch livestreams look like and generated characters closely resembling the well-known streamers Auronplay and Pokimane.

Intellectual property lawyers have issued serious warnings. Joshua Weigensberg, an attorney at Pryor Cashman, noted that training AI models on unauthorized video game content could carry serious legal risks. Experts stress that copyright in gameplay videos is especially complex: it covers not only the game developers' copyright in the game content itself, but also any separate copyright that players and video creators may hold in their recorded footage.

OpenAI has consistently been vague about where its training data comes from. The company has acknowledged using only "publicly available" data and licensed content from media libraries such as Shutterstock, without saying where the gameplay footage came from. This lack of transparency has deepened industry concerns about potential copyright infringement.

The technology industry is currently facing a series of similar intellectual property lawsuits. Microsoft and OpenAI have been accused of reproducing licensed code, AI art app makers have been accused of infringing artists' rights, and music AI startups have been sued by record labels. Copyright has become one of the biggest obstacles to the development of generative AI.

Legal experts warn that even if AI companies ultimately prevail in these lawsuits, individual users may still face intellectual property infringement risk. "Generative AI systems often output identifiable intellectual property assets," Weigensberg said. "Regardless of the programmer's intent, complex systems may still generate copyrighted material."

As world model technology continues to evolve, the problem is likely to become more complex. OpenAI has suggested that Sora can, in effect, generate video games in real time, and the resemblance between such "synthetic" games and the training content could lead to further legal disputes.

Industry lawyer Avery Williams put it bluntly: "Training artificial intelligence platforms with sounds, actions, characters, songs, dialogue and artwork from video games essentially constitutes copyright infringement." How the legal disputes over "fair use" are resolved could profoundly affect both the video game industry and the broader creative market.

Sora is one of the most compelling AI video generation technologies currently available, and the way it was trained reflects the legal gray area that generative AI occupies. As technological innovation and intellectual property protection collide, OpenAI is likely to face mounting challenges.

The Sora case highlights the copyright problems that accompany the development of generative AI. Balancing technological innovation with intellectual property protection is a major challenge for OpenAI and the wider AI industry. The outcome bears not only on companies' legal exposure but also on the healthy long-term development of AI technology.