The latest Tülu3 series of models from the Allen Institute for Artificial Intelligence (AI2) is an impressive step forward for open-source language models. Tülu3 performs comparably to closed-source models such as GPT-4o-mini. More importantly, it is completely open source, with its training data, code, training recipes, and evaluation framework all published. This makes it a milestone for the development of open post-training techniques. Post-training addresses problems that pre-trained models face in practice, such as generating harmful content and failing to follow instructions, and Tülu3 opens new possibilities for AI research and applications.

In artificial intelligence, post-training is becoming an important way to improve model performance. AI2 recently released the Tülu3 series, a family of fully open-source language models whose performance is comparable to closed-source models such as GPT-4o-mini. The release includes not only the model weights, data, code, and training recipes but also an evaluation framework, with the aim of advancing open-source post-training techniques.

A pre-trained model on its own often falls short of practical needs: it may produce toxic or dangerous content and struggle to follow human instructions. Post-training stages such as instruction fine-tuning and learning from human feedback are therefore essential. Optimizing the post-training process remains difficult, however, because improving one capability of the model can degrade others.

To address this, major companies have made their post-training methods more complex, running multiple rounds of training and combining human-written and synthetic data, but most of these methods remain closed source. The Tülu3 series, by contrast, narrows the performance gap between open-source and closed-source models and contributes new training ideas.

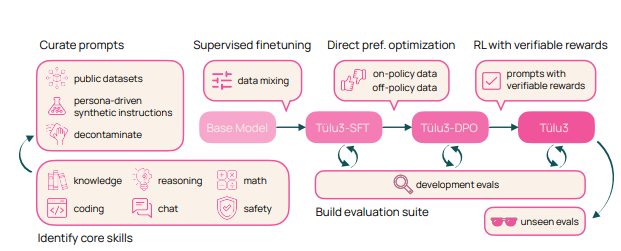

Tülu3's training process has four stages: data construction, supervised fine-tuning, preference tuning, and reinforcement learning with verifiable rewards (RLVR).

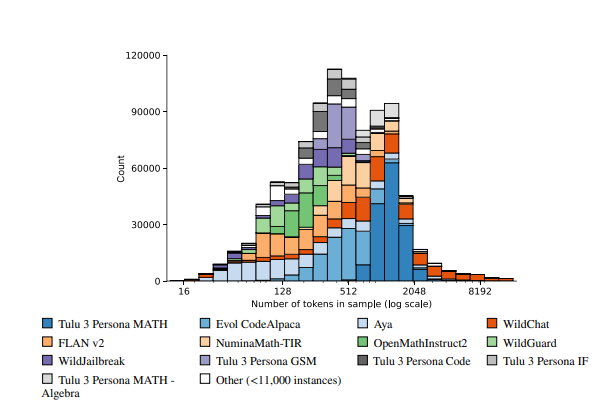

First, the researchers identify the core skills the model should master and build training data for them by combining human-written and synthetic data.

Second, supervised fine-tuning (SFT) brings the model to the level of other state-of-the-art models on the targeted skills.

Third, Direct Preference Optimization (DPO) is used to further improve the model's overall performance.

Finally, the new RLVR method is applied. It helps the model on tasks whose results can be checked for correctness, such as math problems with known answers.
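The last two stages can be made more concrete with a minimal Python sketch. `dpo_loss` computes the standard DPO objective for a single preference pair, taking summed sequence log-probabilities from the policy and from a frozen reference model as plain floats. `verifiable_reward` illustrates the RLVR idea: a completion is rewarded only when its answer can be checked as correct. The function names, the default `beta = 0.1`, the "last number in the text" answer extractor, and the reward scale are all illustrative assumptions, not Tülu3's actual code.

```python
import math
import re
from typing import Optional


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one (chosen, rejected) pair.

    Inputs are summed log-probabilities of each full response. beta = 0.1
    is an illustrative default, not a value taken from the Tülu3 report.
    """
    # Implicit reward margin: how much more the policy, relative to the
    # frozen reference model, prefers the chosen response over the rejected one.
    margin = beta * ((policy_chosen_logp - ref_chosen_logp)
                     - (policy_rejected_logp - ref_rejected_logp))
    # Loss is -log(sigmoid(margin)) = softplus(-margin), computed stably.
    x = -margin
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def extract_final_number(completion: str) -> Optional[str]:
    """Hypothetical answer extractor: return the last number in the text."""
    matches = re.findall(r"-?\d+(?:\.\d+)?", completion.replace(",", ""))
    return matches[-1] if matches else None


def verifiable_reward(completion: str, ground_truth: str,
                      correct_reward: float = 1.0) -> float:
    """Binary verifiable reward: correct_reward if the answer matches, else 0.

    The reward scale (correct_reward) is an assumption for illustration.
    """
    answer = extract_final_number(completion)
    if answer is None:
        return 0.0
    return correct_reward if float(answer) == float(ground_truth) else 0.0


if __name__ == "__main__":
    # Identical policy and reference log-probs give a zero margin, so loss = log 2.
    print(round(dpo_loss(-10.0, -12.0, -10.0, -12.0), 3))
    # The final answer 7 matches the ground truth, so the reward is 1.0.
    print(verifiable_reward("She has 3 + 4 = 7 apples. The answer is 7.", "7"))
```

Running the script prints `0.693` and `1.0`. The softplus form avoids overflow when the margin is large and negative. The binary reward reflects the key design choice behind RLVR: instead of relying on a learned reward model, the model is rewarded only for outcomes that can be verified.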

Tülu3 is built on Llama 3.1 and performs strongly in reasoning, mathematics, programming, and instruction following. Compared with other open-source and closed-source models, it shows strong overall capability across multiple benchmarks, marking a major advance in open-source post-training.

Paper link: https://allenai.org/papers/tulu-3-report.pdf

Demo: https://playground.allenai.org/

Highlights:

- Tülu3 is an open-source language model from AI2 whose performance is comparable to closed-source models such as GPT-4o-mini.

- Post-training is crucial: it effectively improves model performance in practical applications.

- Tülu3's training process has four stages: data construction, supervised fine-tuning, preference tuning, and reinforcement learning with verifiable rewards.

Because Tülu3 is fully open source, researchers can study its training methods in depth and build on them, which should significantly advance open-source language models. Its strong performance across many areas also suggests that open models will play a larger role in the future, and Tülu3 is expected to help broaden the adoption and application of AI technology.