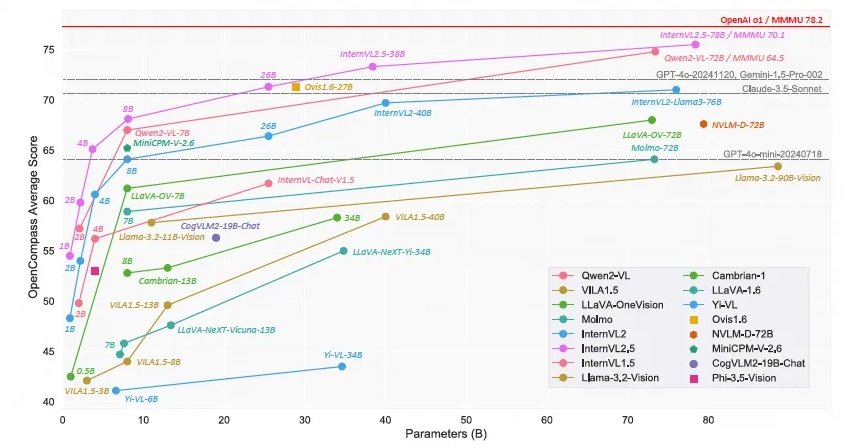

Shanghai AI Laboratory has released Scholar·Wanxiang InternVL2.5, an open-source multi-modal large language model. It achieves more than 70% accuracy on the MMMU multi-modal understanding benchmark, performance comparable to commercial models such as GPT-4o and Claude-3.5-Sonnet. Using chain-of-thought reasoning, InternVL2.5 performs strongly across multiple benchmarks, especially in multi-disciplinary reasoning, document understanding, and multi-modal hallucination detection. Because the model is open source, it is expected to give a strong boost to the development and innovation of multi-modal AI technology.

Shanghai AI Laboratory recently announced the Scholar·Wanxiang InternVL2.5 model. This open-source multi-modal large language model is the first open-source model to exceed 70% accuracy on the Multi-modal Understanding Benchmark (MMMU), putting it on par with commercial models such as GPT-4o and Claude-3.5-Sonnet.

With Chain-of-Thought (CoT) reasoning, InternVL2.5 gains a further 3.7 percentage points on MMMU, demonstrating strong potential for test-time scaling. The model builds on InternVL2.0 and improves performance further through enhanced training and testing strategies and higher-quality data. The research team also studied the visual encoder, language model, dataset size, and test-time configuration in depth to explore how model scale relates to performance.

InternVL2.5 is competitive across a wide range of benchmarks, including multi-disciplinary reasoning, document understanding, multi-image/video understanding, real-world understanding, multi-modal hallucination detection, visual grounding, multilingual capabilities, and pure-language processing. This achievement gives the open-source community a new standard for developing and applying multi-modal AI systems and opens up new possibilities for research and applications in artificial intelligence.

InternVL2.5 keeps the model architecture of its predecessors InternVL1.5 and InternVL2.0 and follows the "ViT-MLP-LLM" paradigm: a newly incrementally pre-trained vision encoder, InternViT-6B or InternViT-300M, is connected to pre-trained LLMs of various sizes and types through a randomly initialized two-layer MLP projector. To make high-resolution processing more scalable, the research team applies a pixel-shuffle operation that reduces the number of visual tokens to one quarter of the original.
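The "ViT-MLP-LLM" connection and the token-reduction step can be illustrated with a small plain-Python sketch. This is not InternVL2.5's actual code, only a minimal illustration under stated assumptions: the names `pixel_unshuffle`, `MLPProjector`, and `vision_tokens_to_llm` are hypothetical, the merge factor of 2 per side (each 2 × 2 block of neighbouring tokens becomes one wider token, a 4× reduction) is assumed, and the projector is simplified to Linear → GELU → Linear with randomly initialized weights.

```python
import math
import random


def pixel_unshuffle(grid, r=2):
    """Space-to-depth: merge each r x r block of neighbouring tokens into one token.

    grid: H x W nested list of feature vectors (each a list of C floats).
    Returns an (H/r) x (W/r) grid whose vectors have C * r * r features,
    so the number of tokens drops by a factor of r * r.
    """
    h, w = len(grid), len(grid[0])
    if h % r or w % r:
        raise ValueError("grid height and width must be divisible by r")
    out = []
    for i in range(0, h, r):
        row = []
        for j in range(0, w, r):
            merged = []
            # Concatenate the r x r neighbours' features along the channel axis.
            for di in range(r):
                for dj in range(r):
                    merged.extend(grid[i + di][j + dj])
            row.append(merged)
        out.append(row)
    return out


def gelu(x):
    # tanh approximation of the GELU activation
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


def linear(x, weight, bias):
    # weight has shape (n_out, n_in); returns weight @ x + bias
    return [sum(wi * xi for wi, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def init_linear(n_in, n_out, rng):
    # Random uniform initialization (an assumption; any random init would do here).
    bound = 1.0 / math.sqrt(n_in)
    weight = [[rng.uniform(-bound, bound) for _ in range(n_in)] for _ in range(n_out)]
    return weight, [0.0] * n_out


class MLPProjector:
    """Hypothetical two-layer MLP mapping merged vision features to the LLM width."""

    def __init__(self, vit_dim, llm_dim, seed=0):
        rng = random.Random(seed)
        self.w1, self.b1 = init_linear(vit_dim, llm_dim, rng)
        self.w2, self.b2 = init_linear(llm_dim, llm_dim, rng)

    def __call__(self, token):
        hidden = [gelu(v) for v in linear(token, self.w1, self.b1)]
        return linear(hidden, self.w2, self.b2)


def vision_tokens_to_llm(grid, projector, r=2):
    """Reduce the ViT token grid, then project every merged token for the LLM."""
    merged = pixel_unshuffle(grid, r)
    return [projector(tok) for row in merged for tok in row]


if __name__ == "__main__":
    # Toy input: a 4 x 4 grid of 3-dimensional patch features.
    grid = [[[float(i * 4 + j), 0.0, 1.0] for j in range(4)] for i in range(4)]
    projector = MLPProjector(vit_dim=3 * 2 * 2, llm_dim=8)
    tokens = vision_tokens_to_llm(grid, projector, r=2)
    print(len(tokens), len(tokens[0]))  # 4 tokens, each of width 8
```

Assuming a 32 × 32 patch grid per image tile, the same reduction turns 1,024 visual tokens into 256 before they reach the LLM. Folding neighbours into the channel dimension, instead of discarding tokens, keeps all the visual features while shortening the sequence the language model has to process.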

The open source nature of the model means that researchers and developers can freely access and use InternVL2.5, which will greatly promote the development and innovation of multi-modal AI technology.

Model link:

https://www.modelscope.cn/collections/InternVL-25-fbde6e47302942

The open-source release of InternVL2.5 gives multi-modal AI research a valuable resource. Its strong performance and scalability are expected to drive further breakthroughs in the field and enable more innovative applications. We look forward to seeing more results built on InternVL2.5.