NVILA, NVIDIA's latest open visual language model, improves on both accuracy and efficiency. Compared with other large vision models, it substantially reduces training cost, memory usage, and latency, and it performs strongly on multiple benchmarks, surpassing some competitors. This article covers the technical details of NVILA, including its "expand first, compress later" approach, Dynamic-S2 scaling, DeltaLoss-based dataset pruning, and other optimizations that aim to balance accuracy and efficiency. Together they point visual language models in a more economical and efficient direction.

NVIDIA recently launched NVILA, a new generation of open visual language models. Designed to optimize both accuracy and efficiency, it is positioned as a leader in visual AI.

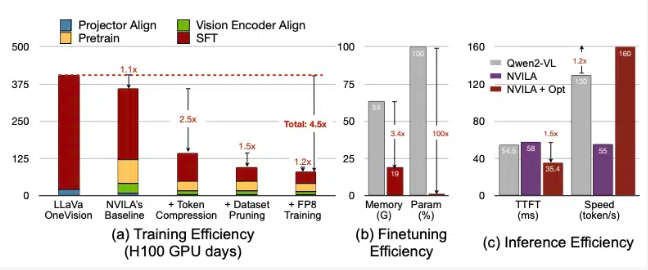

According to NVIDIA, NVILA cuts training costs by a factor of 4.5, reduces the memory required for fine-tuning by a factor of 3.4, and reduces pre-filling and decoding latency by almost a factor of 2. These figures are relative to another large vision model, LLaVA-OneVision.

In video benchmarks, NVILA outperformed GPT-4o mini and also performed well against GPT-4o, Claude 3.5 Sonnet, and Gemini 1.5 Pro. It also narrowly beat Llama 3.2. However, NVIDIA said the model has not yet been released on Hugging Face, and promised to release the code and model soon to support reproducibility.

NVIDIA pointed out that training visual language models is very expensive: training a 7B-parameter model takes approximately 400 GPU days. Fine-tuning is also memory-intensive, with a 7B-parameter model requiring more than 64 GB of GPU memory.
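To see why a figure above 64 GB is plausible, here is a rough back-of-envelope sketch. The byte counts are assumptions for illustration (16-bit weights and gradients, plus two 32-bit Adam moments per parameter); they are not taken from NVIDIA's paper, and activations are ignored entirely.

```python
def finetune_memory_gb(n_params, bytes_weights=2, bytes_grads=2, bytes_optimizer=8):
    """Rough memory for weights + gradients + optimizer state, in GB.

    Assumed defaults (hypothetical, not from the paper):
      - 2 bytes per weight (16-bit)
      - 2 bytes per gradient (16-bit)
      - 8 bytes of optimizer state (two 32-bit Adam moments)
    Activation memory is not counted.
    """
    total_bytes = n_params * (bytes_weights + bytes_grads + bytes_optimizer)
    return total_bytes / 1e9


if __name__ == "__main__":
    estimate = finetune_memory_gb(7e9)
    print(f"Estimated memory for a 7B model: {estimate:.0f} GB")
```

Under these assumptions the estimate already exceeds 64 GB before any activations are stored, which is consistent with the claim that full fine-tuning at this scale is memory-intensive.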

NVIDIA therefore uses an expand-then-compress technique, which the paper calls "scale-then-compress", to balance accuracy and efficiency. In the expansion step, rather than downsizing photos and videos, the model takes in high-resolution images and multiple video frames so that detail is preserved.
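The expansion step can be pictured with a minimal sketch: keep the image at high resolution by splitting it into tiles, and keep more of a video by sampling frames across the whole clip. The function names, the tile size, and the uniform sampling rule are assumptions made for illustration, not NVILA's actual preprocessing.

```python
import numpy as np


def sample_frame_indices(num_frames, num_samples):
    """Pick evenly spaced frame indices spanning the whole clip."""
    return np.linspace(0, num_frames - 1, num_samples).round().astype(int)


def tile_image(image, tile):
    """Split an (H, W, C) image into non-overlapping tile x tile crops.

    H and W are assumed to be multiples of `tile`.
    Returns an array of shape (num_tiles, tile, tile, C), in row-major tile order.
    """
    h, w, c = image.shape
    rows, cols = h // tile, w // tile
    # Separate each spatial axis into (tile index, offset within tile),
    # then bring the two tile-index axes together.
    tiles = image.reshape(rows, tile, cols, tile, c).swapaxes(1, 2)
    return tiles.reshape(rows * cols, tile, tile, c)


if __name__ == "__main__":
    image = np.zeros((896, 896, 3), dtype=np.uint8)  # hypothetical high-resolution input
    print(tile_image(image, 448).shape)              # four 448 x 448 tiles
    print(sample_frame_indices(num_frames=9, num_samples=3))
```

Each tile or frame would then be encoded separately, which is what makes the number of visual tokens grow and motivates the compression step.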

In the compression step, the model reduces the size of the input by compressing visual information into fewer tokens, grouping pixels so that important information is retained. NVIDIA notes in the paper that doubling the resolution doubles the number of visual tokens, which increases training and inference costs by more than a factor of 2. Compressing spatial and temporal tokens reduces this cost.
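As a rough illustration of the compression step, the sketch below merges neighboring tokens in space and averages token grids across groups of frames. Simple averaging is an assumption chosen for clarity; the article does not specify NVILA's compression operators, and the function names and shapes here are hypothetical. The last helper shows one reason cost can grow faster than token count: the number of pairwise attention interactions grows with the square of the number of tokens.

```python
import numpy as np


def spatial_pool(tokens, factor=2):
    """Average each factor x factor block of an (H, W, D) token grid into one token."""
    h, w, d = tokens.shape
    blocks = tokens.reshape(h // factor, factor, w // factor, factor, d)
    return blocks.mean(axis=(1, 3))


def temporal_pool(frames, group=4):
    """Average token grids over consecutive groups of frames.

    (T, H, W, D) -> (T // group, H, W, D); T is assumed to be a multiple of `group`.
    """
    t = frames.shape[0]
    return frames.reshape(t // group, group, *frames.shape[1:]).mean(axis=1)


def attention_pairs(num_tokens):
    """Number of token-to-token interactions in full self-attention."""
    return num_tokens ** 2


if __name__ == "__main__":
    video_tokens = np.random.rand(8, 16, 16, 64)        # 8 frames, 16 x 16 tokens each
    compressed = temporal_pool(video_tokens, group=4)   # 8 frames -> 2 frame groups
    compressed = np.stack([spatial_pool(f) for f in compressed])  # 16 x 16 -> 8 x 8
    before = video_tokens.shape[0] * 16 * 16
    after = compressed.shape[0] * 8 * 8
    print(before, "->", after, "tokens")
    print(attention_pairs(before) // attention_pairs(after), "x fewer attention pairs")
```

In this toy setup the token count drops by 16 times, while the pairwise attention term drops by 256 times, which is why trimming tokens after scaling up can recover much of the added cost.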

NVIDIA also showed demos of the model, in which NVILA answers multiple queries about a single image or video, and compared its outputs with those of NVIDIA's earlier VILA 1.5 model. The paper also details other techniques, such as Dynamic-S2 scaling, DeltaLoss-based dataset pruning, and FP8 quantization.

These techniques are applied to an 8B-parameter model; the details are available on arXiv.

Paper: https://arxiv.org/pdf/2412.04468

Highlights:

- NVILA cuts training costs by a factor of 4.5, improving the efficiency of visual AI.

- By using high-resolution images and multiple video frames, NVILA preserves the detail of its input.

- NVIDIA promises to release the code and models soon to support reproducible research.

All in all, NVILA opens up new possibilities for visual language models. Its efficient training and inference should lower the barrier to building visual AI applications and encourage further work in the field. We look forward to NVIDIA releasing the code and models so that more researchers can build on them and advance visual AI together.