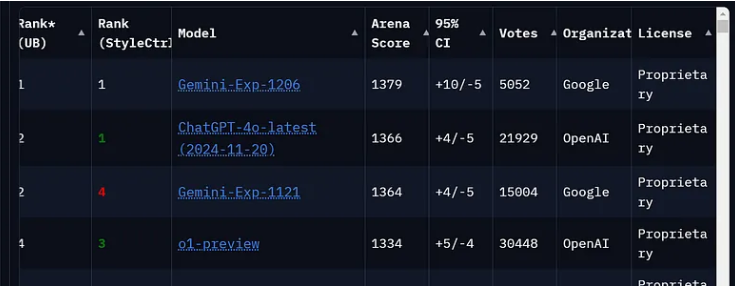

Google's Gemini-Exp-1206 language model has topped the LMArena rankings, attracting industry attention. Its Arena Score of 1379 surpassed that of ChatGPT-4o, making it the new leader. However, ChatGPT-4o has received far more votes, which suggests its ranking rests on broader testing. This article examines the strengths and weaknesses of Gemini-Exp-1206, explains how the LMArena platform evaluates models, and discusses what the result means for the field of artificial intelligence.

Google's latest foray into generative AI has attracted widespread attention. After several months of mediocre performance, Google Gemini has quickly picked up speed with the launch of a new experimental language model, Gemini-Exp-1206. According to the latest Chatbot Arena rankings, this model stands out among many competitors and has taken the lead in generative AI.

Gemini-Exp-1206 achieved the highest Arena Score on LMArena, 1379 points, slightly ahead of the 1366 points of ChatGPT-4o. The score indicates that Gemini-Exp-1206 performed well across multiple evaluations. The new model also outperforms its predecessor, Gemini-Exp-1114.

So, what is LMArena? LMArena, also known as Chatbot Arena, is an open-source platform for evaluating large language models. Developed jointly by LMSYS and UC Berkeley SkyLab, it supports community evaluation of LLM performance through real-time testing and direct side-by-side comparison.

In the rankings, the Arena Score is an Elo-style rating derived from users' votes in head-to-head comparisons between models. The higher the score, the more often a model's responses are preferred. Although Gemini-Exp-1206 scores higher than ChatGPT-4o, ChatGPT-4o is still far ahead in vote count, with 21,929 votes against 5,052 for Gemini-Exp-1206. A higher vote count generally means a more reliable score, as it indicates the model has been tested more extensively.
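To get a feel for what a 13-point gap means, the score difference can be converted into an expected head-to-head win rate. The sketch below assumes the conventional Elo logistic formula with base 10 and a 400-point scale. The article does not state which formula LMArena uses, and the function name `expected_win_rate` is hypothetical.

```python
def expected_win_rate(score_a: float, score_b: float, scale: float = 400.0) -> float:
    """Chance that model A is preferred over model B in a single vote.

    Assumption: an Elo-style logistic curve (base 10, 400-point scale).
    This is an illustrative convention, not a formula taken from the article.
    """
    return 1.0 / (1.0 + 10.0 ** ((score_b - score_a) / scale))


# Scores quoted in the article.
gemini_score = 1379   # Gemini-Exp-1206
chatgpt_score = 1366  # the ChatGPT entry it overtook

p = expected_win_rate(gemini_score, chatgpt_score)
print(f"Expected Gemini win rate: {p:.1%}")
```

Under these assumptions the 13-point lead corresponds to a win rate only slightly above 50%, which is consistent with the article's description of the lead as slight.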

Additionally, the 95% confidence interval (CI) data shows +10/-5 for Gemini and +4/-5 for ChatGPT. In other words, Gemini has the higher score, but the narrower interval for ChatGPT-4o means its score is estimated more precisely.
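The interval figures can be turned into explicit score ranges and compared. This is a minimal sketch that assumes the CI values read as +10/-5 and +4/-5 (upper and lower offsets from the score). The `Rating` class and `intervals_overlap` helper are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass
class Rating:
    name: str
    score: int
    ci_plus: int   # upper offset of the 95% CI (assumed reading of the article's figures)
    ci_minus: int  # lower offset of the 95% CI

    @property
    def lower(self) -> int:
        return self.score - self.ci_minus

    @property
    def upper(self) -> int:
        return self.score + self.ci_plus

    @property
    def width(self) -> int:
        return self.upper - self.lower


def intervals_overlap(a: Rating, b: Rating) -> bool:
    """True if the two confidence intervals share at least one point."""
    return a.lower <= b.upper and b.lower <= a.upper


gemini = Rating("Gemini-Exp-1206", 1379, ci_plus=10, ci_minus=5)
chatgpt = Rating("ChatGPT", 1366, ci_plus=4, ci_minus=5)

for r in (gemini, chatgpt):
    print(f"{r.name}: [{r.lower}, {r.upper}] (width {r.width})")
print("Intervals overlap:", intervals_overlap(gemini, chatgpt))
```

With these numbers the Gemini range is 1374 to 1389 and the ChatGPT range is 1361 to 1370. The Gemini interval is wider, reflecting its smaller vote count, but the two ranges do not overlap.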

It’s worth mentioning that Gemini experimental models are cutting-edge prototypes designed for testing and feedback. These models provide developers with early access to Google’s latest AI advances while demonstrating continued innovation. However, these experimental models are temporary and may be replaced at any time, and are not suitable for use in production environments.

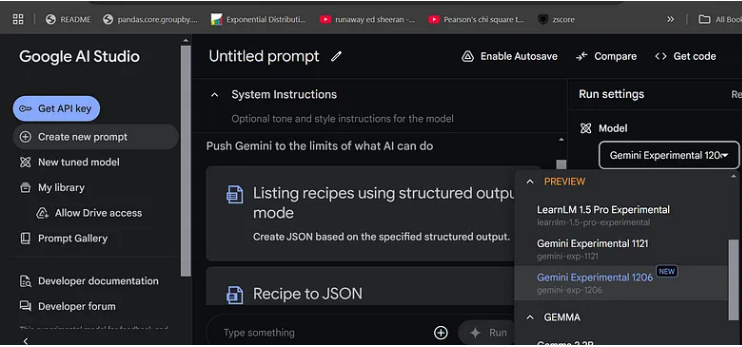

To try Gemini-Exp-1206 for free, go to Google AI Studio, log in, select Create Prompt, and change the model to Gemini Experimental 1206 in the settings to start chatting.

Although the results of Gemini-Exp-1206 are impressive, its experimental nature should be kept in mind. It will take time for the model's full potential to become clear, and the industry is looking forward to a stable release of this strong contender.

Project page: https://ai.google.dev/gemini-api/docs/models/experimental-models?hl=zh-cn

Highlights:

- Gemini-Exp-1206 achieved a score of 1379 in the LMArena rankings, surpassing the 1366 of ChatGPT-4o.
- ChatGPT-4o received 21,929 votes, far more than the 5,052 votes for Gemini-Exp-1206, which makes its score the more reliable of the two.
- The Gemini experimental models give developers early access to Google's latest AI advances, but they are still in the testing stage and are not suitable for production use.

All in all, Gemini-Exp-1206 shows strong potential, but its experimental nature and low vote count are reminders that caution is still needed in practical applications. As the model improves and more users provide test feedback, the Gemini series is expected to take a more important position in the field of generative AI. Following its development will help in understanding the future trends of large language models.