Epoch AI recently released a simulator that models the computing power needed to train large language models. It simulates the cost and efficiency of training GPT-4 under different hardware conditions, which gives researchers a useful reference point. The results show that GPT-4 could in principle be trained even on the GTX 580, a graphics card from 2012, but at ten times the cost of modern hardware. This underscores how much hardware improvements matter for AI model training. The simulator also supports training runs spread across multiple data centers and lets users customize parameters to compare hardware and training strategies, giving a basis for planning future large-scale AI training.

Epoch AI, an artificial intelligence research organization, recently released an interactive simulator designed to model the computing power required to train large language models. Using this simulator, the researchers found that GPT-4 could be trained on older graphics cards from 2012, such as the GTX 580, but the cost would be ten times that of today's modern hardware.

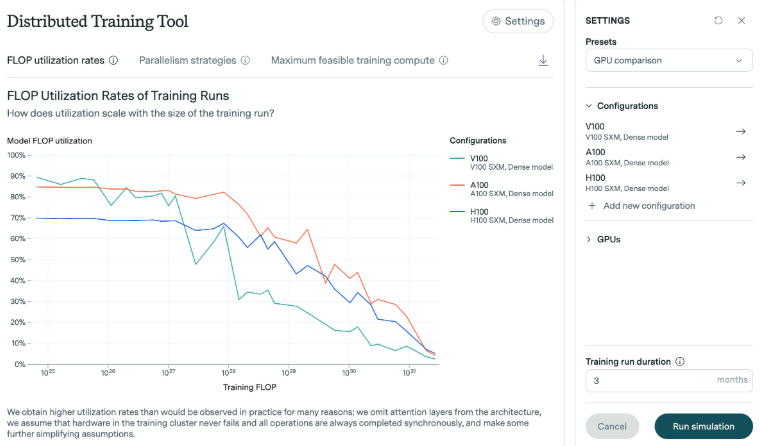

Epoch AI estimates that training GPT-4 required between 1e25 and 1e26 floating-point operations (FLOPs). For this study, the simulator examined how the efficiency of different graphics cards changes as models scale up. The results show that efficiency generally decreases as the model grows. The recently launched H100, for example, maintains high efficiency up to larger training scales, while the efficiency of the older V100 drops off more noticeably as the training scale increases.
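To make the relationship between a compute budget and training time concrete, here is a minimal back-of-the-envelope sketch. It assumes wall-clock time is roughly total FLOPs divided by (number of GPUs × peak FLOP/s × utilization). The peak-throughput figures, the 10,000-GPU cluster, and the 40% utilization are illustrative assumptions, not numbers from Epoch AI's simulator, and the function name `training_days` is hypothetical.

```python
"""Back-of-the-envelope training-time estimate (hypothetical inputs).

Assumption: wall-clock time ~= total FLOPs / (GPUs * peak FLOP/s * utilization).
Peak throughputs and utilization below are illustrative assumptions, not
figures taken from Epoch AI's simulator.
"""

SECONDS_PER_DAY = 86_400


def training_days(total_flop: float, num_gpus: int,
                  peak_flop_per_s: float, utilization: float) -> float:
    """Return the estimated number of days to spend a fixed compute budget."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    # FLOP/s the whole cluster actually achieves after utilization losses.
    effective_flop_per_s = num_gpus * peak_flop_per_s * utilization
    return total_flop / effective_flop_per_s / SECONDS_PER_DAY


# Hypothetical approximate peak throughputs in FLOP/s (assumed, vendor-spec scale).
PEAK = {
    "H100": 989e12,
    "V100": 125e12,
    "GTX 580": 1.58e12,
}

if __name__ == "__main__":
    for budget in (1e25, 1e26):  # the range quoted for GPT-4
        for gpu, peak in PEAK.items():
            days = training_days(budget, num_gpus=10_000,
                                 peak_flop_per_s=peak, utilization=0.4)
            print(f"{budget:.0e} FLOP on 10,000 x {gpu}: {days:,.0f} days")
```

Holding utilization constant is a deliberate simplification: the article's point is that real efficiency falls as scale grows, and by different amounts for different cards, which is exactly what the simulator models and this sketch does not.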

The GTX 580 used in Epoch AI's experiments has only 3 GB of memory. It was the mainstream choice for training the AlexNet model in 2012. Despite the card's age, the researchers conclude that training at this scale would still be possible on such hardware, but the resources and cost required would be prohibitive.

The simulator also supports complex training simulations across multiple data centers. Users can customize parameters such as data center size, latency, and connection bandwidth to simulate training runs spread across multiple locations. The tool also lets users compare the performance of modern graphics cards (such as the H100 and A100), study the effects of different batch sizes and multi-GPU training, and generate detailed log files documenting the simulation output.
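To illustrate why latency and bandwidth matter when training spans several data centers, the sketch below uses the textbook ring all-reduce cost model for synchronizing gradients. It assumes each data center acts as one node in a ring, that the inter-data-center links are the bottleneck, and that all links share the same latency and bandwidth. These are simplifying assumptions; Epoch AI's internal model may differ, and `ring_allreduce_seconds` is a hypothetical name.

```python
"""Textbook ring all-reduce cost model for syncing gradients across data centers.

Assumptions (hypothetical, not Epoch AI's internal model): each data center is
one node in a ring, inter-data-center links are the bottleneck, and every link
has the same latency and bandwidth.
"""


def ring_allreduce_seconds(grad_bytes: float, num_sites: int,
                           latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Estimated time of one ring all-reduce of `grad_bytes` over `num_sites` sites."""
    if num_sites < 2:
        return 0.0  # nothing to synchronize across sites
    # Reduce-scatter and all-gather each take (k - 1) steps.
    steps = 2 * (num_sites - 1)
    # In every step, each link carries one chunk of size grad_bytes / k.
    chunk_bytes = grad_bytes / num_sites
    return steps * (latency_s + chunk_bytes / bandwidth_bytes_per_s)


if __name__ == "__main__":
    # Hypothetical scenario: 100B parameters in 2-byte precision (2e11 bytes),
    # 4 data centers, 20 ms link latency, 100 Gbit/s (12.5e9 bytes/s) links.
    t = ring_allreduce_seconds(2e11, num_sites=4, latency_s=0.02,
                               bandwidth_bytes_per_s=12.5e9)
    print(f"one gradient sync: {t:.2f} s")
```

In this model the latency term grows with the number of sites while the bandwidth term approaches 2·S/β, so adding locations mainly pays a latency cost, and slow links dominate when gradients are large.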

Epoch AI said it developed the simulator to better understand improvements in hardware efficiency and to assess the impact of chip export controls. With the number of large-scale training runs expected to grow during this century, understanding future hardware requirements is especially important.

All in all, Epoch AI's research and simulator offer a valuable reference for training large language models, helping researchers better understand hardware efficiency, optimize training strategies, and make more reliable predictions for the training of future AI models.