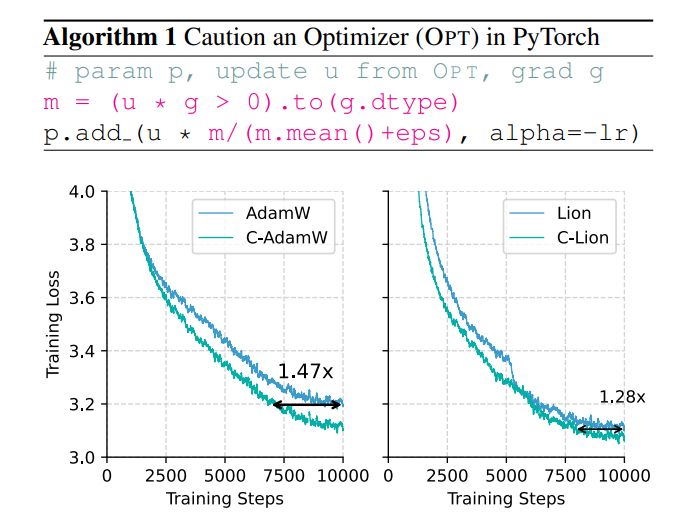

The efficiency and energy costs of large-model training are becoming increasingly prominent, and the standard AdamW optimizer is struggling to keep pace with ever-larger models. To address this, an all-Chinese research team has proposed a new optimizer called C-AdamW (Cautious AdamW). The core idea of C-AdamW is to "think before you act": by checking whether each proposed update actually points in a loss-reducing direction, the model avoids wasting resources on the wrong path, which speeds up training and reduces energy consumption. In Llama and MAE pre-training, the optimizer delivers up to a 1.47x training speedup with almost no additional computational overhead, and it can be adopted with a simple modification to existing code.

In the world of AI, "brute force works miracles" seems to be the golden rule. The larger the model, the more data, and the more compute, the closer we seem to get to the Holy Grail of intelligence. Behind this rapid progress, however, lie enormous cost and energy pressures.

To make AI training more efficient, researchers have long searched for better optimizers, which act like a coach, guiding the model's parameters step by step toward their best state. AdamW, the default optimizer for Transformer pre-training, has been the industry benchmark for years. But as model scale keeps growing, AdamW is starting to show its limits.

Isn't there a way to speed up training while cutting energy consumption? Don't worry: an all-Chinese team has arrived with its "secret weapon", C-AdamW!

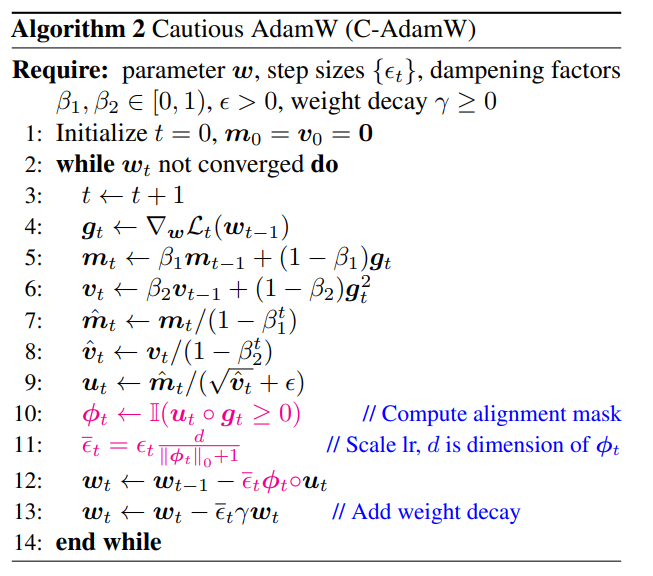

C-AdamW's full name is Cautious AdamW. Doesn't that sound rather "Buddhist" (Chinese internet slang for calm and unhurried)? Indeed, the core idea of C-AdamW is "think before you act".

Imagine that the parameters of the model are like a group of energetic children who always want to run around. AdamW is like a dedicated teacher, trying to guide them in the right direction. But sometimes, children get too excited and run in the wrong direction, wasting time and energy.

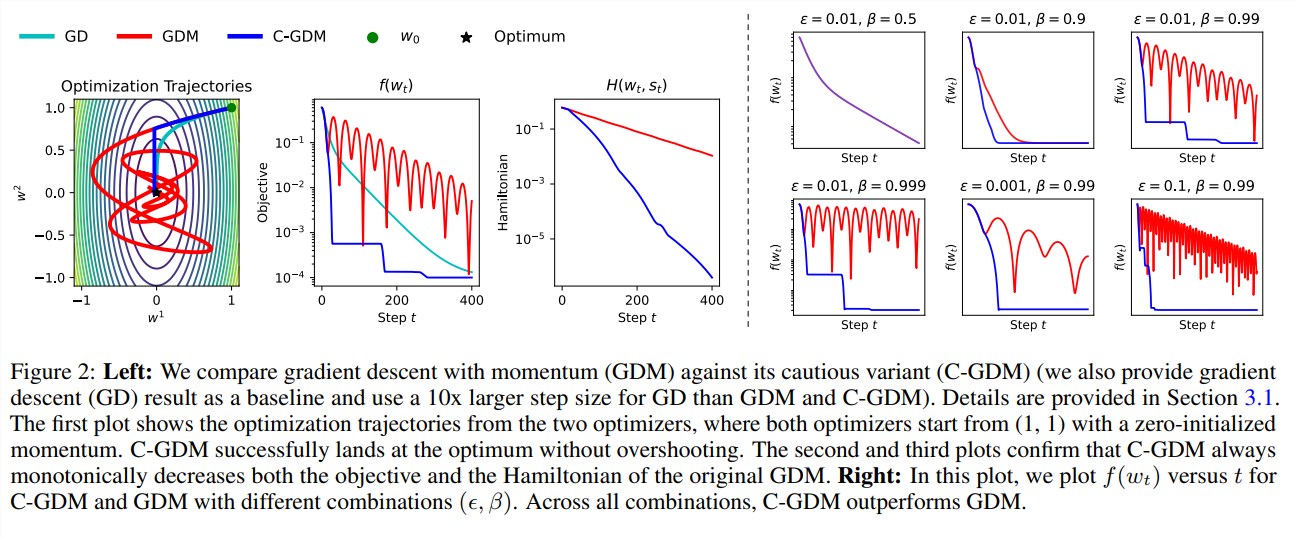

This is where C-AdamW comes in. Like a wise elder with discerning eyes, it checks, for each parameter, whether the proposed update agrees in sign with the current gradient. Where the two disagree, C-AdamW zeroes out that component of the update, keeping the model from going further down the wrong road.
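A minimal sketch of that per-parameter check in plain Python, assuming the rule is "keep a coordinate only when the update and the gradient have the same sign". The function names are hypothetical, and the authors' code works on tensors rather than Python lists.

```python
def cautious_mask(update, grad):
    # 1.0 where the proposed update and the current gradient agree in sign,
    # 0.0 where they disagree (or where either value is exactly zero).
    return [1.0 if u * g > 0 else 0.0 for u, g in zip(update, grad)]


def apply_mask(update, mask):
    # Zero out the rejected coordinates; accepted ones pass through unchanged.
    return [u * m for u, m in zip(update, mask)]


update = [0.5, -0.2, 0.3, -0.1]  # e.g. AdamW's proposed update direction
grad = [1.0, 0.4, -0.2, -0.3]    # gradient at the current parameters
mask = cautious_mask(update, grad)
print(mask)  # [1.0, 0.0, 0.0, 1.0]
masked_update = apply_mask(update, mask)
```

Only the first and last coordinates survive: in the middle two, stepping along `update` would move against the gradient.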

This "cautious" strategy ensures that, at least to first order for small steps, no update increases the loss, which speeds up the model's convergence. Experiments show that C-AdamW speeds up Llama and MAE pre-training by up to 1.47x!
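The claim can be made concrete with a first-order argument. Written in our own notation (a sketch, not the paper's exact formulation, and ignoring mask rescaling and weight decay), let $u_t$ be the base AdamW update direction, $g_t$ the current gradient, $\eta$ the learning rate, $\odot$ elementwise multiplication, and $\phi(x) = \mathbb{1}[x > 0]$:

$$
\tilde{u}_t = u_t \odot \phi(u_t \odot g_t), \qquad w_{t+1} = w_t - \eta\, \tilde{u}_t
$$

$$
L(w_{t+1}) \approx L(w_t) - \eta\, \langle g_t, \tilde{u}_t \rangle
= L(w_t) - \eta \sum_i g_{t,i}\, u_{t,i}\, \mathbb{1}[u_{t,i}\, g_{t,i} > 0] \le L(w_t)
$$

Every term in the sum is non-negative, so for a small enough step the masked update cannot increase the loss to first order, whereas an unmasked $u_t$ can have a negative inner product with $g_t$.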

More importantly, C-AdamW adds almost no computational overhead and can be implemented with a one-line change to existing PyTorch code. This means developers can easily apply C-AdamW to all kinds of model training and enjoy the extra speed!
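To show what the "one-line" change amounts to, here is a pure-Python sketch of a single C-AdamW step on flat lists of floats. Everything outside the mask is ordinary AdamW with decoupled weight decay. The helper names (`c_adamw_step`, `make_state`), the hyperparameter defaults, and the rescaling of the mask by the fraction of kept coordinates (floored at 1e-3) are assumptions for illustration, not a copy of the code in the C-Optim repository.

```python
import math


def c_adamw_step(params, grads, state, lr=1e-3, betas=(0.9, 0.999),
                 eps=1e-8, weight_decay=0.01):
    """Return new params after one Cautious AdamW step (illustrative sketch).

    params, grads: lists of floats; state: dict with keys "t", "m", "v".
    """
    beta1, beta2 = betas
    state["t"] += 1
    t, m, v = state["t"], state["m"], state["v"]

    # Standard AdamW: update the moment estimates and bias-correct them.
    updates = []
    for i, g in enumerate(grads):
        m[i] = beta1 * m[i] + (1 - beta1) * g
        v[i] = beta2 * v[i] + (1 - beta2) * g * g
        m_hat = m[i] / (1 - beta1 ** t)
        v_hat = v[i] / (1 - beta2 ** t)
        updates.append(m_hat / (math.sqrt(v_hat) + eps))

    # The "cautious" change: keep only coordinates whose update agrees in
    # sign with the current gradient, then divide by the kept fraction
    # (floored at an assumed 1e-3) so the average step size stays comparable.
    mask = [1.0 if u * g > 0 else 0.0 for u, g in zip(updates, grads)]
    kept_fraction = max(sum(mask) / len(mask), 1e-3)

    new_params = []
    for p, u, keep in zip(params, updates, mask):
        p = p * (1 - lr * weight_decay)        # decoupled weight decay
        p = p - lr * u * keep / kept_fraction  # masked, rescaled Adam step
        new_params.append(p)
    return new_params


def make_state(n):
    # Hypothetical optimizer state: step counter plus first/second moments.
    return {"t": 0, "m": [0.0] * n, "v": [0.0] * n}


# Usage: minimize the toy loss f(w) = 0.5 * sum(w_i^2), whose gradient is w.
w = [1.0, -2.0, 3.0]
state = make_state(len(w))
for _ in range(200):
    w = c_adamw_step(w, list(w), state, lr=0.1)
print(w)
```

Rescaling is a design choice: zeroing entries would otherwise shrink the effective step, so dividing by the kept fraction keeps the overall update magnitude roughly where plain AdamW would put it.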

The "Buddhist" side of C-AdamW is that it preserves Adam's Hamiltonian function and does not break the convergence guarantee under Lyapunov analysis. In other words, C-AdamW is not only faster but also keeps Adam's theoretical stability guarantees, so it should not introduce new problems such as training collapse.

Of course, "Buddhist" does not mean unambitious. The research team says it will continue exploring richer ϕ functions (the function that turns the agreement between update and gradient into a mask) and applying masks in feature space rather than parameter space to further improve C-AdamW.

C-AdamW may well become a new favorite in deep learning and could bring significant changes to large-model training!

Paper address: https://arxiv.org/abs/2411.16085

GitHub: https://github.com/kyleliang919/C-Optim

C-AdamW offers a new approach to the efficiency and energy problems of large-model training. Its speed and low cost give it broad application prospects, and its future development in deep learning is worth watching.